
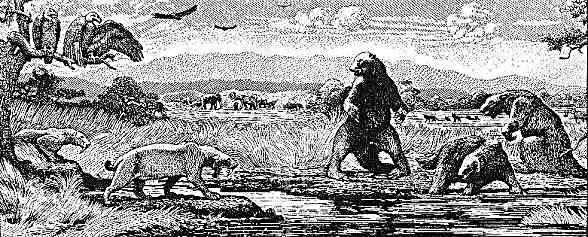

"No scene from prehistory is quite so vivid as that of the mortal struggles of great beasts in the tar pits. The fiercer the struggle, the more entangling the tar, and no beast is so strong or so skillful but that he ultimately sinks."


The Mythical Man-Month: Essays on Software Engineering by Frederick Brooks Jr. Anniversary Edition, paperback, 322 pages. Published by Addison-Wesley Pub Co in July 1995.

Contents

Preface

The Tar Pit

The Mythical Man-Month

The Surgical Team

Aristocracy, Democracy, and System Design

The Second-System Effect

Passing the Word

Why Did the Tower of Babel Fail?

Calling the Shot

Ten Pounds in a Five-Pound Sack

The Documentary Hypothesis

Plan to Throw One Away

Sharp Tools

The Whole and the Parts

Hatching a Catastrophe

The Other Face

Epilogue

Notes and references

Index


The book was first published in 1975 and was based on Fred Brooks's experience with the design of operating systems for the famous IBM System/360 series. It is interesting that OS/360 was generally a failure: a badly designed and in many respects inferior OS.

But the System/360 hardware was really great, a masterpiece of engineering, and Fred Brooks was responsible for at least one genius decision: to use 8-bit bytes.

Both the hardware and the descendants of the operating systems designed for those machines are still sold by IBM (the IBM mainframe business).

While OS/360 was inferior from the very beginning, it was a huge success because the hardware was a huge success (a rising tide lifts all boats). Despite being a failed, inferior architecture, it survived.

But due to the deficiencies of the OS, some really good pieces of the System/360 software suites did not fare equally well. For example, PL/1, an innovative programming language that pioneered the use of exceptions (and inspired C), is dead, despite having a good initial compiler (PL/1 F) and later two really brilliantly written compilers (debugging and optimizing), each of which is still a masterpiece of software engineering. At the same time, the ugly JCL is still in use.

Despite his less than stellar accomplishments as the head of the project, Brooks wrote a really brilliant book. Most of the material in the book is as relevant today as it was when it was originally written.

Understandably, though, part of the material is outdated. In the 20th Anniversary Edition, rather than update the original text, which is considered "classical," Brooks has wisely decided to add new chapters. These revisit the issues presented, shedding fresh light on them from the perspective of the 1990s. Personally, I recommend reading the relevant parts of Chapter 18 after reading each previous chapter. Chapter 18 is titled "Propositions of The Mythical Man-Month: True or False?", and it contains updated information about each of the chapters from 1 to 17.

The book has not lost its value because it contains unique observations about large-scale software development, observations that can't be found anywhere else (for example, Steve McConnell is just a consultant who never headed large projects). Brooks touches on things that are typical but not highly visible in any complex engineering project. Here is my compilation of key points from the book:

When you stop to think about it, this phenomenon is easy to understand. There is an inescapable overhead to yoking up programmers in parallel. The members of the team must "waste time" attending meetings, drafting project plans, exchanging EMAIL, negotiating interfaces, enduring performance reviews, and so on. In any team of more than a few people, at least one member will be dedicated to "supervising" the others, while another member will be dedicated to housekeeping functions such as managing builds, updating Gantt charts, and coordinating everyone's calendar. At Microsoft, there will be at least one team member that just designs T-shirts for the rest of the team to wear. And as the team grows, there is a combinatorial explosion such that the percentage of effort devoted to communication and administration becomes larger and larger.

The anniversary edition contains, in addition to the original book, his influential 1986 essay "No Silver Bullet", which was originally an invited paper for the IFIP '86 conference in Dublin and was later published in Computer magazine. In this paper, Brooks predicted that no technology would be found within ten years of its publication (in 1986) that would improve the process of software development by an order of magnitude. Nine years later, in retrospect, Brooks sadly notes that he was right. Scripting languages changed this a little, but it still remains true as of November 2009.

Brooks discusses several causes of scheduling failures. The most enduring is his discussion of Brooks's law: adding manpower to a late software project makes it later. A man-month is a hypothetical unit of work representing the work done by one person in one month; Brooks's law says that the possibility of measuring useful work in man-months is a myth, and it is hence the centerpiece of the book.

Complex programming projects cannot be perfectly partitioned into discrete tasks that can be worked on without communication between the workers and without establishing a set of complex interrelationships between tasks and the workers performing them.

Therefore, assigning more programmers to a project running behind schedule will make it even later. This is because the time required for the new programmers to learn about the project and the increased communication overhead will consume an ever-increasing share of the available calendar time. When n people have to communicate among themselves, the number of communication channels grows as n(n-1)/2, so as n increases their output decreases, and once it becomes negative the project is delayed further with every person added.
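In Chapter 2 Brooks counts the coordination burden as n(n-1)/2 pairwise channels. The toy model below is only a sketch of how that quadratic term overwhelms linear headcount: it assumes, hypothetically, that each worker produces one unit of work per month and that every channel costs a fixed fraction of a month (`overhead_per_channel` is an illustrative parameter, not a figure from the book).

```python
def communication_channels(n: int) -> int:
    """Number of pairwise communication channels among n workers: n(n-1)/2."""
    return n * (n - 1) // 2


def effective_output(n: int, overhead_per_channel: float) -> float:
    """Toy model (assumption): each worker adds 1 unit of work per month,
    and each pairwise channel consumes a fixed fraction of a month."""
    return n - overhead_per_channel * communication_channels(n)


if __name__ == "__main__":
    # Hypothetical overhead of 0.1 person-months per channel per month.
    for n in (1, 5, 10, 15, 20, 25):
        print(n, communication_channels(n), effective_output(n, 0.1))
```

With an overhead of 0.1, output in this model peaks at 5.5 units with 10 or 11 people, reaches zero at 21, and is negative from 22 on; the exact numbers depend entirely on the assumed overhead.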

Brooks added "No Silver Bullet: Essence and Accidents of Software Engineering" and further reflections on it, "'No Silver Bullet' Refired," to the anniversary edition of The Mythical Man-Month.

Brooks insists that there is no one silver bullet -- "there is no single development, in either technology or management technique, which by itself promises even one order of magnitude [tenfold] improvement within a decade in productivity, in reliability, in simplicity."

The argument relies on the distinction between accidental complexity and essential complexity, similar to the way Amdahl's law relies on the distinction between "strictly serial" and "parallelizable".
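A back-of-the-envelope reading of the Amdahl analogy: if a fraction p of total effort is accidental and a new tool shrinks that part by a factor s, Amdahl's formula bounds the overall gain, and even eliminating accidental work entirely caps it at 1/(1 - p). Splitting effort into two clean fractions is a simplifying assumption for illustration, and the function names are hypothetical.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Amdahl's law: overall speedup when a fraction p of the work
    is made s times faster and the rest (1 - p) is unchanged."""
    return 1.0 / ((1.0 - p) + p / s)


def max_gain_from_removing_accidents(accidental_fraction: float) -> float:
    """Upper bound as s grows without limit: only the essential
    fraction (1 - p) of the effort remains."""
    return 1.0 / (1.0 - accidental_fraction)


if __name__ == "__main__":
    for p in (0.5, 0.75, 0.9):
        print(p, amdahl_speedup(p, 10.0), max_gain_from_removing_accidents(p))
```

Under this model a tenfold improvement is possible only if accidental work exceeds 90% of all effort, which matches the gist of Brooks's skepticism.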

The second-system effect proposes that, when an architect designs a second system, it is the most dangerous system they will ever design, because they will tend to incorporate all of the additions they originally did not add to the first system due to inherent time constraints. Thus, when embarking on a second system, an engineer should be mindful that they are susceptible to over-engineering it.

99 little bugs in the code.

99 little bugs.

Take one down, patch it around.

127 little bugs in the code...[3]

The author makes the observation that in a suitably complex system there is a certain irreducible number of errors. Any attempt to fix observed errors tends to result in the introduction of other errors.

Brooks wrote "Question: How does a large software project get to be one year late? Answer: One day at a time!" Incremental slippages on many fronts eventually accumulate to produce a large overall delay. Continued attention to meeting small individual milestones is required at each level of management.

To make a user-friendly system, the system must have conceptual integrity, which can only be achieved by separating architecture from implementation. A single chief architect (or a small number of architects), acting on the user's behalf, decides what goes in the system and what stays out. The architect or team of architects should develop an idea of what the system should do and make sure that this vision is understood by the rest of the team. A novel idea by someone may not be included if it does not fit seamlessly with the overall system design. In fact, to ensure a user-friendly system, a system may deliberately provide fewer features than it is capable of. The point is that, if a system is too complicated to use, then many of its features will go unused because no one has the time to learn how to use them.

The chief architect produces a manual of system specifications. It should describe the external specifications of the system in detail, i.e., everything that the user sees. The manual should be altered as feedback comes in from the implementation teams and the users.

When designing a new kind of system, a team will design a throw-away system (whether it intends to or not). This system acts as a "pilot plant" that reveals techniques that will subsequently cause a complete redesign of the system. This second, smarter system should be the one delivered to the customer, since delivery of the pilot system would cause nothing but agony to the customer, and possibly ruin the system's reputation and maybe even the company.

Every project manager should create a small core set of formal documents defining the project objectives, how they are to be achieved, who is going to achieve them, when they are going to be achieved, and how much they are going to cost. These documents may also reveal inconsistencies that are otherwise hard to see.

When estimating project times, it should be remembered that programming products (which can be sold to paying customers) and programming systems are both three times as hard to write as simple independent in-house programs.[4] It should be kept in mind how much of the work week will actually be spent on technical issues, as opposed to administrative or other non-technical tasks, such as meetings, and especially "stand-up" or "all-hands" meetings.
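In "The Tar Pit" Brooks combines the two factors: a programming systems product, which is both a product and a system component, costs roughly nine times as much as a simple standalone program. The sketch below applies those multipliers to a base estimate; `technical_fraction`, the share of the work week actually spent on technical work, is a hypothetical parameter added here to reflect the second sentence of the paragraph, not a number from the book.

```python
PRODUCT_FACTOR = 3  # generalization, testing, and documentation for outside users
SYSTEM_FACTOR = 3   # precise interfaces and integration testing with other components


def estimate_effort(base_months: float, is_product: bool, is_system: bool,
                    technical_fraction: float = 1.0) -> float:
    """Scale an estimate for a simple in-house program by Brooks's factors,
    then stretch it by the (assumed) fraction of time spent on technical work."""
    if not 0.0 < technical_fraction <= 1.0:
        raise ValueError("technical_fraction must be in (0, 1]")
    factor = 1
    if is_product:
        factor *= PRODUCT_FACTOR
    if is_system:
        factor *= SYSTEM_FACTOR
    return base_months * factor / technical_fraction


if __name__ == "__main__":
    # A 2-month in-house program turned into a programming systems product,
    # with only half of each week available for technical work.
    print(estimate_effort(2, is_product=True, is_system=True, technical_fraction=0.5))
```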

To avoid disaster, all the teams working on a project should remain in contact with each other in as many ways as possible: e-mail, phone, meetings, memos, etc. Instead of assuming something, implementers should ask the architect(s) to clarify their intent on a feature they are implementing, before proceeding with an assumption that might very well be completely incorrect. The architect(s) are responsible for formulating a group picture of the project and communicating it to others.

Much as a surgical team during surgery is led by one surgeon performing the most critical work, while directing the team to assist with less critical parts, it seems reasonable to have a "good" programmer develop critical system components while the rest of a team provides what is needed at the right time. Additionally, Brooks muses that "good" programmers are generally five to ten times as productive as mediocre ones.

Software is invisible. Therefore, many things only become apparent once a certain amount of work has been done on a new system, allowing a user to experience it. This experience will yield insights, which will change a user's needs or the perception of the user's needs. The system should, therefore, be changed to fulfill the changed requirements of the user. This can only occur up to a certain point, otherwise the system may never be completed. At a certain date, no more changes should be allowed to the system and the code should be frozen. All requests for changes should be delayed until the next version of the system.

Instead of every programmer having his own special set of tools, each team should have a designated tool-maker who may create tools that are highly customized for the job that team is doing, e.g., a code generator tool that creates code based on a specification. In addition, system-wide tools should be built by a common tools team, overseen by the project manager.

There are two techniques for lowering software development costs that Brooks writes about:

Frederick P. Brooks, Jr. is the "father of the IBM System 360". While manager of the 360 project, it was Dr. Brooks who specified that a byte would consist of 8 bits and that a word would consist of 4 bytes. Whether or not you agree with his decision, it's hard to argue that it has not had a huge impact on the computer field.

Fred Brooks must have been a very high-level politician in the IBM of his time. Being in command of several thousand programmers is a highly political assignment, and that creates a subtle analogy between the book and Machiavelli's "The Prince". In his time Machiavelli was also a senior civil servant in the Republic of Florence. Later the Medici returned to Florence, the Republic was replaced with absolute rule, and Machiavelli was dismissed from his job. Out of favor and out of a job, he found himself under house arrest. The only thing left to do was to write a book with his reflections on the process of gaining and maintaining power. Do you think the new rulers were stupid enough to hire a clever civil servant of the previous regime? So people like Fred Brooks and Machiavelli are probably very clever, but not clever enough. Fouche and Talleyrand, for that matter, never wrote books: they were men of action.

No book on software project management has been as influential or as timeless as The Mythical Man-Month. Even now, 20 years after its initial publication, it is still very important.

He was born in 1931 in Durham, North Carolina and grew up in nearby Greenville, NC. He was interested in computers during his teenage years, and went on to double major in math and physics at Duke University. At Harvard, he studied for his Ph.D. under Howard Aiken, the inventor of the early Harvard computers. He joined IBM in 1956, working in Poughkeepsie and Yorktown, NY, and in 1957 Brooks and Dura Sweeney patented an interrupt system for the IBM Stretch computer that introduced most features of today's interrupt systems.

In the early 1960s he was the leader of the development team for the IBM 360 Series. The 360 quickly became the most popular mainframe computer on the market. In 1964, Brooks left IBM to found the Computer Science department at the University of North Carolina at Chapel Hill and to become a professor of Computer Science. He has chaired the department for the past twenty years.

Throughout his career, Brooks has authored two books in the field of Computer Science, The Mythical Man-Month: Essays on Software Engineering and Computer Architecture: Concepts and Evolution.

The Mythical Man-Month is undoubtedly his most famous work, in which he compiled essays about his work on the IBM 360 Series. It also contains the famous "No Silver Bullet" essay, in which he likens software projects to werewolves that can only be killed by a "silver bullet." Brooks is also known for his aphorisms, the most popular being Brooks's law: "Adding manpower to a late software project makes it later." Many of his quotes are applicable in a wider context, such as "Complexity is the fatal foe" (p. 344).

Brooks is still a professor of Computer Science at UNC today.


The Register

IBM's System 360 mainframe, celebrating its 50th anniversary on Monday, was more than just another computer. The S/360 changed IBM just as it changed computing and the technology industry.

The digital computers that were to become known as mainframes were already being sold by companies during the 1950s and 1960s - so the S/360 wasn't a first.

Where the S/360 was different was that it introduced a brand-new way of thinking about how computers could and should be built and used.

The S/360 made computing affordable and practical - relatively speaking. We're not talking the personal computer revolution of the 1980s, but it was a step.

The secret was a modern system: a new architecture and design that allowed the manufacturer - IBM - to churn out S/360s at relatively low cost. This had the more important effect of turning mainframes into a scalable and profitable business for IBM, thereby creating a mass market.

The S/360 democratised computing, taking it out of the hands of government and universities and putting its power in the hands of many ordinary businesses.

The birth of IBM's mainframe was made all the more remarkable given that making the machine required not just a new way of thinking but a new way of manufacturing. The S/360 produced a corporate and a mental restructuring of IBM, turning it into the computing giant we have today.

The S/360 also introduced new technologies, such as IBM's Solid Logic Technology (SLT) in 1964 that meant a faster and a much smaller machine than what was coming from the competition of the time.

Big Blue introduced new concepts and de facto standards that are still with us now: virtualisation - the toast of cloud computing on the PC and distributed x86 servers that succeeded the mainframe - and the 8-bit byte over the 6-bit byte.

The S/360 helped IBM see off a rising tide of competitors such that by the 1970s, rivals were dismissively known as "the BUNCH" or the dwarves. Success was a mixed blessing for IBM, which got in trouble with US regulators for being "too" successful and spent a decade fighting a government anti-trust law suit over the mainframe business.

The legacy of the S/360 is with us today, outside of IBM and the technology sector.

naylorjs

S/360 I knew you well

The S/390 name is a hint to its lineage, S/360 -> S/370 -> S/390, I'm not sure what happened to the S/380. Having made a huge jump with S/360 they tried to do the same thing in the 1970s with the Future Systems project, this turned out to be a huge flop, lots of money spent on creating new ideas that would leapfrog the competition, but ultimately failed. Some of the ideas emerged on the System/38 and onto the original AS/400s, like having a query-able database for the file system rather than what we are used to now.

The link to NASA with the S/360 is explicit with JES2 (Job Entry Subsystem 2), the element of the OS that controls batch jobs and the like. Messages from JES2 start with the prefix HASP, which stands for Houston Automatic Spooling Program.

As a side note, CICS is developed at Hursley Park in Hampshire. It wasn't started there though. CICS system messages start with DFH which allegedly stands for Denver Foot Hills. A hint to its physical origins, IBM swapped the development sites for CICS and PL/1 long ago.

I've not touched an IBM mainframe for nearly twenty years, and it worries me that I have this information still in my head. I need to lie down!

Ross Nixon

Re: S/360 I knew you well

I have great memories of being a Computer Operator on a 360/40. They were amazingly capable and interesting machines (and peripherals).

QuiteEvilGraham

Re: S/360 I knew you well

ESA is the bit that you are missing - the whole extended address thing, data spaces, hyperspaces and cross-memory extensions.

Fantastic machines though - I learned everything I know about computing from Principles of Operation and the source code for VM/SP - they used to ship you all that, and send you the listings for everything else on microfiche. I almost feel sorry for the younger generations that they will never see a proper machine room with the ECL water-cooled monsters and attendant farms of DASD and tape drives. After the 9750's came along they sort of look like very groovy American fridge-freezers.

Mind you, I can get better mippage on my Thinkpad with Hercules than the 3090 I worked with back in the 80's, but I couldn't run a UK-wide distribution system, with thousands of concurrent users, on it.

Nice article, BTW, and an upvote for the post mentioning The Mythical Man Month; utterly and reliably true.

Happy birthday IBM Mainframe, and thanks for keeping me in gainful employment and beer for 30 years!

Anonymous Coward

Re: S/360 I knew you well

I started programming on an IBM 360/67 and have programmed several IBM mainframe computers. One of the reasons for the ability to handle large amounts of data is that these machines communicate to terminals in EBCDIC characters, which is similar to ASCII. It took very few of these characters to program the 3270 display terminals, while modern X86 computers use a graphical display and need a lot of data transmitted to paint a screen. I worked for a company that had an IBM-370-168 with VM running both os and VMS. We had over 1500 terminals connected to this mainframe over 4 states. IBM had visioned that VM/CMS. CICS was only supposed to be a temporary solution to handling display terminals, but it became the mainstay in many shops. Our shop had over 50 3330 300 meg disk drives online with at least 15 tape units. These machines are in use today, in part, because the cost of converting to X86 is prohibitive. On these old 370 CICS, the screens were separate from the program. JCL (job control language) was used to initiate jobs, but unlike modern batch files, it would attach resources such as a hard drive or tape to the program. This is totally foreign to any modern OS. Linux or Unix can come close but MS products are totally different.

Stephen Channell

Re: S/360 I knew you well

S/380 was the "future systems program" that was cut down to the S/38 mini.

HASP was the original "grid scheduler" in Houston running on a dedicated mainframe scheduling work to the other 23 mainframes under the bridge.. I nearly wet myself with laughter reading Data-Synapse documentation and their "invention" of a job-control-language. 40 years ago HASP was doing Map/Reduce to process data faster than a tape-drive could handle.

If we don't learn the lessons of history, we are destined to IEFBR14!

Pete 2

Come and look at this!

As a senior IT bod said to me one time, when I was doing some work for a mobile phone outfit.

"it's an IBM engineer getting his hands dirty".

And so it was: a hardware guy, with his sleeves rolled up and blood grime on his hands, replacing a failed board in an IBM mainframe.

The reason it was so noteworthy, even in the early 90's, was that it was such a rare occurrence. It was probably one of the major selling points of IBM computers that they didn't blow a gasket if you looked at them wrong (the other one, with just as much traction, being the ability to do a fork-lift upgrade in a weekend and know it would work).

The reliability and compatibility across ranges is why people choose this kit. It may be arcane, old-fashioned, expensive and untrendy - but it keeps on running.

The other major legacy of OS/360 was, of course, The Mythical Man Month, whose readership is still the most reliable way of telling the professional IT managers from the wannabes who only have buzzwords as a knowledge base.

Amorous Cowherder

Re: Come and look at this!

They were bloody good guys from IBM!

I started off working on mainframes around 1989, as graveyard shift "tape monkey" loading tapes for batch jobs. My first solo job was as a Unix admin on a set of RS/6000 boxes, I once blew out the firmware and a test box wouldn't boot. I called out an IBM engineer after I completely "futzed" the box, he came out and spent about 2 hours with me teaching me how to select and load the correct firmware. He then spent another 30 mins checking my production system with me and even left me his phone number so I could call him directly if I needed help when I did the production box. I did the prod box with no issues because of the confidence I got and the time he spent with me. Cheers!

David Beck

Re: 16 bit byte?

The typo must be fixed, the article says 6-bit now. The following is for those who have no idea what we are talking about.

Generally machines prior to the S/360 were 6-bit if character or 36-bit if word oriented. The S/360 was the first IBM architecture (thank you Drs Brooks, Blaauw and Amdahl) to provide both data types with appropriate instructions, to include a "full" character set (256 characters instead of 64) and to provide a concise decimal format (2 digits in one character position instead of 1); 8 bits was chosen as the "character" length. It did mean a lot of Fortran code had to be reworked to deal with 32-bit single precision or 32-bit integers instead of the previous 36-bit.
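The "concise decimal format" is packed decimal. A minimal sketch of the encoding, assuming the common S/360 convention of two BCD digits per byte with the sign in the low nibble of the last byte (C for plus, D for minus); the function names are illustrative, not an IBM API.

```python
def pack_decimal(value: int) -> bytes:
    """Encode an integer as packed decimal: two BCD digits per byte,
    sign in the low nibble of the last byte (0xC = plus, 0xD = minus)."""
    sign = 0xD if value < 0 else 0xC
    nibbles = [int(d) for d in str(abs(value))] + [sign]
    if len(nibbles) % 2:  # pad with a leading zero digit to fill whole bytes
        nibbles.insert(0, 0)
    return bytes((nibbles[i] << 4) | nibbles[i + 1] for i in range(0, len(nibbles), 2))


def unpack_decimal(data: bytes) -> int:
    """Decode packed decimal; sign nibbles 0xB and 0xD mean minus, others plus."""
    nibbles = []
    for byte in data:
        nibbles += [byte >> 4, byte & 0x0F]
    *digits, sign = nibbles
    magnitude = int("".join(str(d) for d in digits))
    return -magnitude if sign in (0xB, 0xD) else magnitude


if __name__ == "__main__":
    packed = pack_decimal(-98765)
    print(packed.hex(), unpack_decimal(packed))
```

Five decimal digits plus a sign fit in 3 bytes this way, versus 5 or more bytes at one character per digit.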

If you think the old ways are gone, have a look at the data formats for the Unisys 2200.

John Hughes

Virtualisation

Came with the S/370, not the S/360, which didn't even have virtual memory.

Steve Todd

Re: Virtualisation

The 360/168 had it, but it was a rare beast.

Mike 140

Re: Virtualisation

Nope. CP/67 was the forerunner of IBM's VM. Ran on S/360

David Beck

Re: Virtualisation

S/360 Model 67 running CP67 (CMS which became VM) or the Michigan Terminal System. The Model 67 was a Model 65 with a DAT box to support paging/segmentation but CP67 only ever supported paging (I think, it's been a few years).

Steve Todd

Re: Virtualisation

The 360/168 had a proper MMU and thus supported virtual memory. I interviewed at Bradford university, where they had a 360/168 that they were doing all sorts of things that IBM hadn't contemplated with (like using conventional glass teletypes hooked to minicomputers so they could emulate the page based - and more expensive - IBM terminals).

I didn't get to use an IBM mainframe in anger until the 3090/600 was available (where DEC told the company that they'd need a 96 VAX cluster and IBM said that one 3090/600J would do the same task). At the time we were using VM/TSO and SQL/DS, and were hitting 16MB memory size limits.

Peter Gathercole

Re: Virtualisation @Steve Todd

I'm not sure that the 360/168 was a real model. The Wikipedia article does not think so either.

As far as I recall, the only /168 model was the 370/168, one of which was at Newcastle University in the UK, serving other Universities in the north-east of the UK, including Durham (where I was) and Edinburgh.

They also still had a 360/65, and one of the exercises we had to do was write some JCL in OS/360. The 370 ran MTS rather than an IBM OS.

Grumpy Guts

Re: Virtualisation

You're right. The 360/67 was the first VM - I had the privilege of trying it out a few times. It was a bit slow though. The first version of CP/67 only supported 2 terminals I recall... The VM capability was impressive. You could treat files as though they were in real memory - no explicit I/O necessary.

Chris Miller

This was a big factor in the profitability of mainframes. There was no such thing as an 'industry-standard' interface - either physical or logical. If you needed to replace a memory module or disk drive, you had no option* but to buy a new one from IBM and pay one of their engineers to install it (and your system would probably be 'down' for as long as this operation took). So nearly everyone took out a maintenance contract, which could easily run to an annual 10-20% of the list price. Purchase prices could be heavily discounted (depending on how desperate your salesperson was) - maintenance charges almost never were.

* There actually were a few IBM 'plug-compatible' manufacturers - Amdahl and Fujitsu. But even then you couldn't mix and match components - you could only buy a complete system from Amdahl, and then pay their maintenance charges. And since IBM had total control over the interface specs and could change them at will in new models, PCMs were generally playing catch-up.

David Beck

Re: Maintenance

So true re the service costs, but "Field Engineering" was a profit centre, and a big one at that. Not true regarding having to buy "complete" systems for compatibility. In the 70's I had a room full of CDC disks on a Model 40 bought because they were cheaper and had a faster linear motor positioner (the thing that moved the heads), while the real 2311's used hydraulic positioners. Bad day when there was a puddle of oil under the 2311.

John Smith

@Chris Miller

"This was a big factor in the profitability of mainframes. There was no such thing as an 'industry-standard' interface - either physical or logical. If you needed to replace a memory module or disk drive, you had no option* but to buy a new one from IBM and pay one of their engineers to install it (and your system would probably be 'down' for as long as this operation took). So nearly everyone took out a maintenance contract, which could easily run to an annual 10-20% of the list price. Purchase prices could be heavily discounted (depending on how desperate your salesperson was) - maintenance charges almost never were."

True.

Back in the day one of the Scheduler software suppliers made a shed load of money (the SW was $250k a pop) by making new jobs start a lot faster and letting shops put back their memory upgrades by a year or two.

Mainframe memory was expensive.

Now owned by CA (along with many things mainframe) and so probably gone to s**t.

tom dial

Re: Maintenance

Done with some frequency. In the DoD agency where I worked we had mostly Memorex disks as I remember it, along with various non-IBM as well as IBM tape drives, and later got an STK tape library. Occasionally there were reports of problems where the different manufacturers' CEs would try to shift blame before getting down to the fix.

I particularly remember rooting around in a Syncsort core dump that ran to a couple of cubic feet from a problem eventually tracked down to firmware in a Memorex controller. This highlighted the enormous I/O capacity of these systems, something that seems to have been overlooked in the article. The dump showed mainly long sequences of chained channel programs that allowed the mainframe to transfer huge amounts of data by executing a single instruction to the channel processors, and perform other possibly useful work while awaiting completion of the asynchronous I/O.

Mike Pellatt

Re: Maintenance

@ChrisMiller - The IBM I/O channel was so well-specified that it was pretty much a standard. Look at what the Systems Concepts guys did - a Dec10 I/O and memory bus to IBM channel converter. Had one of those in the Imperial HENP group so we could use IBM 6250bpi drives as DEC were late to market with them. And the DEC 1600 bpi drives were horribly unreliable.

The IBM drives were awesome. It was always amusing explaining to IBM techs why they couldn't run online diags, on the rare occasions when they needed fixing.

David Beck

Re: Maintenance

It all comes flooding back.

A long CCW chain, some of which are the equivalent of NOP in channel talk (where did I put that green card?) with a TIC (Transfer in Channel, think branch) at the bottom of the chain back to the top. The idea was to take an interrupt (PCI) on some CCW in the chain and get back to convert the NOPs to real CCWs to continue the chain without ending it. Certainly the way the page pool was handled in CP67.

And I too remember the dumps coming on trollies. There was software to analyse a dump tape but that name is now long gone (as was the origin of most of the problems in the dumps). Those were the days I could not just add and subtract in hex but multiply as well.

Peter Simpson

The Mythical Man-Month

Fred Brooks's seminal work on the management of large software projects was written after he managed the design of OS/360. If you can get around the mentions of secretaries, typed meeting notes and keypunches, it's required reading for anyone who manages a software project. Come to think of it...*any* engineering project. I've recommended it to several people and been thanked for it.

// Real Computers have switches and lights...

Madeye

The Mythical Man-Month

The key concepts of this book are as relevant today as they were back in the 60s and 70s - it is still oft quoted ("there are no silver bullets" being one I've heard recently). Unfortunately fewer and fewer people have heard of this book these days and even fewer have read it, even in project management circles.

WatAWorld

Was IBM ever cheaper?

I've been in IT since the 1970s.

My understanding from the guys who were old-timers when I started was that the big thing with the 360 was the standardized op codes, which would remain the same from model to model, with enhancements; an op code would never be withdrawn.

The beauty of IBM S/360 and S/370 was that you had model independence. The promise was made, and the promise was kept, that once you had rewritten your programs in BAL (360's Basic Assembler Language) you'd never have to recode your assembler programs again.

Also, the relocating loader and method of link editing meant you didn't have to reassemble programs to run them on a different computer. Either they would simply run as is, or they would run after being relinked. (When I started, linking might take 5 minutes where reassembling might take 4 hours for one program. I seem to recall talk of assemblies taking all day in the 1960s.)

I wasn't there in the 1950s and 60s, but I don't recall anyone ever boasting about how 360s or 370s were cheaper than competitors.

IBM products were always the most expensive, easily the most expensive, at least in Canada.

But maybe it was like that in the UK. After all, the UK had its own native computer manufacturers that IBM had to squeeze out, despite patriotism still being a thing in business at the time.

PyLETS

Cut my programming teeth on S/390 TSO architecture

We were developing CAD/CAM programs in this environment starting in the early eighties, because it was what was available then, based on use of this system for stock control in a large electronics manufacturing environment. We fairly soon moved this Fortran code onto smaller machines, DEC/VAX minicomputers and early Apollo workstations. We even had an early IBM PC in the development lab, but this was more a curiosity than something we could do much real work on initially. The Unix-based Apollo and early Sun workstations were much closer to later PCs once these acquired similar amounts of memory, X-Windows-like GUIs, more respectable graphics and storage capabilities, and multi-user operating systems.

Gordon 10

Ahh S/360 I knew thee well

Cut my programming teeth on OS/390 assembler (TPF) at Galileo - one of Amadeus' competitors.

I interviewed for Amadeus's initial project for moving off S/390 in 1999, and it had been planned for at least a year or two before that. Now that was a long-term project!

David Beck

Re: Ahh S/360 I knew thee well

There are people who worked on Galileo still alive? And ACP/TPF still lives, as zTPF? I remember a headhunter chasing me in the early '80s for a job in Oz: Qantas was looking for ACP/TPF coders, $80k US, very tempting.

You can do everything in 2k segments of BAL.

Anonymous IV

No mention of microcode?

Unless I missed it, there was no reference to microcode, which was specific to each individual model of the S/360 and S/370 ranges, at least, and provided the 'common interface' for IBM Assembler op-codes. It is the rough equivalent of PC firmware. It was documented in thick A3 black folders held in two-layer trolleys (most of which held circuit diagrams and other engineering amusements), and was interesting to read (if not to understand). There you could see that the IBM Assembler op-codes each translated into tens or hundreds of microinstructions. Even 0700, the NO-OP, expanded into surprisingly many microinstructions.

John Smith 19

Re: No mention of microcode?

"I first met microcode by writing a routine to do addition for my company's s/370. Oddly, they wouldn't let me try it out on the production system :-)"

I did not know the microcode store was writeable.

Microcode was a core (no pun intended) feature of the S/360/370/390/4030/z architecture.

It allowed IBM to trade actual hardware (e.g. a full-spec hardware multiplier) for a partial implementation (part-word or single-word) or a completely software-based one (a microcode loop), depending on the machine's spec (and the customer's pocket), without needing a recompile, since at the assembler level it would be the same instruction.

I'd guess hacking the microcode would call for exceptional bravery on a production machine.
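The trade-off described above, one architected instruction backed either by dedicated hardware or by a microcode loop, can be sketched in Python. The model names, the `MODELS` table and the function names are hypothetical; the "hardware" path is simply Python's built-in multiplication, and the sketch ignores the signed operands, register pairs and condition codes that a real S/360 multiply deals with.

```python
MASK32 = 0xFFFFFFFF  # treat operands as unsigned 32-bit words (simplification)


def multiply_hardwired(a, b):
    """Stand-in for a full hardware multiplier: the product in one step."""
    return (a & MASK32) * (b & MASK32)


def multiply_microcoded(a, b):
    """Stand-in for a microcode loop: shift-and-add, one multiplier bit per pass."""
    a &= MASK32
    b &= MASK32
    product = 0
    for bit in range(32):
        if (b >> bit) & 1:
            product += a << bit  # add the shifted multiplicand for each set bit
    return product


# Hypothetical range: a cheap model and an expensive model share one opcode.
MODELS = {
    "small": multiply_microcoded,
    "large": multiply_hardwired,
}


def execute_multiply(model, a, b):
    """The program issues the same 'multiply' on any model; only the implementation differs."""
    return MODELS[model](a, b)


print(execute_multiply("small", 123456, 789) == execute_multiply("large", 123456, 789))  # prints True
```

The point is the dispatch: the calling program only ever issues `execute_multiply`, so moving from a cheap microcoded model to an expensive hardwired one changes speed, not results.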

Arnaut the less

Re: No mention of microcode? - floppy disk

Someone will doubtless correct me, but as I understood it the floppy was invented as a way of loading the microcode into the mainframe CPU.

tom dial

The rule of thumb in use (from Brooks's The Mythical Man-Month, as I remember) is around 5 debugged lines of code per programmer per day, pretty much irrespective of the language. And although the end code might have been a million lines, some of it probably needed to be written several times: another memorable Brooks item about large programming projects is "plan to throw one away; you will, anyhow."
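The rule of thumb quoted above turns into a quick back-of-envelope estimate. In this sketch the 230 working days per year is an assumed figure, not one from Brooks, and `programmer_years` is a hypothetical helper.

```python
def programmer_years(lines_of_code, lines_per_day=5, working_days_per_year=230):
    """Effort implied by a debugged-lines-per-programmer-per-day rule of thumb."""
    programmer_days = lines_of_code / lines_per_day
    return programmer_days / working_days_per_year


# A million debugged lines at 5 per day is 200,000 programmer-days.
print(round(programmer_years(1_000_000)))  # prints 870
```

That figure covers only code written once; every piece that has to be thrown away and rewritten adds to it.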

Tom Welsh

Programming systems product

The main reason for what appears, at first sight, to be low productivity is spelled out in "The Mythical Man-Month". Brooks freely concedes that anyone who has just learned to program would expect to be many times more productive than his huge crew of seasoned professionals. Then he explains, with the aid of a diagram divided into four quadrants. Top left, we have the simple program. When a program gets big and complex enough, it becomes a programming system, which takes a team to write rather than a single individual. That introduces many extra time-consuming tasks and much overhead. Going the other way, writing a simple program is far easier than creating a product with software at its core. Something that will be sold as a commercial product must be tested seven ways from Sunday, made as maintainable and extensible as possible, and supplemented with manuals, training courses, technical support services, etc. Finally, put the two together and you get the programming systems product, which Brooks estimates costs about nine times as much to create as an equivalent simple program (roughly three times for each of the two steps).

Tom Welsh

"Why won't you DIE?"

I suppose that witty, but utterly inappropriate, heading was added by an editor; Gavin knows better. If anyone is in doubt, the answer would be the same as for other elderly technology such as houses, roads, clothing, cars, aeroplanes, radio, TV, etc. Namely, it works - and after 50 years of widespread practical use, it has been refined so that it now works *bloody well*. In extreme contrast to many more recent examples of computing innovation, I may add.

Whoever added that ill-advised attempt at humour should be forced to write out 1,000 times:

"The definition of a legacy system: ONE THAT WORKS".

Grumpy Guts

Re: Pay Per Line Of Code

I worked for IBM UK in the 60s and wrote a lot of code for many different customers. There was never a charge; it was all part of the built-in customer support. I even rewrote part of the OS for one system (not S/360, IBM 1710 I think) for Rolls-Royce aero engines to allow all the user code for monitoring engine test cells to fit in memory.

dlc.usa

Sole Source For Hardware?

Even before the advent of Plug Compatible Machines brought competition for the Central Processing Units, the S/360 peripheral hardware market was open to third parties. IBM published the technical specifications for the bus-and-tag channel interfaces, allowing, indeed encouraging, vendors to produce plug-and-play devices for the architecture, even in competition with IBM's own. My first S/360 in 1972 had Marshall, not IBM, disks and a Calcomp drum plotter for which IBM offered no counterpart. This was true of the IBM Personal Computer as well. This type of openness dramatically expands the marketability of a new platform architecture.

RobHib

Eventually we stripped scrapped 360s for components.

"IBM built its own circuits for S/360, Solid Logic Technology (SLT) - a set of transistors and diodes mounted on a circuit twenty-eight-thousandths of a square inch and protected by a film of glass just sixty-millionths of an inch thick. The SLT was 10 times more dense than the technology of its day."

When these machines were eventually scrapped, we used their components for electronic projects. Their unusual construction was a pain: much of the 'componentry' couldn't be used because of it. (That was further compounded by IBM partially smashing modules before they were released as scrap.)

"p3 [Photo caption] The S/360 Model 91 at NASA's Goddard Space Flight Center, with 2,097,152 bytes of main memory, was announced in 1968"

Around that time our 360 had only 44 kB of memory; it was later expanded to 77 kB in about 1969. Why those odd values were chosen is still something of a mystery to me.

David Beck

Re: Eventually we stripped scrapped 360s for components.

@RobHib - The odd memory sizes were probably the memory available to the user, not the hardware size (which came in power-of-two multiples). The size the OS took was a function of what devices were attached and a few other sysgen parameters. Whatever was left after the OS was user space. There was usually a 2K boundary, since memory protect keys worked on 2K chunks, but not always: some customers ran naked to squeeze out those extra bytes.
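The boundary arithmetic described above can be sketched as follows. The 2K block size matches S/360 storage protection; the installed size and OS footprint in the usage lines are made-up numbers for illustration, and `user_space` is a hypothetical helper.

```python
BLOCK = 2048  # S/360 storage-protection keys apply to 2K blocks


def user_space(installed, os_bytes, protected=True):
    """Bytes left for user programs after the OS (hypothetical helper)."""
    if not protected:
        # "Running naked": the user area starts right after the OS.
        return installed - os_bytes
    # Round the end of the OS up to the next 2K boundary so the user
    # area starts on a block that can carry its own protection key.
    start = -(-os_bytes // BLOCK) * BLOCK
    return installed - start


# Made-up example: 64K of real storage and an OS footprint of 21,000 bytes.
print(user_space(65536, 21000) // 1024)            # prints 42 (K, protected)
print(user_space(65536, 21000, protected=False))   # prints 44536
```

Either way the result is an "odd" size even though the hardware itself came in power-of-two multiples; running unprotected recovers the bytes lost to rounding.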

Glen Turner 666

Primacy of software

Good article.

Could have had a little more about the primacy of software: IBM had a huge range of compilers, and having an assembly language common across a wide range of machines was a huge winner (as obvious as that seems today, in an age of a handful of processor instruction sets). Furthermore, IBM had a strong focus on binary compatibility, and the lack of it in some competitors' ranges made shipping software for those machines much more expensive than for IBM's.

IBM also sustained that commitment to development, which meant that until the minicomputer age they were really the only option if you wanted newer features (such as CICS for screen-based transaction processing, VSAM or DB2 for databases, or VMs for a cheaper test versus production environment). Other manufacturers would develop against their forthcoming models, not their shipped models, so IBM would be the company "shipping now" with the feature you desired.

IBM were also very focussed on business. They knew how to market (e.g. the myth of the 'idle' versus 'ready' light on tape drives, white papers to explain technology to managers). They knew how to charge (e.g. essentially a lease, which matched companies' revenue). They knew how to do politics (e.g. lobbying the Australian PM after they lost a government sale). They knew how to do support (with their customer engineers basically being a little bit of IBM embedded at the customer). Their strategic planning is still world class.

I would be cautious about lauding the $0.5B taken to develop the OS/360 software as progress. As a counterpoint, consider Burroughs, who delivered better capability with fewer lines of code, since they wrote in Algol rather than assembler. Both companies got one thing right: huge libraries of code which made life much easier for applications programmers. DEC's VMS learnt that lesson well. It wasn't until MS-DOS that we were suddenly dropped back into an inferior programming environment (but you'll cope with a lot for sheer responsiveness, and it didn't take too long until you could buy in what you needed).

What killed the mainframe was its sheer optimisation for batch and transaction processing and the massive cost if you used it any other way. Consider that TCP/IP used about 3% of the system's resources, or $30k pa of mainframe time. That would pay for a new Unix machine every year to host your website on.

Retrospective Notes

First-time readers of this rant should be aware that it was written well before either the Halloween Documents or the AOL/Sun acquisition of Netscape. For all I know, at this writing, some other relevant event is erupting. Sun's quasi-open-source licensing of Java might even be considered germane. In any case, I disclaim any powers of prophecy that might be inferred from some skewed reading of what follows. That's a little like reading the Book of Revelations as predicting Monica Lewinsky (the Whore of Babble-On, forsooth!) and this Y2K fiasco (yea, verily, thy Systems will Tremble and their Bowels will Loosen before the Wrath of the Two-Thousand-Year-Old Lamb.)

I also want people to know: I like what's happening with Open Source, and I'm glad that it has such an articulate and intelligent spokesman in Eric Raymond. My expressions of doubt--both serious and tongue-in-cheek--shouldn't be taken as disrespect for the process or the person.

I wrote this for fun. So please read it that way.

I also disclaim any influence on events. No, I don't know if Mr. Villaincantelope-or-whatever-his-name-is found some kind of backhanded inspiration in this piece. It's quite enough that Eric Raymond mentioned Fred Brooks' attribution of Bazaar-like practices to Microsoft; I entirely trust Microsoft employees to take even the most mud-besmeared ball and run with it, good little team-players that they are. I just doubt that they could even find a ball under all the mud you'll see here.

With all that clarified....let's MUDWRESTLE!

The Browser Wars Continued: Children's Crusade vs. Shi'ite Jihad?

Eric Raymond is going to be a Netceleb. Marc Andreessen virtually guarantees this. The developer consensus within Netscape will propel the author of "The Cathedral and The Bazaar" almost to household-name level of public visibility. Netscape will publish its browser source, Agoric processes will save the day, Microsoft's tidal advance will be stemmed. Or will it?

I have mused on Eric Raymond's choice of "Cathedral" and "Bazaar" as juxtaposed metaphors for the two different styles. Historically, the one is Christian, the other Islamic.

Much could be made of this.

Allow me.

Religious Architecture and Social Archetypes

In Christian countries, the Cathedral was the source of ecclesiastical power, and to some extent it remains so. As a political power, however, its role has gone from nearly central to nearly non-existent. Nobody is building Cathedrals anymore, so that pork-barrel is long empty. And nothing has taken its place.

In Islamic countries, the bazaar (which is seldom, if ever, co-located with any related job-producing construction projects) remains the center of ecclesiastical power. In self-styled Islamic Republics, the bazaar is therefore the center of power, period.

Perhaps the theocratic overtones in the choice of the term "Cathedral" were Eric Raymond's allusion to what many see as the doctrinaire and sanctimonious rumblings from RMS the Pope, versus the more freewheeling and (in strict GNU terms) easily-corrupted style of Linux development. And perhaps in choosing the term "bazaar" Raymond meant only to suggest something more social and economic and mundane; something that was, architecturally, at least, more low-to-the-ground. He refers to Eric Drexler's notions of Agoric systems, saying that he thought of entitling the essay "The Cathedral and the Agora." So maybe none of this has anything to do with the taint of theocracy.

But maybe everyone should (re)read God & Golem, Inc., a book written by Norbert Wiener, the coiner of the term "cybernetics." In all things computer-related, ecclesiarchs are not far off.

If you want evidence that the bazaar style is easily corrupted and/or prone to its own problems of self-destructive, dogma-inspired holy wars, you need look no further than Microsoft itself.

Yes, Microsoft.

The Gospel According to Fred

Eric Raymond has glanced as far afield as Redmond in seeking precedents, though apparently with only a shudder and a giggle so far. "For Further Reading" leads off with this:

I quoted several bits from Frederick P. Brooks's classic The Mythical Man-Month because, in many respects, his insights have yet to be improved upon.... The new edition is wrapped up by an invaluable 20-years-later retrospective in which Brooks forthrightly admits to the few judgements in the original text which have not stood the test of time. I first read the retrospective after this paper was substantially complete, and was surprised to discover that Brooks attributes bazaar-like practices to Microsoft!

A careful reading of Brooks's 20-year retrospective turns up another, crueler, irony. One of the few points where Brooks concedes a probable error of judgment is in his original insistence that all the information about OS/360 development be made available to everyone on the project.

Information should be free. At IBM. In the mid-60s.

Well. How clueful.

But now he says he was wrong?

That Stained-Glass Window is a Module - MY Module

At some point during this famously-late project, Brooks' policy of providing all information to every programmer meant filling wide shelves in every office with the official Project Notebook, and providing updates to it constantly. This, Brooks now feels, was a disaster.

He should, he says now, have gone along with what was, at the time, a novel software engineering concept: modularization through "Information Hiding". Presumably this would have been implemented by restricting the flow of information within the development organization itself.

It surprises me that Brooks wouldn't now consider, after the fact, that promoting an ethic (or at least, an aesthetic) of modularization in an open organization would be superior to enforcing (perhaps by prohibition) laws of modularization in a closed one.

One suspects that Brooks's Cathedral dreams never died. References to medieval architecture and Christian spirituality abound in his book. To have made OS/360 a true Cathedral - why, he'd be an IBM Cardinal today! A Saint, even! (Or CEO emeritus, anyway.) It seems he now feels that, besides tolerating poor architecture, he erred in leaving out a crucial Cathedral-building management technique: tolerance of internal trade secrets.

Medieval craft guilds were notoriously secretive about their processes, and Cathedrals provided not only pork barrels during lean times, but perhaps an early version of the R&D Tax Credit. But what damn good is that subsidy if your research failures become widely known while your successes aren't safe from pilfering by other guilds?

Poor Fred. Devout IBM Christian to the end.

He missed a trick. To be Pope in a Cathedral-as-pork-barrel economy, you have to be wily, political, amoral. You have to be the broker-of-secrets, which means you have to have secrets worth having.

You don't have to be actually evil, but it sure helps!

Death to the Infidels! (Uh, You're on Our Side, Right?)

Now we have FTP sites via the Internet and patchfiles distributed on newsgroups, rather than the OS/360 Project Notebook maintained by armies of documentation clerks. But apart from that, I'm hard put to see a major difference in social organization.

Yes, this freedom-of-information on the OS/360 project was IBM-internal, but so what? There were literally thousands of people working on OS/360 at one point, quite a sizeable community. Maybe larger than the number of people who have ever contributed a line of code to GNU/Linux. Why would such a community look outside itself? After all, even outside, except for a few niches, IBM was the world of computing. Information wants to be free, I suppose, but programmers want to be paid. OS/360 programmers had everything they could have wanted. Nice salaries. A prestigious host organization. A large community of similarly-spirited co-workers. Access to all the information they needed to do their jobs (and much more.) Not to mention that there were - by virtue of Brooks's early, and now openly regretted, decision to triage "conceptual integrity" in the interests of getting everybody busy - lots of opportunities for creative (if not terribly original) work.

What a revelation. Fred Brooks, Bazaar Model developer. Way back in the mid-60s. But now, more amazingly, a lapsed Bazaar Model developer (if only by conflating information-hiding in software with information-hiding in organizations.) Who'd've thunk it?

I find it very persuasive that Microsoft is, internally, a bazaar-model development organization, a la OS/360. Knowing what we now know about how the Bazaar Model works, it helps explain Microsoft's enduring success. Not to mention all the feeping creaturism.

Comparative Religion: Refining the Taxonomy

To reiterate: In Islamic Republics, the center of powerful ideas is the community of religious scholars and ecclesiarchs, which locates itself in the center of economic power in those countries, and sets the terms of trade within those countries to a great extent. In the extreme cases (Iran), the insularity and self-righteousness runs to truly fanatical extremes.

Too bad the Mullahs aren't in Janet Reno's jurisdiction, huh?

It might, from this point onward, become necessary to make some finer distinctions in our use of the term "Bazaar Model". Prior to what may be a prematurely-characterized Netscape Enlightenment, we can clearly distinguish these two:

1. Fundamentalist Shi'ite Bazaar Model

Microsoft is the paragon now, but it seems to have independently re-evolved Project OS/360 characteristics.

In my brief, ill-fated stint at Taligent, the same mentality - and the same OS/360 diseases - seemed prevalent. Parallels abound -- new processor architecture, incoherent OS architecture (too many architects), too many projects, little outsourcing, and...IBM was involved! (Hey, maybe Taligent was all just an IBM plot to weaken Apple! Who else would know better than IBM that it could never work? But...nah. Never attribute to malice what can be perfectly well explained by stupidity.)

I suspect the same problems will recur in any corporate-captive programming community that reaches a certain size with a common focus. SGI? Sybase? Both have repeated the history of OS/360 in recent years. Oracle's NC thrust appears to have been a similarly hubristic move.

Ayatollahs? Hoo boy, do they ever have Ayatollahs. "We want 100% of the market." How will it all work out?

Easy. Just remember: "God is great."

2. Baptist Church-Social Bazaar Model

Linux, notably, but also the BSD sects. There is religiosity in the air, but - within decent Protestant limits - a tolerance for diversity.

Commercial vendors of a size modest enough to be admitted to the community (Red Hat, Debian, etc.), are well-regarded, by and large. Main Street is OK - anything bigger is suspect.

Most people still need day jobs, though. How will it all work out?

Easy. Just remember: "God will provide."

But there's a stir in the churchyard. Somebody's arriving.

With Netscape throwing its hat into the ring (or is the free software community throwing its hat into theirs?) ...well, as Eric Raymond says, "this is the Big Time."

Or is it?

Pizza! Pepsi! Stock Options! Hallelujah!

What does it mean, exactly, for the vendor of a popular piece of commercial software to show up at the Baptist Church Social, and declare itself ready for immersion in the waters of the Holy Spirit - provided it's good for business? Especially a vendor that has been "Embracing and Extending" HTML as feverishly (and stupidly) as those Shi'ite Fundamentalists in Redmond?

Yep, you guessed it. You get

3. The Marc Andreessen Reformed Church of the Almighty Dollar Church-Social Bazaar Model

All bazaar booths are allocated by Netscape.

All bazaar merchandise must be tagged "brought to you by Netscape." Special branding irons are used in the case of cotton candy.

Modest-sized vendors who don't sign license agreements with weasel-wording will be strapped into the "dunk bozo" seat, and the top intellectual-property law firms of the Valley will take turns pitching the baseballs to see who can tank them.

Most people still need day jobs, though. How will it all work out?

Easy. Just remember:

"God is cool."

Now let us pray.

August 17, 2010 | Dot Mac

My experience as a programmer has taught me a few things about writing software. Here are some things that people might find surprising about writing code:

- A programmer spends about 10-20% of his time writing code, and most programmers write about 10-12 lines of code per day that go into the final product, regardless of their skill level. Good programmers spend much of the other 80-90% thinking, researching, and experimenting to find the best design. Bad programmers spend much of that time debugging code by randomly making changes and seeing if they work.

"A great lathe operator commands several times the wage of an average lathe operator, but a great writer of software code is worth 10,000 times the price of an average software writer." (Bill Gates)

- A good programmer is ten times more productive than an average programmer. A great programmer is 20-100 times more productive than the average. This is not an exaggeration: studies since the 1960s have consistently shown this. A bad programmer is not just unproductive: he will not only fail to get any work done, but will create a lot of work and headaches for others to fix.

- Great programmers spend very little of their time writing code, at least code that ends up in the final product. Programmers who spend much of their time writing code are too lazy, too ignorant, or too arrogant to find existing solutions to old problems. Great programmers are masters at recognizing and reusing common patterns. Good programmers are not afraid to refactor (rewrite) their code constantly to reach the ideal design. Bad programmers write code which lacks conceptual integrity, non-redundancy, hierarchy, and patterns, and so is very difficult to refactor. It's easier to throw away bad code and start over than to change it.

- Software obeys the laws of entropy, like everything else. Continuous change leads to software rot, which erodes the conceptual integrity of the original design. Software rot is unavoidable, but programmers who fail to take conceptual integrity into consideration create software that rots so fast that it becomes worthless before it is even completed. Entropic failure of conceptual integrity is probably the most common reason for software project failure. (The second most common reason is delivering something other than what the customer wanted.) Software rot slows down progress exponentially, so many projects face exploding timelines and budgets before they are killed.

- A 2004 study found that most software projects (51%) fail in some critical aspect, and 15% fail totally. This is an improvement over 1994, when 31% failed totally.

- Although most software is made by teams, it is not a democratic activity. Usually, just one person is responsible for the design, and the rest of the team fills in the details.

- Programming is hard work. It's an intense mental activity. Good programmers think about their work 24/7. They write their most important code in the shower and in their dreams. Because the most important work is done away from a keyboard, software projects cannot be accelerated by spending more time in the office or adding more people to a project.

Edward D. Weinberger:

Much of the content of this post was originally published in the classic THE MYTHICAL MAN-MONTH by Frederick Brooks. He was the guy who headed the IBM project to build the first modern operating system, OS/360, on IBM mainframes in the 1960s, so he clearly knew his stuff. He is convinced of the vast difference between the best and the average.

Believe it or not, the reason why managers make more than programmers is well captured by the comic strip DILBERT. Though Dilbert is technically adept, he clearly does not understand the business world. He therefore needs the "adult supervision" of the pointy-haired boss. Admittedly, the boss is an idiot, with absolutely NO technical savvy; however, he does understand the business world, including the importance of marketing.

And one other thing. Programmers are paid for more than productivity, which is why productive programmers are not necessarily paid more than others. The technology is changing so fast that the guy who may be a mediocre user of a hot technology gets paid more than the best COBOL programmer in the world, simply because nobody cares much about COBOL any more.

Maintenance Man:

A great developer may be worth a lot more than an average one, and might also be 100 times as productive. Then why, again, is the great one getting paid the same salary as the average one?

jambox:

@MaintenanceMan Too many companies allow their software products to be managed by non-technical managers. They simply do not know who is good and who isn't! They also don't know good software from bad and evaluate performance based on speed of delivery most of all. They're also acutely vulnerable to bafflegibber.

Lou:

Thank you; good article. I recently worked (past tense) at a place that thought you could in fact speed software delivery by adding extra programmers and extra hours per programmer. Programming is not manual labor. Physical bodies can continue laboring long after the mind shuts off whereas in programming, when the mind shuts off, you may code so ineffectively that you make negative progress. Bless the software managers that understand the pacing and rhythm of development.

@Maintenance Man, that's a good question. I think it's partly due to a lack of understanding on the part of managers and companies. As soon as it's easy to quantify the increased value of a good programmer vs a bad one, the salary gap will increase.

Paul W. Homer:

Nice. Although I'm still a little unsure of the first two points. In the short run, I think a great programmer is not all that much more productive than an average one, but I definitely believe that if you factor in time and the amount of code that actually stays alive the difference is huge. Also, I could agree with the 10-12 lines per day, if you are talking averages. I know a lot of programmers who (without cutting and pasting) can produce thousands of lines in a very intense week, but who then slack shortly afterwards.

Jeff Dege:

I keep seeing that "good programmers are X times more productive than bad programmers" meme, again and again, without a description of the shape of the curve. This leaves people with misconceptions.

Productivity does not follow a normal distribution; it follows a Rayleigh distribution. If good programmers are ten times better than bad programmers, the distribution will be such that good programmers are twice as good as average programmers, and average programmers are five times as good as bad programmers.

The curve is skewed, and the median is above the mean. (Meaning that most programmers are better than average, as odd as that sounds.)
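For reference, a Rayleigh distribution with scale parameter σ has closed-form mode σ, median σ√(2 ln 2) and mean σ√(π/2). The sketch below simply evaluates them (the function names are illustrative and σ = 1 is an arbitrary choice, since the ratios do not depend on it). For this particular distribution the ordering is mode < median < mean, so the median sits slightly below the mean, at roughly 94% of it.

```python
import math


def rayleigh_mode(sigma):
    """Peak of the Rayleigh density."""
    return sigma


def rayleigh_median(sigma):
    """Value with half the probability mass on each side."""
    return sigma * math.sqrt(2 * math.log(2))


def rayleigh_mean(sigma):
    """Expected value."""
    return sigma * math.sqrt(math.pi / 2)


sigma = 1.0  # arbitrary scale
print(f"{rayleigh_median(sigma) / rayleigh_mean(sigma):.3f}")  # prints 0.939
```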

J:

I love reading something like this and then hearing people talk about how it just proves how great they are at programming. No one ever seems to read it and say, "Hmm, I wonder if I'm the guy who is a tenth as productive as the good programmers?" On another note, although I agree with some of the generalizations, I think it is dangerous to put programmers in three categories: bad, good, and great. There is a lot of wiggle room where people don't fit nicely in one of these categories. Some people are also good at some things and not at others. There are also people who have all the skills but just don't have the experience yet. Everyone needs to make mistakes and learn from them, and that just takes some time.

Rosstafarian:

Except that average programmers are not just a multiple better than bad programmers. Somewhere just south of average, poor programmers contribute less than the increased communication overhead needed to include them on the team. A little below that, you have really poor programmers whose typical coding change makes the system worse and either requires time by alert developers to fix the problems they create or dramatically increases the risk of failure of the effort if it's not detected in time.

In my experience, the best programmers routinely achieve goals that average programmers don't understand even when the result is later explained to them. In terms of pure productivity, the difference is something like 10-15x between the best and the simply good. I firmly believe that there is a transition to negative value that occurs well into "average" territory but which is only apparent to a few enlightened members of management.

Gabe da Silveira:

You're making the same mistake, though. It is some combination of talent and experience that makes one great at anything. There's absolutely no reason to believe that great programmers were somehow better than average from the outset; they could have had breakthroughs throughout their journey. Likewise, the most promising candidate could get lazy in a cushy job or lose interest in advancing their skills.

Ulf Wiger:

@Alfred: In my experience, software companies (especially larger ones) need many different kinds of programmers. The trick is to find your niche and figure out how you can best contribute.

The software industry as a whole needs inventors, finishers, motivators, maintainers, and also project managers and line managers who are (or at least have been) skilled enough at programming. Some people love working for years maintaining and improving a particular product; others wouldn't be caught dead doing that, but want to innovate, prove a concept, and then move on.

The trick for every company is to find the right mix, and dependable programmers who are Good Enough are extremely important. I have plenty of good war stories about brilliant programmers, but they are best told over beer.

Matt J.:

When I see that much enthusiastic agreement, it raises my suspicions. The majority is rarely right.

And sure enough, when I look more closely at this, I see lots of problems covered up with sententious authority. I am glad to see that doctor doom caught one of them, but there are more.

I will focus on only one: the point about it not being democratic. This is true, BUT if you let only one person do all the design, you breed frustration in the rest of the team, and what do you do if that one designer is hit by the proverbial bus? It is better to admit that the design process cannot be entirely democratic, but let the one designer bounce his ideas off other members of the team. This solves both problems: it gives them a hand in the design, and it distributes knowledge of the design among several people, so that if he is hit by a bus, he can be replaced with much less loss.

For the same reason, it is important that that one designer know how to share with the team. Pick someone who is bright, but autocratic, and you will ruin the team and the project.

Weinberger had an interesting correction to the article too, but he overestimates the PHB's command of the business world. The real problem of modern day business is that the PHB who is technically ignorant is nearly as ignorant of the business world, too! That is WHY Scott Adams has the recurring line about 'manager' really coming from an ancient word for "mastodon dung". That is WHY he reminds us of the managers so dumb, they didn't even know how to use voicemail. He didn't make that example up, either. It comes from real life.

Nor is it really that new. Thorstein Veblen explained very well why such gross incompetence is tolerated in the overpaid management class in his "The Theory of the Leisure Class". If you really want to understand why managers are so destructive, and why they will never admit that Fred Brooks was right, read this book.

The book introduces a whole slew of concepts at the heart of our understanding of how we build software:

- How do disastrously late projects get that way? "One day at a time." (Brooks applied this phrase to the software field long before it became a 12-step self-help mantra.) Most slippage is the offspring of "termites, not tornadoes."

- Sequential constraints: Some projects take an irreducible amount of time because one part of the task needs to be finished before the next can begin; or, as Brooks puts it, "The bearing of a child takes nine months, no matter how many women are assigned."

- Coding isn't what takes the most time: Brooks's rule of thumb is that actually writing the code takes 1/6 of a project's time, with planning taking a third and the remaining half devoted to testing and debugging.

- Programmers vary wildly in individual productivity: wildly, as in a highly productive programmer may well produce ten times as much useful work, however you choose to measure it, as a colleague.

- The goal of "conceptual integrity" or unity of design: The best programs and software systems exhibit a sense of consistency and elegance, as if they are shaped by a single creative mind, even when they are in fact the product of many. The fiendish problem is less how to create that sense in the first place than how to preserve it against all the other pressures that come to bear throughout the process of writing software.

- The "second system effect": Brooks noted that an engineer or team will often make all the compromises necessary to ship their first product, then founder on the second. Throughout project number one, they stored up pet features and extras that they couldn't squeeze into the original product, and told themselves, "We'll do it right next time." This "second system" is "the most dangerous a man ever designs."
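The schedule rule of thumb in the list above is simple arithmetic. A minimal Python sketch applying the review's summary of it (1/3 planning, 1/6 coding, 1/2 testing and debugging) to a hypothetical 12-month project; the project length is an assumption chosen only for illustration:

```python
from fractions import Fraction

# Brooks's rule of thumb as summarized in the review above.
SCHEDULE_SHARES = {
    "planning": Fraction(1, 3),
    "coding": Fraction(1, 6),
    "testing and debugging": Fraction(1, 2),
}

# The shares must account for the whole schedule.
assert sum(SCHEDULE_SHARES.values()) == 1

def allocate(total_months: int) -> dict:
    """Split a schedule of total_months into phases using the shares above."""
    return {phase: share * total_months for phase, share in SCHEDULE_SHARES.items()}

plan = allocate(12)  # hypothetical 12-month project
for phase, months in plan.items():
    print(f"{phase}: {months} months")
# planning: 4 months
# coding: 2 months
# testing and debugging: 6 months
```

Coding gets only two of the twelve months. In the book itself, Brooks splits the testing half further: a quarter for component test and early system test, and a quarter for system test with all components in hand.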

Pretty much since I started working at ThoughtWorks 2 1/2 years ago, I've been told that this is a book I have to read, and I've finally got around to doing so. Maybe it's not that surprising, but my overriding thought about the book is that just about every mistake we make in software development today is covered in it!

What did I learn?

- The title of the book and its second chapter refer to a situation that surely everyone who has ever worked on a software development project knows: if a project is late, adding new people to it will make it even later. This is because a big part of software development is communication, and adding people makes that communication more complicated than it was before, so it takes longer to get things done. My colleague Francisco has a nice post describing the ways that adding people can slow down a development team. The idea that a baby can't be produced any quicker by having nine women rather than just one is a particularly common metaphor used to explain this.

- Incompleteness and inconsistencies of ideas only become clear during implementation, which pretty much puts a dagger into the idea that we can define everything up front and then simply code it. This is certainly the area that the agile and lean approaches aim to change: the earlier we can try out different ideas, using approaches such as set-based concurrent engineering, the more quickly we can end up with a useful solution.

- An interesting idea, creating a surgical team in which a few very experienced people do the majority of the coding and are assisted by the other members of the team, is suggested as a successful route to delivering software. It sounds quite different to the teams I have worked on, where everyone on the team is involved, although the objectives behind it seem valid: reducing the communication points and ensuring the conceptual integrity of the solution. Uncle Bob recently wrote about this, describing these teams as master craftsman teams, but it sounds as if this would require quite a radical shift in the recruiting strategies of organisations. Dave Hoover also has an interesting post on this subject, though he takes the angle of building apprentices on teams like this.

- This seems closely linked to another idea about team composition, described later in the book: a team needs both a technical director and a producer. The technical director sounds quite similar to Toyota's idea of the Chief Engineer and would be in charge technically, while the producer (Iteration Manager?) is in charge of everything else. The underlying idea here is that we don't have just one person in charge of a team; there are two distinct and important roles.

- Brooks says the most important aspect of the design of a system is to ensure its conceptual integrity, i.e. a consistent set of design ideas. To achieve this, Brooks suggests the need for a system architect. While I agree with this idea, I think it is more a role, and maybe one that can be filled by the Tech Lead on a project. The Poppendiecks also talk of the need for conceptual integrity in Lean Software Development. The point here is to create a system that is easy to use, with a high ratio of function to conceptual complexity. I am reminded of a Dan North quote at this stage: "We're done not when there's nothing more to add, but when there's nothing more to take away"

- The productivity increases gained by using high-level languages are mentioned, the underlying idea being that these languages let us avoid an entire level of exposure to error. I think this makes sense; as an example, I think the introduction of functional collection parameters in C# 3.0 will reduce the time spent debugging loop constructs, since we no longer have to write them so frequently.

- When talking about object oriented programming Brooks speaks of the need to design objects which describe the concepts of the client.

If we design large-grained classes that address concepts our clients are already working with, they can understand and question the design as it grows, and they can cooperate in the design of test cases.

In other words, Domain Driven Design! Reading this part of the book very much reminded me of Phil Wills's QCon presentation, where he spoke of the way that the business and software development teams at the Guardian were able to collaborate to drive the design of the domain model for their new website.

- The idea of only performing system debugging when each individual component actually works is something that should be obvious but is often not followed. If we know a component doesn't work on its own, then we can guarantee it is not going to work when we try to integrate it with other components, so the exercise seems slightly pointless to me. Common-sense advice, I think!

- Speaking of code reuse, Brooks points out that what matters is the perceived cost of finding a component to reuse. This ties in nicely with an idea from Dan Bergh Johnsson's QCon presentation:

Your API has 10-30 seconds to direct a programmer to the right spot before they implement it [the functionality] themselves

- Brooks talks of the need for project documentation; he uses a project workbook for this, and I think the modern-day equivalent would be the project wiki. The idea of creating self-documenting programs to help minimise the documentation that needs to be written is also covered. How we name concepts in our code is especially important in this area.

- Later in the book, Brooks suggests progressively refining the system by growing it rather than building it. He describes the limitations of the waterfall model and pretty much describes the agile/lean approaches: building frequently, getting it working end to end, rapid prototyping and so on.
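The point in the list above about functional collection parameters in C# 3.0 can be illustrated with a Python analogue (this is not C#): the same total computed once with a hand-managed index loop and once as a filter-and-sum over the collection. The `Invoice` type and its fields are hypothetical, chosen only for the example.

```python
from dataclasses import dataclass

@dataclass
class Invoice:
    # Hypothetical domain type used only for this illustration.
    amount: int
    overdue: bool

def overdue_total_loop(invoices: list) -> int:
    """Explicit loop: the index, bounds and accumulator are all managed by hand."""
    total = 0
    for i in range(len(invoices)):
        if invoices[i].overdue:
            total += invoices[i].amount
    return total

def overdue_total_functional(invoices: list) -> int:
    """Same computation as a filter plus a sum; no index or counter to get wrong."""
    return sum(inv.amount for inv in invoices if inv.overdue)

invoices = [Invoice(100, True), Invoice(250, False), Invoice(40, True)]
print(overdue_total_loop(invoices), overdue_total_functional(invoices))  # 140 140
```

Both functions return the same result; the second simply leaves no loop bookkeeping in which an off-by-one or accumulator bug could hide.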

In Summary

I really enjoyed reading this book and seeing how many of the ideas in more modern methodologies were already known in the 1980s and aren't, in essence, new ideas.

I'd certainly recommend this book.

Dec 14, 2006 (InfoWorld) Joel Spolsky is one of our most celebrated pundits on the practice of software development, and he's full of terrific insight. In a recent blog post, he decries the fallacy of "Lego programming" -- the all-too-common assumption that sophisticated new tools will make writing applications as easy as snapping together children's toys. It simply isn't so, he says -- despite the fact that people have been claiming it for decades -- because the most important work in software development happens before a single line of code is written.

By way of support, Spolsky reminds us of a quote from the most celebrated pundit of an earlier generation of developers. In his 1987 essay "No Silver Bullet," Frederick P. Brooks wrote, "The essence of a software entity is a construct of interlocking concepts ... I believe the hard part of building software to be the specification, design, and testing of this conceptual construct, not the labor of representing it and testing the fidelity of the representation ... If this is true, building software will always be hard. There is inherently no silver bullet."

As Spolsky points out, in the 20 years since Brooks wrote "No Silver Bullet," countless products have reached the market heralded as the silver bullet for effortless software development. Similarly, in the 30 years since Brooks published "The Mythical Man-Month" -- in which, among other things, he debunks the fallacy that if one programmer can do a job in ten months, ten programmers can do the same job in one month -- product managers have continued to buy into various methodologies and tricks that claim to make running software projects as easy as stacking Lego bricks.
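In the book, Brooks explains part of why trading men for months fails: if each part of the task must be separately coordinated with each other part, the number of intercommunication paths among n workers grows as n(n - 1)/2. A toy Python model of that effect follows. It assumes 10 person-months of otherwise perfectly partitionable work and a hypothetical cost of 0.5 person-months per communication path; both numbers are illustrative assumptions, not figures from Brooks.

```python
def communication_paths(n: int) -> int:
    """Pairwise communication paths among n people: n(n - 1) / 2."""
    return n * (n - 1) // 2

def naive_months(work: float, people: int) -> float:
    """The man-month fallacy: schedule = work / people."""
    return work / people

def modeled_months(work: float, people: int, cost_per_path: float) -> float:
    """Toy model: total effort is the work plus a fixed cost for every
    communication path, and that effort is spread evenly across the team."""
    effort = work + cost_per_path * communication_paths(people)
    return effort / people

WORK = 10.0          # person-months of partitionable work (assumption)
COST_PER_PATH = 0.5  # person-months per communication path (assumption)

for n in (1, 2, 5, 10):
    print(f"{n:2d} people: naive {naive_months(WORK, n):5.2f} months, "
          f"modeled {modeled_months(WORK, n, COST_PER_PATH):5.2f} months")
```

Under these made-up costs, ten programmers finish in 3.25 months rather than one, and more slowly than five programmers (3.00 months). The crossover point depends entirely on the assumed per-path cost, and the model ignores the sequential constraints and training time that Brooks also discusses.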

Don't you believe it. If, as Brooks wrote, the hard part of software development is the initial design, then no amount of radical workflows or agile development methods will get a struggling project out the door, any more than the latest GUI rapid-development toolkit will.