
There are many different types of logs produced by modern OSes and applications. That's why log analyzers are usually pretty specialized (e.g., web log analyzers, syslog analyzers, etc.).


The main categories of viewers are as follows:

Dr. Nikolai Bezroukov


Oct 02, 2018 | opensource.com

Quiet log noise with Python and machine learning

Logreduce saves debugging time by picking out anomalies from mountains of log data.

28 Sep 2018 | Tristan de Cacqueray (Red Hat)


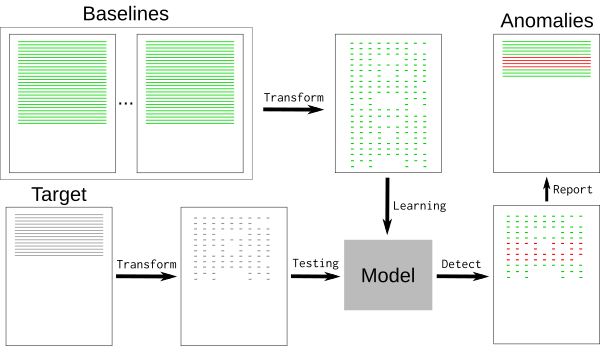

The Logreduce machine learning model is trained using previous successful job runs to extract anomalies from failed runs' logs. This principle can also be applied to other use cases, for example, extracting anomalies from Journald or other system-wide regular log files.

Using machine learning to reduce noise

A typical log file contains many nominal events ("baselines") along with a few exceptions that are relevant to the developer. Baselines may contain random elements such as timestamps or unique identifiers that are difficult to detect and remove. To remove the baseline events, we can use a k-nearest neighbors pattern recognition algorithm (k-NN).


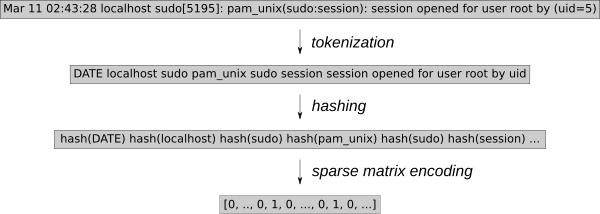

Log events must be converted to numeric values for k-NN regression. Using the generic feature extraction tool HashingVectorizer enables the process to be applied to any type of log. It hashes each word and encodes each event in a sparse matrix. To further reduce the search space, tokenization removes known random words, such as dates or IP addresses.


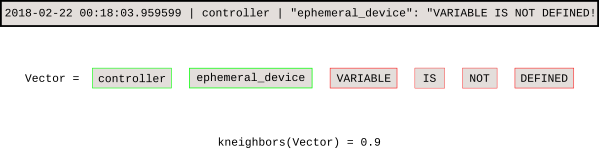

Once the model is trained, the k-NN search tells us the distance of each new event from the baseline.
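To make the feature-extraction and k-NN steps concrete, here is a minimal, dependency-free Python sketch. It is a stand-in for scikit-learn's HashingVectorizer plus a brute-force nearest-neighbor search, not Logreduce's code: the placeholder regexes, the vector width `N_FEATURES`, the MD5-based hashing and the cosine metric are all assumptions chosen for illustration.

```python
import hashlib
import math
import re

N_FEATURES = 2 ** 20  # assumed vector width, not Logreduce's setting

# Assumed "known random words": IPv4 addresses, hex identifiers, plain numbers.
RANDOM_WORDS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "IPADDR"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "HEXID"),
    (re.compile(r"\b\d+\b"), "NUMBER"),
]


def tokenize(line):
    """Replace volatile words with fixed placeholders, then split into words."""
    for pattern, placeholder in RANDOM_WORDS:
        line = pattern.sub(placeholder, line)
    return re.findall(r"\w+", line.lower())


def hash_vector(line):
    """Encode a log event as a sparse {feature_index: count} vector (hashing trick)."""
    vector = {}
    for token in tokenize(line):
        digest = hashlib.md5(token.encode()).digest()  # stable across runs
        index = int.from_bytes(digest[:4], "little") % N_FEATURES
        vector[index] = vector.get(index, 0) + 1
    return vector


def cosine_distance(a, b):
    dot = sum(count * b.get(index, 0) for index, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values()) * sum(c * c for c in b.values()))
    return 1.0 if norm == 0 else 1.0 - dot / norm


def knn_distance(baseline, line, k=1):
    """Mean cosine distance from `line` to its k nearest baseline events (brute force)."""
    if not baseline:
        return 1.0
    vector = hash_vector(line)
    nearest = sorted(cosine_distance(vector, b) for b in baseline)[:k]
    return sum(nearest) / len(nearest)


baseline = [hash_vector(event) for event in [
    "session opened for user root from 10.0.0.1",
    "session closed for user root from 10.0.0.2",
]]
print(knn_distance(baseline, "session opened for user root from 10.0.0.9"))  # ~0.0
print(knn_distance(baseline, "kernel panic: not syncing"))  # close to 1.0
```

Because the IP address is replaced before hashing, the first test line lands on top of a baseline event, while the unrelated kernel message is far from everything in the baseline.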


This Jupyter notebook demonstrates the process and graphs the sparse matrix vectors.

Introducing Logreduce

The Logreduce Python software transparently implements this process. Logreduce's initial goal was to assist with Zuul CI job failure analyses using the build database, and it is now integrated into the Software Factory development forge's job logs process.

At its simplest, Logreduce compares files or directories and removes lines that are similar. Logreduce builds a model for each source file and outputs any of the target's lines whose distances are above a defined threshold, using the following format: distance | filename:line-number: line-content.
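The diff behavior can be approximated in a few lines. This hypothetical sketch keeps the output format described above but swaps in a simpler technique: Jaccard distance between normalized word sets, with a nearest-neighbor search over the deduplicated baseline. The `THRESHOLD` value and the normalization rule are assumptions, not Logreduce defaults.

```python
import re

THRESHOLD = 0.2  # hypothetical cut-off, not a Logreduce default


def normalize(line):
    """Lower-case word set with numbers, dotted IPs and hex ids collapsed to 'N'."""
    line = re.sub(r"\b(?:0x[0-9a-f]+|\d+(?:\.\d+)*)\b", "N", line.lower())
    return frozenset(re.findall(r"\w+", line))


def jaccard_distance(a, b):
    union = a | b
    return 0.0 if not union else 1.0 - len(a & b) / len(union)


def diff(baseline_lines, target_lines, filename, threshold=THRESHOLD):
    """Yield 'distance | filename:line-number: line-content' for outlying lines."""
    model = {normalize(line) for line in baseline_lines}  # deduplicated baseline
    for number, line in enumerate(target_lines, start=1):
        words = normalize(line)
        distance = min((jaccard_distance(words, known) for known in model), default=1.0)
        if distance > threshold:
            yield f"{distance:.3f} | {filename}:{number}: {line}"


baseline = ["user root logged in from 10.0.0.1", "cron job 42 finished"]
target = ["user root logged in from 10.0.0.7", "disk /dev/sda1 failed", "cron job 43 finished"]
for report_line in diff(baseline, target, "audit.log"):
    print(report_line)  # 1.000 | audit.log:2: disk /dev/sda1 failed
```

The two lines that differ from the baseline only in an address or a counter are dropped; only the genuinely new event is reported.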

$ logreduce diff /var/log/audit/audit.log.1 /var/log/audit/audit.log
INFO logreduce.Classifier - Training took 21.982s at 0.364MB/s (1.314kl/s) (8.000 MB - 28.884 kilo-lines)
0.244 | audit.log:19963: type=USER_AUTH acct="root" exe="/usr/bin/su" hostname=managesf.sftests.com
INFO logreduce.Classifier - Testing took 18.297s at 0.306MB/s (1.094kl/s) (5.607 MB - 20.015 kilo-lines)
99.99% reduction (from 20015 lines to 1)

A more advanced use of Logreduce is to train a model offline so that it can be reused. Many variants of the baselines can be used to fit the k-NN search tree.

$ logreduce dir-train audit.clf /var/log/audit/audit.log.*
INFO logreduce.Classifier - Training took 80.883s at 0.396MB/s (1.397kl/s) (32.001 MB - 112.977 kilo-lines)
DEBUG logreduce.Classifier - audit.clf: written
$ logreduce dir-run audit.clf /var/log/audit/audit.log

Logreduce also implements interfaces to discover baselines for Journald time ranges (days/weeks/months) and Zuul CI job build histories. It can also generate HTML reports that group anomalies found in multiple files in a simple interface.

Managing baselines

The key to using k-NN regression for anomaly detection is to have a database of known good baselines, which the model uses to detect lines that deviate too far. This method relies on the baselines containing all nominal events, as anything that isn't found in the baseline will be reported as anomalous.

CI jobs are great targets for k-NN regression because the job outputs are often deterministic and previous runs can be automatically used as baselines. Logreduce features Zuul job roles that can be used as part of a failed job post task in order to issue a concise report (instead of the full job's logs). This principle can be applied to other cases, as long as baselines can be constructed in advance. For example, a nominal system's SOS report can be used to find issues in a defective deployment.

Anomaly classification service

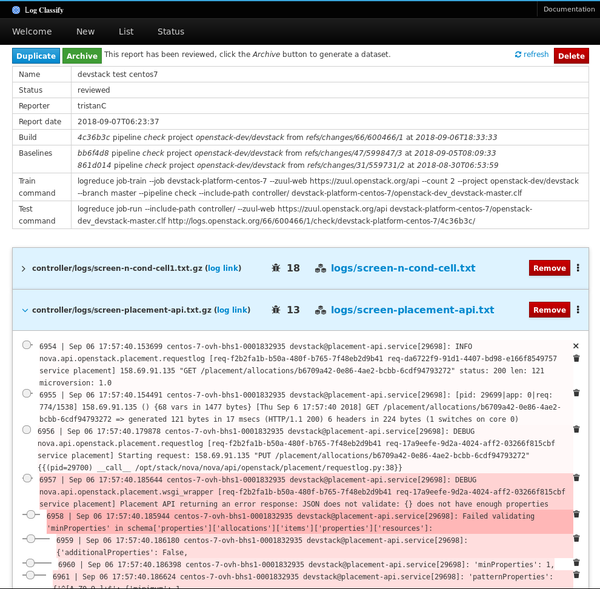

The next version of Logreduce introduces a server mode to offload log processing to an external service where reports can be further analyzed. It also supports importing existing reports and requests to analyze a Zuul build. The services run analyses asynchronously and feature a web interface to adjust scores and remove false positives.


Reviewed reports can be archived as a standalone dataset with the target log files and the scores for anomalous lines recorded in a flat JSON file.

Project roadmap

Logreduce is already being used effectively, but there are many opportunities for improving the tool. Plans for the future include:

- Curating many annotated anomalies found in log files and producing a public domain dataset to enable further research. Anomaly detection in log files is a challenging topic, and having a common dataset to test new models would help identify new solutions.

- Reusing the annotated anomalies with the model to refine the distances reported. For example, when users mark lines as false positives by setting their distance to zero, the model could reduce the score of those lines in future reports.

- Fingerprinting archived anomalies to detect when a new report contains an already known anomaly. Thus, instead of reporting the anomaly's content, the service could notify the user that the job hit a known issue. When the issue is fixed, the service could automatically restart the job.

- Supporting more baseline discovery interfaces for targets such as SOS reports, Jenkins builds, Travis CI, and more.

If you are interested in getting involved in this project, please contact us on the #log-classify Freenode IRC channel. Feedback is always appreciated!

Tristan de Cacqueray will present "Reduce your log noise using machine learning" at the OpenStack Summit, November 13-15 in Berlin.

About the author

Tristan de Cacqueray - OpenStack Vulnerability Management Team (VMT) member working at Red Hat.

Oct 31, 2017 | www.tecmint.com

How can I see the content of a log file in real time in Linux? There are many utilities that can output the content of a file while the file is changing or being continuously updated. One of the best known and most heavily used utilities for displaying file content in real time on Linux is the tail command.

1. tail Command – Monitor Logs in Real Time

As said, the tail command is the most common solution for displaying a log file in real time. However, there are two versions of the command, as illustrated in the examples below.

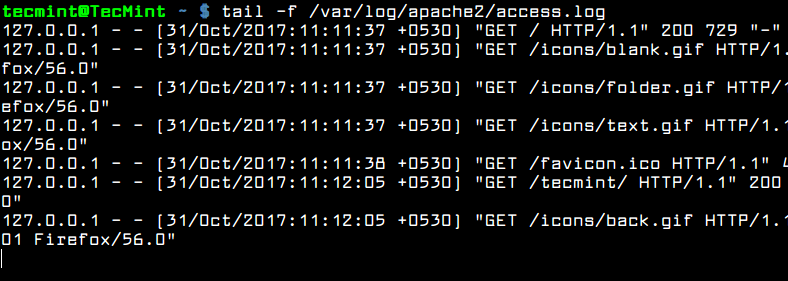

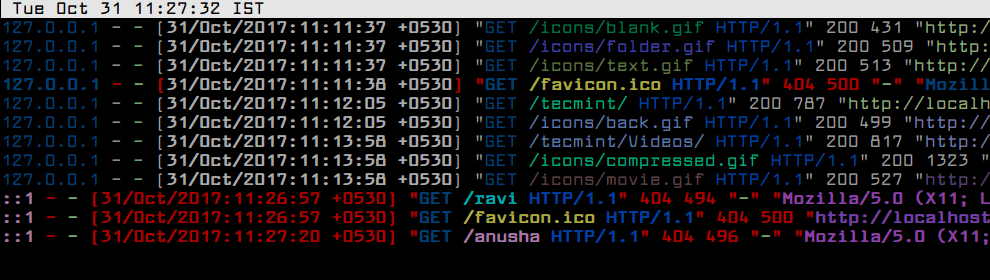

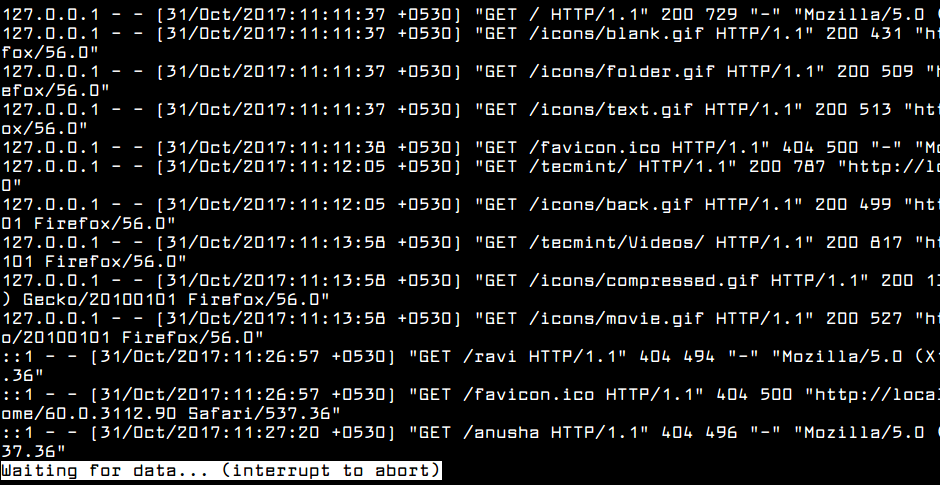

In the first example, the tail command needs the -f argument to follow the content of a file.

$ sudo tail -f /var/log/apache2/access.log

Monitor Apache Logs in Real Time

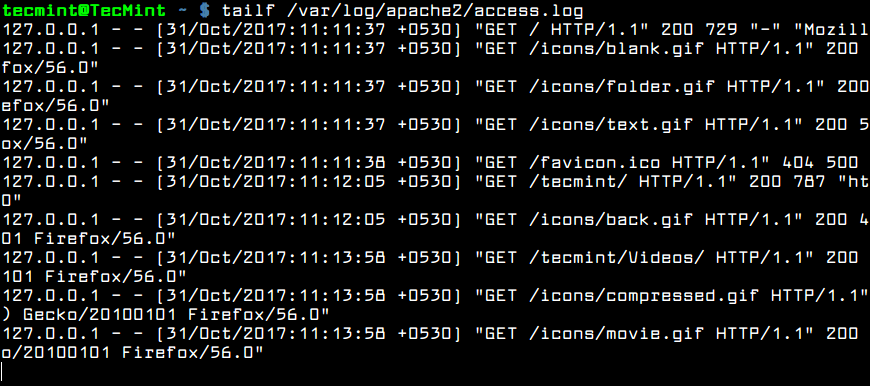

The second version is actually a command of its own: tailf. You won't need to use the -f switch, because the command has the -f behavior built in.

$ sudo tailf /var/log/apache2/access.log

Real Time Apache Logs Monitoring

Usually, log files on a Linux server are rotated frequently by the logrotate utility. To watch log files that get rotated on a daily basis, you can use the -F flag of the tail command.

The

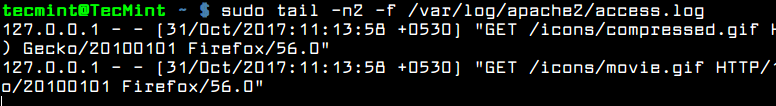

tail -Fwill keep track if new log file being created and will start following the new file instead of the old file.$ sudo tail -F /var/log/apache2/access.logHowever, by default, tail command will display the last 10 lines of a file. For instance, if you want to watch in real time only the last two lines of the log file, use the

-nfile combined with the-fflag, as shown in the below example.$ sudo tail -n2 -f /var/log/apache2/access.log
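A rough Python model of what `-n N` and `-f` do may help clarify the flags. It is a sketch under stated assumptions, not how GNU tail is implemented: it keeps the last `n` lines in a bounded deque, then polls for appended lines. The `idle_limit` parameter is hypothetical, added only so a demo can terminate; real `tail -f` runs until interrupted, and this sketch does not reopen rotated files the way `tail -F` does.

```python
import os
import tempfile
import time
from collections import deque


def tail(path, n=10, follow=False, poll_interval=0.5, idle_limit=None):
    """Yield the last n lines of path; with follow=True, keep yielding new lines.

    idle_limit is a hypothetical knob: stop after that many consecutive empty
    polls (None means poll forever, like `tail -f`).
    """
    with open(path, encoding="utf-8", errors="replace") as f:
        yield from deque(f, maxlen=n)  # the file position is now at end of file
        idle = 0
        while follow and (idle_limit is None or idle < idle_limit):
            line = f.readline()
            if line:
                idle = 0
                yield line
            else:
                idle += 1
                time.sleep(poll_interval)


# Demo: a 20-line file; ask for the last two lines, as `tail -n2` would.
with tempfile.NamedTemporaryFile("w", suffix=".log", delete=False) as tmp:
    tmp.writelines(f"line {i}\n" for i in range(1, 21))
last_two = list(tail(tmp.name, n=2))
followed = list(tail(tmp.name, n=2, follow=True, poll_interval=0, idle_limit=1))
default_count = len(list(tail(tmp.name)))
os.remove(tmp.name)
print(last_two)  # ['line 19\n', 'line 20\n']
```

The deque with `maxlen=n` discards older lines as it reads, so memory stays proportional to `n` rather than to the file size.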

Watch Last Two Lines of Logs

2. Multitail Command – Monitor Multiple Log Files in Real Time

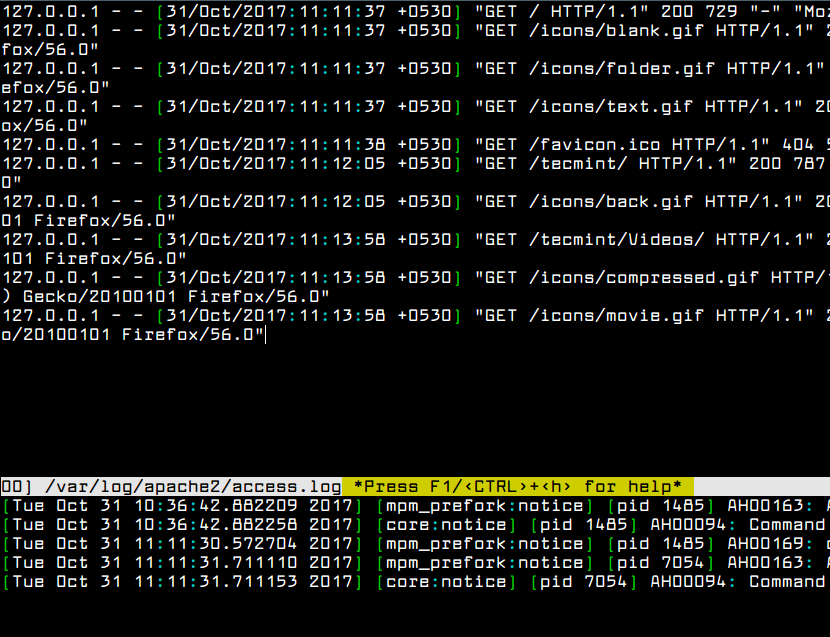

Another interesting command for displaying log files in real time is multitail. As the name implies, multitail can monitor and keep track of multiple files in real time. Multitail also lets you navigate back and forth in the monitored file.

To install the multitail utility on Debian- and Red Hat-based systems, issue the commands below.

$ sudo apt install multitail [On Debian & Ubuntu]
$ sudo yum install multitail [On RedHat & CentOS]
$ sudo dnf install multitail [On Fedora 22+ version]

To display the output of two log files simultaneously, execute the command as shown in the example below.

$ sudo multitail /var/log/apache2/access.log /var/log/apache2/error.log

Multitail Monitor Logs

3. lnav Command – Monitor Multiple Log Files in Real Time

Another interesting command, similar to multitail, is lnav. The lnav utility can also watch and follow multiple files and display their content in real time.

To install the lnav utility on Debian- and Red Hat-based Linux distributions, issue the commands below.

$ sudo apt install lnav [On Debian & Ubuntu]
$ sudo yum install lnav [On RedHat & CentOS]
$ sudo dnf install lnav [On Fedora 22+ version]

Watch the content of two log files simultaneously by issuing the command as shown in the example below.

$ sudo lnav /var/log/apache2/access.log /var/log/apache2/error.log

lnav – Real Time Logs Monitoring

4. less Command – Display Real Time Output of Log Files

Finally, you can display the live output of a file with the less command by typing Shift+F. As with the tail utility, pressing Shift+F in a file opened in less will start following the end of the file. Alternatively, you can start less with the +F flag to enter live watching of the file directly.

$ sudo less +F /var/log/apache2/access.log

Watch Logs Using Less Command

That's it!

Mar 14, 2012 | sanctum.geek.nz

If you need to search a set of log files in /var/log, some of which have been compressed with gzip as part of the logrotate procedure, it can be a pain to decompress them to check them for a specific string, particularly where you want to include the current log, which isn't compressed:

$ gzip -d log.1.gz log.2.gz log.3.gz
$ grep pattern log log.1 log.2 log.3

It turns out to be a little more elegant to use the -c switch for gzip, which decompresses the files to standard output without touching the files on disk, and to concatenate any uncompressed files you also want to search with cat:

$ gzip -dc log.*.gz | cat - log | grep pattern

This and similar operations with compressed files are common enough problems that short scripts in /bin on GNU/Linux systems exist, providing analogues to existing tools that can work with files in both a compressed and uncompressed state. In this case, the zgrep tool is of the most use to us:

$ zgrep pattern log*

Note that this search will also include the uncompressed log file and search it normally. The tools are for possibly compressed files, which makes them particularly well suited to searching and manipulating logs in mixed compression states. It's worth noting that most of these are actually reasonably simple shell scripts.

The complete list of tools, most of which do the same thing as their z-less equivalents, can be gleaned with a quick whatis call:

$ pwd
/bin
$ whatis z*
zcat (1) - compress or expand files
zcmp (1) - compare compressed files
zdiff (1) - compare compressed files
zegrep (1) - search possibly compressed files for a regular expression
zfgrep (1) - search possibly compressed files for a regular expression
zforce (1) - force a '.gz' extension on all gzip files
zgrep (1) - search possibly compressed files for a regular expression
zless (1) - file perusal filter for crt viewing of compressed text
zmore (1) - file perusal filter for crt viewing of compressed text
znew (1) - recompress .Z files to .gz files
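The same idea can be sketched in Python for readers who want to search mixed-compression logs from a script. This is not how zgrep works internally (zgrep is a shell wrapper around gzip and grep); the sketch simply checks each file for the two gzip magic bytes and decompresses on the fly. The file names in the demo are illustrative.

```python
import gzip
import re
import tempfile
from pathlib import Path

GZIP_MAGIC = b"\x1f\x8b"  # every gzip stream starts with these two bytes


def open_maybe_compressed(path):
    """Open path for text reading, decompressing on the fly if it holds gzip data."""
    with open(path, "rb") as f:
        is_gzip = f.read(2) == GZIP_MAGIC
    if is_gzip:
        return gzip.open(path, "rt", encoding="utf-8", errors="replace")
    return open(path, encoding="utf-8", errors="replace")


def zgrep(pattern, paths):
    """Yield (path, line) for every line matching pattern, compressed or not."""
    regex = re.compile(pattern)
    for path in paths:
        with open_maybe_compressed(path) as f:
            for line in f:
                if regex.search(line):
                    yield str(path), line.rstrip("\n")


# Demo: the current log is plain text, the rotated one is gzip-compressed.
with tempfile.TemporaryDirectory() as d:
    Path(d, "log").write_text("ok\nERROR disk full\n")
    with gzip.open(Path(d, "log.1.gz"), "wt") as gz:
        gz.write("ERROR old failure\nok\n")
    matches = [line for _, line in zgrep("ERROR", sorted(Path(d).glob("log*")))]
print(matches)  # ['ERROR disk full', 'ERROR old failure']
```

Sniffing the content rather than trusting the `.gz` suffix means a rotated file that was never compressed is still read correctly.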

January 13, 2010 | LWN.net

The nine-year-old syslog-ng project is a popular, alternative syslog daemon - licensed under GPLv2 - that has established its name with reliable message transfer and flexible message filtering and sorting capabilities. In that time it has gained many new features, including direct logging to SQL databases, TLS-encrypted message transport, and the ability to parse and modify the content of log messages. The SUSE and openSUSE distributions use syslog-ng as their default syslog daemon.

In syslog-ng 3.0 a new message-parsing and classifying feature (dubbed pattern database or patterndb) was introduced. With recent improvements in 3.1 and the increasing demand for processing and analyzing log messages, a look at the syslog-ng capabilities is warranted.

The main task of a central syslog-ng log server is to collect the messages sent by the clients and route the messages to their appropriate destinations depending on the information received in the header of the syslog message or within the log message itself. Using various filters, it is possible to build even complex, tree-like log routes. For example:

It is equally simple to modify the messages by using rewrite rules instead of filters if needed. Rewrite rules can do simple search-and-replace, but can also set a field of the message to a specific value: this comes in handy when a client does not properly format its log messages to comply with the syslog RFCs. (This is surprisingly common with routers and switches.) Version 3.1 of syslog-ng makes it possible to rewrite the structured data elements in messages that use the latest syslog message format (RFC 5424).

Artificial ignorance

Classifying and identifying log messages has many uses. It can be useful for reporting and compliance, but can also be important from a security and system maintenance point of view. The syslog-ng pattern database is also advantageous if you are using the "artificial ignorance" log processing method, which was described by Marcus J. Ranum (MJR):

Artificial Ignorance - a process whereby you throw away the log entries you know aren't interesting. If there's anything left after you've thrown away the stuff you know isn't interesting, then the leftovers must be interesting.

Artificial ignorance is a method to detect the anomalies in a working system. In log analysis, this means recognizing and ignoring the regular, common log messages that result from the normal operation of the system, and therefore are not too interesting. However, new messages that have not appeared in the logs before can signify important events, and should therefore be investigated.

The syslog-ng pattern database

The syslog-ng application can compare the contents of the received log messages to a set of predefined message patterns. That way, syslog-ng is able to identify the exact log message and assign a class to the message that describes the event that has triggered the log message. By default, syslog-ng uses the unknown, system, security, and violation classes, but this can be customized, and further tags can be also assigned to the identified messages.

The traditional approach to identify log messages is to use regular expressions (as the logcheck project does for example). The syslog-ng pattern database uses radix trees for this task, and that has the following important advantages:

- Classifying messages is fast, much faster than with methods based on regular expressions. The speed of processing a message is practically independent of the total number of patterns. What matters is the length of the message and the number of "similar" messages, as this affects the number of junctions in the radix tree.

- Regular-expression based methods become increasingly slower as the number of patterns increases. Radix trees scale very well, because only a relatively small number of simple comparisons must be performed to parse the messages.

- The syslog-ng message patterns are easy to write, understand, and maintain.

For example, compare the following:

A log message from an OpenSSH server:

Accepted password for joe from 10.50.0.247 port 42156 ssh2

A regular expression that describes this log message and its variants:

Accepted \
(gssapi(-with-mic|-keyex)?|rsa|dsa|password|publickey|keyboard-interactive/pam) \
for [^[:space:]]+ from [^[:space:]]+ port [0-9]+( (ssh|ssh2))?

An equivalent pattern for the syslog-ng pattern database:

Accepted @QSTRING:auth_method: @ for @QSTRING:username: @ from \
@QSTRING:client_addr: @ port @NUMBER:port:@ @QSTRING:protocol_version: @

Obviously, log messages describing the same event can be different: they can contain data that varies from message to message, like usernames, IP addresses, timestamps, and so on. This is what makes parsing log messages with regular expressions so difficult. In syslog-ng, these parts of the messages can be covered with special fields called parsers, which are the constructs between '@' in the example. Such parsers process a specific type of data like a string (@STRING@), a number (@NUMBER@ or @FLOAT@), or an IP address (@IPV4@, @IPV6@, or @IPVANY@). Also, parsers can be given a name and referenced in filters or as a macro in the names of log files or database tables.

It is also possible to parse the message until a specific ending character or string using the @ESTRING@ parser, or the text between two custom characters with the @QSTRING@ parser.
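The difference between the two approaches can be illustrated with a toy classifier. The Python sketch below is a simplified, token-level prefix tree, not syslog-ng's implementation, which builds a radix tree over characters and supports many more parsers (including @QSTRING@ and @ESTRING@). It accepts only the parameterless `@TYPE:name:@` form, and its three parser regexes are approximations. Lookup walks the message once and branches only where patterns share a prefix, which is why adding unrelated patterns costs little.

```python
import re

PLACEHOLDER = re.compile(r"@(\w+):(\w+):@")  # e.g. @NUMBER:port:@
PARSERS = {  # rough approximations of a few syslog-ng parser types
    "NUMBER": re.compile(r"-?\d+"),
    "IPV4": re.compile(r"\d{1,3}(?:\.\d{1,3}){3}"),  # does not check octet ranges
    "STRING": re.compile(r"[\w.-]+"),
}


def new_node():
    return {"literals": {}, "parsers": [], "rule": None}


class PatternTree:
    """Token-level prefix tree: literal words first, then typed parser slots."""

    def __init__(self):
        self.root = new_node()

    def add(self, rule_id, pattern):
        node = self.root
        for token in pattern.split():
            slot = PLACEHOLDER.fullmatch(token)
            if slot is None:
                node = node["literals"].setdefault(token, new_node())
                continue
            key = (slot.group(1), slot.group(2))  # (parser type, field name)
            for existing_key, child in node["parsers"]:
                if existing_key == key:
                    node = child
                    break
            else:
                child = new_node()
                node["parsers"].append((key, child))
                node = child
        node["rule"] = rule_id

    def match(self, message):
        """Return (rule_id, fields) for the first matching pattern, else None."""
        return self._walk(self.root, message.split(), {})

    def _walk(self, node, tokens, fields):
        if not tokens:
            return (node["rule"], fields) if node["rule"] is not None else None
        head, rest = tokens[0], tokens[1:]
        child = node["literals"].get(head)
        if child is not None:  # an exact literal match is tried first
            found = self._walk(child, rest, fields)
            if found:
                return found
        for (kind, name), child in node["parsers"]:
            if PARSERS[kind].fullmatch(head):
                found = self._walk(child, rest, {**fields, name: head})
                if found:
                    return found
        return None


tree = PatternTree()
tree.add("ssh-accepted", "Accepted @STRING:auth_method:@ for @STRING:username:@ "
         "from @IPV4:client_addr:@ port @NUMBER:port:@ @STRING:protocol_version:@")
print(tree.match("Accepted password for joe from 10.50.0.247 port 42156 ssh2"))
```

The rule identifier returned here plays the role that the pattern's ID plays in syslog-ng, and the named fields correspond to the parser names in the pattern.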

A syslog-ng pattern database is an XML file that stores patterns and various metadata about the patterns. The message patterns are sample messages that are used to identify the incoming messages, while the metadata can include descriptions, custom tags, a message class (which is just a special type of tag), and name-value pairs (which are yet another type of tag).

The syslog-ng application has built-in macros for using the results of the classification: the .classifier.class macro contains the class assigned to the message (e.g., violation, security, or unknown) and the .classifier.rule_id macro contains the identifier of the message pattern that matched the message. It is also possible to filter on the tags assigned to a message. As with syslog, these routing rules are specified in the syslog-ng.conf file.

Using syslog-ng

In order to use these features, get syslog-ng 3.1 - older versions use an earlier and less complete database format. As most distributions still package version 2.x, you will probably have to download it from the syslog-ng download page.

The syntax of the pattern database file might seem a bit intimidating at first, but most of the elements are optional. Check The syslog-ng 3.1 Administrator Guide [PDF] and the sample database files to start with, and write to the mailing list if you run into problems.

A small utility called pdbtool is available in syslog-ng 3.1 to help with testing and managing pattern databases. It allows you to quickly check whether a particular log message is recognized by the database, and also to merge the XML files into a single XML file for syslog-ng. See pdbtool --help for details.

Closing remarks

The syslog-ng pattern database provides a powerful framework for classifying messages, but it is powerless without the message patterns that make it work. IT systems consist of several components running many applications, which means a lot of message patterns to create. This clearly calls for community effort to create a critical mass of patterns where all this becomes usable.

To start with, BalaBit - the developer of syslog-ng - has made a number of experimental pattern databases available. Currently, these files contain over 8000 patterns for over 200 applications and devices, including Apache, Postfix, Snort, and various common firewall appliances. The syslog-ng pattern databases are freely available for use under the terms of the Creative Commons Attribution-Noncommercial-Share Alike 3.0 (CC by-NC-SA) license.

A community site for sharing pattern databases is reportedly also under construction, but until this becomes a reality, pattern database related discussions and inquiries should go to the general syslog-ng mailing list.

Selected Comments

Maybe less is more?

eparis123

Sometimes I'm not very comfortable with this "central" sort of approach, as such approaches get over-complicated over time.

Maybe it's the fear of change, but I like the simple sparse list of configuration files under /etc rather than the GNOME gconf XML repository.

By the same token, while the files under /var/log are a bit chaotic compared to the syslog-ng approach, they are much simpler, and simplicity is good.

The tool seems to shine for server farms though.

Re: Maybe less is more?

frobert:

You are absolutely right, using patterndb for a single host might be an overkill. It is mainly aimed at larger networks.

Artificial Stupidity ~= antilogging

davecb:

Oh cool, it was Marcus who thought of this approach! I wrote up the "antilog" variant of it in "Sherlock Holmes on Log Files", http://datacenterworks.com/stories/antilog.html.

I'll drop him a line...

--dave

it would be nice to see a comparison with rsyslog

dlang:

as most distros are moving from traditional syslog to rsyslog, the baseline capabilities are climbing.

rsyslog doesn't have a pattern database, but it does have quite a bit of filtering and re-writing capability.

Data Center Works

It is impossible that the regular, routine and unexceptional activities are the cause of the problem. If we remove the messages about routine activities from the logs, then whatever remains will be extraordinary, and so relevant to the problem we are trying to diagnose.

Fortunately, we can exclude routine log entries quite easily. We take all the old logs, up to but not including the day the problem occurred, remove the date-stamps, and use sort -u to create a table of log entries that we've seen previously. We then turn the table into an awk program to filter out these lines, and run that against the logs from the day the problem occurred.
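The table-building and filtering steps can be expressed compactly in Python as well. This sketch mirrors only the exact-match stage described above: strip date-stamps, deduplicate like `sort -u`, and print anything not seen before. The syslog date-stamp regex is an assumption, and the sample lines are illustrative, built from the messages quoted below with made-up dates. Fields that vary between occurrences, such as PIDs, still defeat exact matching, which is why the table was then hand-edited into awk patterns.

```python
import re

# Assumed classic syslog prefix such as "Mar  3 08:05:13 "; adjust for other formats.
DATESTAMP = re.compile(r"^[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2} ")


def strip_date(line):
    return DATESTAMP.sub("", line.rstrip("\n"))


def build_table(old_lines):
    """Date-stamps removed, duplicates collapsed: the `sort -u` step as a set."""
    return {strip_date(line) for line in old_lines}


def novel_entries(table, todays_lines):
    """Yield today's lines whose date-stripped text was never seen before."""
    for line in todays_lines:
        if strip_date(line) not in table:
            yield line.rstrip("\n")


old = [
    "Mar  1 10:00:01 froggy pcipsy: [ID 781074 kern.warning] WARNING: pcipsy0: spurious interrupt from ino 0xb",
    "Mar  2 09:12:44 froggy pcipsy: [ID 781074 kern.warning] WARNING: pcipsy0: spurious interrupt from ino 0xb",
]
today = [
    "Mar  3 08:00:00 froggy pcipsy: [ID 781074 kern.warning] WARNING: pcipsy0: spurious interrupt from ino 0xb",
    "Mar  3 08:05:13 froggy CNS Transport[1160]: [ID 195303 daemon.error] unhandled exception",
]
for entry in novel_entries(build_table(old), today):
    print(entry)  # only the CNS Transport line survives
```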

For example, a machine named "froggy" had a problem with Sun's update program, and the /var/adm/messages contents for previous days, once sorted, were a series of lines like this:

froggy /sbin/dhcpagent[1007]: [ID 929444 daemon.warning] configure_if: no IP broadcast specified for dmfe0, making best guess
froggy /sbin/dhcpagent[1029]: [ID 929444 daemon.warning] configure_if: no IP broadcast specified for dmfe0, making best guess
froggy gconfd (root-776): [ID 702911 user.error] file gerror.c: line 225: assertion `src != NULL' failed
froggy pcipsy: [ID 781074 kern.warning] WARNING: pcipsy0: spurious interrupt from ino 0xb

A bit of editing turned this into

/dhcpagent.* configure_if: no IP broadcast specified/ { next; }
/gconfd.* file gerror.c: line 225/ { next; }
/pcipsy.* WARNING: pcipsy0: spurious interrupt from/ { next; }
/.*/ { print $0 }

That's an awk program that will remove all variants of these three error messages. When the program was extended to a mere 23 lines and run against the current day's /var/adm/messages, it exposed the following error:

froggy CNS Transport[1160]: [ID 195303 daemon.error] unhandled exception: buildSysidentInfo: IP address getSystemIPAddr error: node name or service name not known

A little checking showed us that this was the Sun Update Connection program complaining that there was no network available, which was perfectly reasonable, as the machine in question was a laptop and really wasn't connected to a network at the time the error occurred.

So we added a rule for updating-program errors, right after the one for "no IP broadcast specified", another message that only occurs when the machine is disconnected.

Turning This into a Process and a Tool

This kind of manual programming is fine for a one-off exercise, but it would be better to have a config file we can update occasionally, and run the filter program every night against every machine via cron. Then any new messages would be reported bright and early for us to look at, before the users discover them.

To make it easier, and to reduce the likelihood of making a typographical error creating the patterns, we wrote an awk program to translate a configuration file into proper awk patterns, and called it antilog. To give you an easy starting point, we put a default config file into the program itself, so you can just copy it out and add local additions.

In addition, we taught it a bit more about syslog log files, as they're very common, and added a few options for debugging and for emailing the results to an individual or list.

Finally, we invented a notation for multiple lines which begin with the same pattern:

daemon.error {
Failed to monitor the power button.
Unable to connect to open /dev/pm: pm-actions disabled
}

This will create two awk commands of the form

daemon.error.*Failed to monitor the power button.
daemon.error.*Unable to connect to open /dev/pm: pm-actions disabled

to save typing (and typos).
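A hypothetical Python version of the translation step shows how little is involved. It is not the antilog program: it assumes the opening `facility {` and the closing `}` each sit on their own line, escapes only `/` and `]`, treats a trailing ` pass` as "print this line", and appends the final catch-all rule. The compiled output shown later in this section includes extra behavior (such as the default `.debug]` and kernel rules) that the sketch does not reproduce.

```python
def compile_config(text):
    """Turn an antilog-style config into awk rules (simplified, hypothetical)."""
    rules, prefix = [], None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue                    # blank lines and comments
        if line.endswith("{"):
            prefix = line[:-1].strip()  # "daemon.error {" opens a group
            continue
        if line == "}":
            prefix = None               # closes the group
            continue
        action = "next"                 # default: drop matching lines
        if line.endswith(" pass"):
            line, action = line[: -len(" pass")], "print $0; next"
        pattern = f"{prefix}.*{line}" if prefix else line
        pattern = pattern.replace("/", r"\/").replace("]", r"\]")
        rules.append(f"/{pattern}/ {{ {action} }}")
    rules.append("/.*/ { print $0 }")   # anything left over is interesting
    return "\n".join(rules)


sample = """\
# Power management
daemon.error {
Failed to monitor the power button.
Unable to connect to open /dev/pm: pm-actions disabled
}
mail.alert] unable to qualify my own domain name
NOTICE.*TX stall detected after: pass
"""
print(compile_config(sample))
```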

Our final config file looks like this:

# Boot time
last message repeated
Use is subject to license terms.
auth.crit {
rebooted by root
}
# Power management
daemon.error {
Failed to monitor the power button.
Unable to connect to open /dev/pm: pm-actions disabled
}
# sendfail(8) blunders
mail.alert] unable to qualify my own domain name
mail.crit] My unqualified host name
# Shutdown
syslogd: going down on signal 15
daemon.error] rpcbind terminating on signal.
# DHCP
daemon.warning] configure_if: no IP broadcast specified
# Networking
NOTICE.*TX stall detected after: pass
kern.warning] WARNING: pcipsy0: spurious interrupt from ino
# Gconfd
user.error] file gerror.c. line 225. assertion
# Java
user.error {
libpkcs11: /usr/lib/security/pkcs11_softtoken.so unexpected failure
libpkcs11: open /var/run/kcfd_door: No such file or directory
}
# And trim off the rest of the low-priority kernel messages.
kern.notice
kern.info

When run, Antilog compiles the program above into:

/\.debug\]/ { next }
/kern.notice/ { next }
/kern.info/ { next }
/last message repeated/ { next }
/Use is subject to license terms./ { next }
/auth.crit.*rebooted by root/ { next }
/daemon.error.*Failed to monitor the power button./ { next }
/daemon.error.*Unable to connect to open \/dev\/pm: pm-actions disabled/ { next }
/mail.alert\] unable to qualify my own domain name/ { next }
/mail.crit\] My unqualified host name/ { next }
/syslogd: going down on signal 15/ { next }
/daemon.error\] rpcbind terminating on signal./ { next }
/daemon.warning\] configure_if: no IP broadcast specified/ { next }
/NOTICE.*TX stall detected after:/ { print $0; next }
/kern.warning\] WARNING: pcipsy0: spurious interrupt from ino 0x2a/ { next }
/user.error\] file gerror.c. line 225. assertion/ { next }
/user.error.*libpkcs11: \/usr\/lib\/security\/pkcs11_softtoken.so/ { next }
/user.error.*libpkcs11: open \/var\/run\/kcfd_door: No such file/ { next }
/kern.notice/ { next }
/kern.info/ { next }
/.*/ { print $0 }

We've used this same technique quite a number of times: for syslog files after an Oracle crash, for warning messages from a compiler, and for the log file from the X.org release of the X Window System. In each case it's turned hours of reading into a few minutes of preprocessing.

Then we can get down to the real detective work: finding out why we're seeing the particular problem.

Downloading

The production antilog program and its man page are available at http://www.datacenterworks.com/tools

freshmeat.net

The Logfile Navigator, lnav for short, is a curses-based tool for viewing and analyzing log files. The value added by lnav over text viewers or editors is that it takes advantage of any semantic information that can be gleaned from the log file, such as timestamps and log levels.

Using this extra semantic information, lnav can do things like interleaving messages from different files, generating histograms of messages over time, and providing hotkeys for navigating through the file. These features are meant to allow the user to quickly and efficiently focus on problems.
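Interleaving is the easiest of these features to sketch. The Python below only illustrates the idea and is not lnav's implementation: it assumes every line begins with a `YYYY-MM-DD HH:MM:SS` timestamp and that each file is already in time order, whereas lnav recognizes many log formats by itself. The sample lines are made up.

```python
import heapq
from datetime import datetime

TIME_FORMAT = "%Y-%m-%d %H:%M:%S"  # assumed: every line starts with this layout


def timestamp(line):
    return datetime.strptime(line[:19], TIME_FORMAT)


def interleave(*logs):
    """Lazily merge several time-ordered logs into one chronological stream."""
    return heapq.merge(*logs, key=timestamp)


access = ["2018-10-02 10:00:00 GET /", "2018-10-02 10:00:05 GET /login"]
error = ["2018-10-02 10:00:03 [error] upstream timed out"]
for line in interleave(access, error):
    print(line)
```

`heapq.merge` holds only one pending line per input, so this approach scales to large files as long as each one is individually sorted.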

Logsurfer is a program for monitoring system logs in real time and reporting on the occurrence of events. It is similar to the well-known swatch program on which it is based, but offers a number of advanced features that swatch does not support.

Logsurfer is capable of grouping related log entries together. For instance, when a system boots it usually creates a large number of log messages. In this case, logsurfer can be set up to group boot-time messages together and forward them in a single email message to the system administrator under the subject line "Host xxx has just booted". Swatch just couldn't do this properly.
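Logsurfer's own configuration language is not shown here, so the following is only a hypothetical Python illustration of the grouping idea: open a context when a start pattern matches, collect lines until an end pattern (or a size cap), then hand the whole group on as one report. The boot-time patterns and the `max_lines` cap are assumptions, and real Logsurfer contexts also support timeouts, which this sketch omits.

```python
import re


def group_contexts(lines, start, end, max_lines=1000):
    """Yield lists of lines from a `start` match through the next `end` match.

    A rough analogue of a Logsurfer context: the patterns and the max_lines
    cap are illustrative assumptions, and timeouts are not modelled.
    """
    start_re, end_re = re.compile(start), re.compile(end)
    context = None
    for line in lines:
        if context is None:
            if start_re.search(line):
                context = [line]  # open a new context
            continue
        context.append(line)
        if end_re.search(line) or len(context) >= max_lines:
            yield context  # close it and report the whole group at once
            context = None
    if context:
        yield context  # flush a context still open at end of input


boot_log = [
    "kernel: Linux version 2.6.32",
    "kernel: Memory: 2048k available",
    "init: Entering runlevel: 3",
    "sshd[812]: Server listening on 0.0.0.0 port 22",
]
for group in group_contexts(boot_log, r"kernel: Linux version", r"Entering runlevel"):
    print(f"Subject: Host xxx has just booted ({len(group)} lines)")
```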

Logsurfer is written in C - this makes it extremely efficient, an important factor when sites generate a large volume of log traffic. I have used logsurfer at a site where a logging server was recording more than 500,000 events per day - and Logsurfer had no trouble keeping up with this load. Swatch, on the other hand, is written in Perl and runs into trouble even when dealing with a much smaller rate of log traffic.

Logsurfer+ Features

Logsurfer+ is a branched version of the standard Logsurfer package from DFN-CERT; it has been modified to add a few features that extend what can be done with it.

Logsurfer+ 1.7 Features

Many of the features in this release are designed to allow Logsurfer to work as a log aggregator - to quickly and efficiently detect complex events and place summarization messages either into plain files or back into syslog.

- Added -e option to begin processing from the current end of the input log file ( normally used with -f )

- Put double-quotes around regex expressions in dump file

- If the context argument to a pipe or report action is "-", then the current context contents are piped into the command; this should shorten most context definitions.

- Added new action "echo", which simply echoes the output on stdout, or to a file with an optional >file or >>file first argument. This is more efficient than invoking an external process for simple echo actions.

- Added a macro construct in context action fields, if "$lines" exists in a context action (such as a command line) it will be substituted by the number of lines in the context

- Added syslog action to send a message into syslog. The first argument to the action must be <facility>:<level>, the second argument is the string to send to syslog. Note that the log lines stored in a context are not forwarded into syslog.

<decoder name="sshd-success">

<program_name>sshd</program_name>

<regex>^Accepted \S+ for (\S+) from (\S+) port </regex>

<order>user, srcip</order>

</decoder>
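The XML fragment above appears to be an OSSEC-style log decoder (an inference; the page does not say). The Python sketch below shows what such a decoder does with an sshd message: check the program name, apply the `<regex>`, and assign the captured groups to the names listed in `<order>`. Python's `re` module is used here, and the decoder engine's own regex dialect may treat `\S` and anchors slightly differently.

```python
import re

# Values copied from the decoder fragment: <program_name>, <regex>, <order>.
PROGRAM_NAME = "sshd"
REGEX = re.compile(r"^Accepted \S+ for (\S+) from (\S+) port ")
ORDER = ["user", "srcip"]


def decode(program_name, message):
    """Return {field: value} for a matching sshd message, otherwise None."""
    if program_name != PROGRAM_NAME:
        return None
    match = REGEX.search(message)
    return dict(zip(ORDER, match.groups())) if match else None


print(decode("sshd", "Accepted password for joe from 10.50.0.247 port 42156 ssh2"))
# {'user': 'joe', 'srcip': '10.50.0.247'}
```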

check_logfiles 2.3.3 (Default)

Added: Sun, Mar 12th 2006 15:09 PDT

Updated: Tue, May 6th 2008 10:37 PDT

About: check_logfiles is a plugin for Nagios that checks logfiles for defined patterns. It is capable of detecting logfile rotation; if you tell it what the rotated archives look like, it will also examine these files. Traditional logfile plugins, unlike check_logfiles, were not aware of the gap that can occur across a rotation, so under some circumstances they ignored what had happened between their checks. A configuration file specifies where to search, what to search for, and what to do if a matching line is found.
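The gap problem comes from rotation: a plugin that only remembers a byte offset misses whatever was written to the old file between its last check and the rotation. A minimal Python sketch of the usual workaround, assuming the rotated archive keeps a predictable name (here a hypothetical `path + ".1"`), is to remember the inode and offset and, when either changes unexpectedly, finish reading the archive before starting on the new file. This is not check_logfiles' own implementation, which handles many more archive naming schemes.

```python
import os


def read_new_lines(path, state, rotated_path=None):
    """Return lines appended to `path` since the previous call.

    `state` is a dict that persists the inode and byte offset between calls.
    If the inode changed (rename-style rotation) or the file shrank
    (copytruncate-style rotation), the tail of `rotated_path` is read first
    so the lines written just before the rotation are not lost.
    """
    st = os.stat(path)
    offset = state.get("offset", 0)
    lines = []
    if "inode" in state and (st.st_ino != state["inode"] or st.st_size < offset):
        # Rotation detected: finish the old file where the last check stopped.
        if rotated_path and os.path.exists(rotated_path):
            with open(rotated_path, "rb") as old:
                old.seek(offset)
                lines.extend(old.read().decode("utf-8", "replace").splitlines())
        offset = 0
    with open(path, "rb") as cur:
        cur.seek(offset)
        lines.extend(cur.read().decode("utf-8", "replace").splitlines())
        state["offset"] = cur.tell()
    state["inode"] = st.st_ino
    return lines
```

On the first call the whole file is returned; a real checker would probably start at the end instead and keep `state` on disk between runs.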

Logrep is a secure multi-platform framework for the collection, extraction, and presentation of information from various log files. It features HTML reports, multidimensional analysis, overview pages, SSH communication, and graphs, and supports 18 popular systems including Snort, Squid, Postfix, Apache, Sendmail, syslog, ipchains, iptables, NT event logs, Firewall-1, wtmp, xferlog, Oracle listener and Pix.


- Download: http://www.l0t3k.net/tools/Loganalysis/LogrepSource-1.4.2.tar.gz

- License: GNU General Public License

- Platform(s): Windows NT/2000, Linux

Kazimir is a log analyzer. It uses a complete configuration file to describe which logs (or non-regression tests) are to be watched or spawned and which regular expressions to look for in them. Interesting information found in the logs can be associated with "events" in a boolean and chronological way, and the occurrence of events can trigger the execution of commands.

Release focus: Initial freshmeat announcement
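Kazimir's own configuration syntax is not reproduced here. As a hypothetical illustration of associating events chronologically, the Python sketch below fires when one named event is followed by another within a time window (for example, a failed login followed shortly by a successful one); the event names and the window are made up, and boolean combinations of such conditions would be layered on top.

```python
def correlate(events, first, second, window):
    """Yield (t_first, t_second) each time `second` occurs no more than
    `window` seconds after the most recent `first`.

    `events` is an iterable of (timestamp, name) pairs in chronological order.
    """
    last_first = None
    for t, name in events:
        if name == first:
            last_first = t
        elif name == second and last_first is not None:
            if t - last_first <= window:
                yield (last_first, t)
            last_first = None  # each `first` event is consumed at most once


events = [(0, "login_failed"), (5, "login_failed"), (8, "login_ok"),
          (100, "login_failed"), (200, "login_ok")]
for t1, t2 in correlate(events, "login_failed", "login_ok", window=10):
    print(f"login_ok at t={t2}, only {t2 - t1}s after a failure")
```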

Cisco IP Accounting Fetcher is a set of Perl scripts that allows you to fetch IP accounting data from Cisco routers. It is capable of fetching this information from multiple routers. It summarizes this information on a daily and monthly basis. It optionally generates HTML output with CSS support, and it is able to ignore specific traffic.

Perl + Python. Looks dead as of Jan 2010.

Nov 2005

Epylog is a log notifier and parser that periodically tails system logs on Unix systems, parses the output in order to present it in an easily readable format (parsing modules currently exist only for Linux), and mails the final report to the administrator. It can run daily or hourly. Epylog is written specifically for large clusters where many systems log to a single loghost using syslog or syslog-ng.

Author:

Konstantin Ryabitsev
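Epylog's parsing modules are considerably more elaborate. To give a feel for the "parse and condense" step, here is a hypothetical Python sketch that reads traditional BSD-style syslog lines (`Mon DD HH:MM:SS host program[pid]: message`) and counts messages per host and program, the kind of table a daily report might start with; tailing the logs and mailing the report are left out.

```python
import re
from collections import Counter

# Traditional BSD-style syslog line: "Mon DD HH:MM:SS host program[pid]: message"
SYSLOG_LINE = re.compile(
    r"^\w{3}\s+\d{1,2} \d\d:\d\d:\d\d (\S+) ([^\s:\[]+)(?:\[\d+\])?: (.*)$")


def summarize(lines):
    """Count messages per (host, program); unparsable lines are skipped."""
    counts = Counter()
    for line in lines:
        m = SYSLOG_LINE.match(line)
        if m:
            host, program, _message = m.groups()
            counts[(host, program)] += 1
    return counts


def report(counts):
    """Render the counts as a plain-text table, busiest first."""
    rows = [f"{host:<12} {program:<12} {n:>6}"
            for (host, program), n in counts.most_common()]
    return "\n".join(rows)


sample = [
    "Jan  5 10:00:01 node1 sshd[123]: Accepted password for alice",
    "Jan  5 10:00:02 node1 sshd[124]: Accepted password for bob",
    "Jan  5 10:00:03 node2 kernel: eth0: link up",
]
print(report(summarize(sample)))
```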

About:

devialog is a behavior/anomaly/signature-based syslog intrusion detection system which can detect new, unknown attacks. It fits comfortably in a heterogeneous Unix/Linux/*BSD environment at the core of a central syslog server. devialog generates its own signatures and acts upon anomalies as configured by the system administrator.

In addition, devialog can function as a traditional syslog parsing utility in which known signatures trigger actions.

Release focus: Minor bugfixes

Changes:

Bug fixes include better handling of lines with some special characters. A timing error was fixed within alert generation: sometimes alerts would be sent inadvertently based on the timing of a new log arriving as an alert was sent out in specific high-volume log situations. Altered signature generation creates more exact regular expressions.
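devialog's signature generation is not documented in this excerpt. One common way to "generate signatures" from normal traffic, shown here only as a hypothetical Python sketch, is to mask variable fields (IP addresses, hex values, numbers) so that each line collapses to a template, learn the set of templates from a known-good baseline, and flag any line whose template has never been seen.

```python
import re

# Masks applied in order: IPv4 addresses, hex values, then any remaining digits.
MASKS = [
    (re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b"), "<IP>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\d+"), "<N>"),
]


def template(line):
    """Collapse variable fields so similar lines share one template."""
    for pattern, token in MASKS:
        line = pattern.sub(token, line)
    return line


def learn(baseline_lines):
    """The 'signatures' are simply the set of templates seen in normal logs."""
    return {template(line) for line in baseline_lines}


def anomalies(lines, known):
    """Return the lines whose template never appeared in the baseline."""
    return [line for line in lines if template(line) not in known]


known = learn([
    "sshd[101]: Accepted password for alice from 10.0.0.1 port 5000",
    "CRON[200]: (root) CMD (run-parts /etc/cron.hourly)",
])
for line in anomalies([
    "sshd[999]: Accepted password for alice from 10.0.0.7 port 6000",
    "kernel: segfault at 0x0 ip 0x7f3a",
], known):
    print("ANOMALY:", line)
```

Fields that are not masked, such as user names, still make lines distinct, so a login by a user never seen in the baseline is reported as well, which may or may not be what you want.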

Net::Dev::Tools::Syslog version 0.8.0

DESCRIPTION

This module provides functionality to:

- parse syslog log files, apply filters

- send syslog message to syslog server

- listen for syslog messages on localhost

- forward received messages to other syslog servers

INSTALLATION

To install this module, type the following:

perl Makefile.PL
make
make test
make install

DEPENDENCIES

This module requires these other modules and libraries:

Time::Local

IO::Socket

Sys::Hostname

Too complex to be useful, and it does not provide additional functionality for those who already know scripting languages.
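For reference, a classic BSD syslog (RFC 3164) message sent to a server is a single UDP datagram that starts with a priority value in angle brackets, computed as facility * 8 + severity, followed by a timestamp, host name, tag, and the message text. The Python sketch below builds such a message and sends it; the function names are hypothetical, only a subset of facilities is listed, and it does not reproduce the Perl module's API.

```python
import socket
import time

# Numeric codes from RFC 3164 (subset of the facilities).
FACILITIES = {"kern": 0, "user": 1, "mail": 2, "daemon": 3, "auth": 4,
              "syslog": 5, "local0": 16, "local7": 23}
SEVERITIES = {"emerg": 0, "alert": 1, "crit": 2, "err": 3,
              "warning": 4, "notice": 5, "info": 6, "debug": 7}


def format_rfc3164(facility, severity, hostname, tag, message, ts=None):
    """Build '<PRI>Mmm dd hh:mm:ss host tag: message' with a space-padded day."""
    pri = FACILITIES[facility] * 8 + SEVERITIES[severity]
    t = time.localtime(ts)
    stamp = time.strftime("%b ", t) + f"{t.tm_mday:2d}" + time.strftime(" %H:%M:%S", t)
    return f"<{pri}>{stamp} {hostname} {tag}: {message}"


def send_syslog(packet, host="127.0.0.1", port=514):
    """Send one message as a single UDP datagram (514 is the standard port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet.encode("utf-8"), (host, port))


msg = format_rfc3164("auth", "crit", socket.gethostname(), "su",
                     "'su root' failed for lonvick on /dev/pts/8")
print(msg)  # starts with "<34>" because auth (4) * 8 + crit (2) = 34
```

Calling `send_syslog(msg, "loghost")` would deliver it to a server named `loghost` (a hypothetical host name).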

Log file search and indexing software vendor Splunk Inc. announced Tuesday that it will soon add systems management host, network and service monitoring capabilities to its software through a partnership with the Nagios open-source project.

The new capabilities, which will be added to Splunk's log file search and indexing applications over the next six months, will give systems administrators even more information to monitor and repair their networks, said Patrick J. McGovern III, chief community Splunker at San Francisco-based Splunk. Splunk has seen more than 25,000 downloads of its software since its 1.0 release came out in November, he said, while Nagios delivers about 20,000 downloads a month of its open-source network and service monitoring application.

"The two working together is going to be a big win for both communities," McGovern said.

McGovern is the son of Patrick J. McGovern, the founder and chairman of International Data Group Inc., which publishes Computerworld.

Splunk's free Splunk Server application runs on Linux, Solaris or BSD operating systems but is limited to searching and indexing 500MB of system log files each day. The full version, Splunk Professional Server, starts at $2,500 and goes up to $37,500 for an unlimited capacity. Both products search and index in real time the log files of mail servers, Web servers, J2EE servers, configuration files, message queues and database transactions from any system, application or device. Splunk uses algorithms to automatically organize any type of IT data into events without source-specific parsing or mapping. After the events are classified and any relationships between them discovered, the data is indexed by time, terms and relationships.

The integration of the Splunk applications and the Nagios features will take place in phases over the next six months, McGovern said.

The Nagios software allows monitoring of network services, including SMTP, POP3, HTTP, NNTP and PING, as well as monitoring of processor load, disk and memory usage, and running processes. Users can also monitor environmental factors in data centers such as temperature and humidity.

Other log file monitoring and management applications are available from competitors including Opalis Software Inc. and Prism Microsystems Inc.

Dana Gardner, an analyst at Interarbor Solutions LLC in Gilford, N.H., said Splunk's log file search capabilities bring a novel approach to IT management. "Certainly on the theory basis, on the vision basis, it makes a lot of sense," Gardner said. "Search can be a really useful tool to help systems administrators figure out a vexing problem amid a huge quantity of data."

"It certainly gets at the heart of what keeps IT administrators up at night, managing complexity and chaos," Gardner said.
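Splunk's own algorithms are proprietary and are not described here. The general idea of indexing events by time and terms can be illustrated with a plain inverted index: each event gets an id, each word maps to the set of ids containing it, and a query intersects those sets and then filters by time. The following Python sketch is a toy under those assumptions (the `LogIndex` class is hypothetical), not a model of Splunk.

```python
import re
from collections import defaultdict

TOKEN = re.compile(r"\w+")


class LogIndex:
    """Toy inverted index over (timestamp, text) log events; hypothetical."""

    def __init__(self):
        self.events = []                   # event id -> (timestamp, text)
        self.postings = defaultdict(set)   # term -> ids of events containing it

    def add(self, timestamp, text):
        event_id = len(self.events)
        self.events.append((timestamp, text))
        for term in TOKEN.findall(text.lower()):
            self.postings[term].add(event_id)

    def search(self, query, start=None, end=None):
        """Return events containing every query term, optionally within [start, end]."""
        terms = TOKEN.findall(query.lower())
        if not terms:
            return []
        ids = set.intersection(*(self.postings.get(t, set()) for t in terms))
        hits = (self.events[i] for i in sorted(ids))
        return [(ts, text) for ts, text in hits
                if (start is None or ts >= start) and (end is None or ts <= end)]


idx = LogIndex()
idx.add(100, "sshd: Failed password for root from 10.0.0.9")
idx.add(200, "sshd: Accepted password for alice from 10.0.0.5")
idx.add(300, "kernel: Out of memory: Killed process 4242")
print(idx.search("password"))
print(idx.search("failed root"))
```

A real engine would also persist the index and extract fields, but intersecting posting sets is the core of term search.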

SysLog & Event Log Management, Analysis & Reporting

ManageEngine EventLog Analyzer is a web-based, agent-less syslog and event log management solution that collects, archives, and reports on event logs from distributed Windows hosts and syslogs from UNIX hosts, routers and switches. It helps organizations meet host-based security event management (SEM) objectives and the demands of regulatory compliance requirements such as HIPAA, SOX, and GLBA.

Sawmill is a world-class web log analyzer, but it's more than just that. Sawmill can analyze all of your log files, from all of your servers. Sawmill can analyze:

- Streaming media logs: RealPlayer, Microsoft Media, and Quicktime Streaming Server.

- FTP logs from most FTP servers.

- Proxy logs from most proxy servers.

- Firewall logs from most firewalls.

- Cache logs from most cache servers.

- Network logs: Cisco PIX, IOS; tcpdump, and more.

- Mail logs: SMTP, POP, IMAP from most mail servers.

- And dozens of others!

Sawmill is a universal log analysis tool; it's not limited to web logs. You can use it to track usage statistics on your proxy and cache servers. You can use it to bill by bandwidth. You can use it to monitor all of the TCP/IP traffic on your internal network, or through your firewall, to see who's eating all that expensive bandwidth you just bought. As an ISP/ASP, you'll find dozens of uses for Sawmill beyond just providing your customers with the best statistics.

And that's not all! Sawmill lets you define your own log format, using a very powerful format description mechanism that can be used to describe just about any possible log format. Do you have your own special logs that you'd like to analyze? Have you written a custom server that no other tool knows anything about? In a few minutes, you can have Sawmill processing those files just as easily as it processes web logs (in fact, we'll happily write the log format descriptor file, and include it with the next version of Sawmill). All the advantages it has in web statistics, it has with all other logs -- you'll get unprecedented detail and power for your statistics, no matter where the log files came from.

"If you go into a room full of IT managers and ask how many are working on home-grown log solutions, half the room will raise their hands," said Stephen Northcutt, director of training and certification for the Bethesda, Md.-based institute. "Why is that bad? Because the guy who writes it leaves and doesn't document what he did or leave instructions behind. Then the person who takes over can't figure out how to interpret the logs or what to do if there's a problem."

Security experts have long advised that a clear audit trail is necessary to track suspicious network activity and quickly respond to security incidents. Northcutt agreed, and said companies that decide to take it seriously should "buy a commercial tool and pray that it works" or "get help from a MSSP."

Window dressing for compliance's sake

As part of the research, SANS polled 1,067 security-minded system administrators from a variety of industries. "Slightly over one fourth of the respondents stated that they retained logs for over one year. Almost half of the respondents [44%] don't keep logs more than a month," the report said. "Since many regulatory and accounting bodies are recommending or even requiring log retention of three to seven years, why do so many companies have such short retention times?"

Those who answered the question cited three key problems: the amount of data to manage, the speed the log data comes in and the lack of a consistent format for the log data. "Closely related to all of these is a lack of manpower," the report said. "It takes people to maintain a logging system and more people to monitor it and, of course, man hours relates to money."

"That's not far off target," said Diane McQueen, systems engineer for Perot Systems, which manages IT security for the nonprofit Northern Arizona Healthcare hospital chain. "With the amount of paperwork auditing produces, a big problem is taking the time to look through those logs. It's a resource issue."

The report said many companies do nothing with their logs. At best, they look through them after an incident as they scramble to find the source of a problem. Another downside is that companies are often so zealous to satisfy the regulatory letter of such laws as HIPAA, Sarbanes-Oxley and Gramm-Leach-Bliley that they cobble together half-baked logging systems.

The more diverse your environment, the more you need outside help.

Stephen Northcutt

director of training and certification, SANS Institute

"For the smaller guys, it can be cheaper to pay the fine than pay for everything needed for full compliance," Northcutt said. "There are those who do window dressing to appear to be in compliance, but they're not really using their tools. They're not taking this seriously day to day."

The big picture may be worse than the survey suggests, said Adam Nunn, security and corporate compliance manager for a large U.S. healthcare organization. Nunn said his organization takes log management very seriously and that efforts are underway to further improve the system. But, he added, "Most of the smaller health care providers I am familiar with are seriously lacking logging capability" or they don't really review the logs they have.

Federal requirements boosting awareness

At the same time, the need to be in compliance with laws like HIPAA and Sarbanes-Oxley has helped IT managers understand the need to take log management more seriously.

"As computers become more numerous and regulation compliance becomes more a part of daily life, some system administrators are finding that log management is becoming a problem," the report said. "The scripts and manual processes that have historically been used by 80% of the market need to be upgraded. This has resulted in a relatively new log management industry. Log issues tend to snowball as the size of a company grows."

In a recent SearchSecurity.com report on organizations struggling with HIPAA's security rules, IT managers said regulatory demands had prompted them to improve their logging systems and invest in new tools.

"I have become a big advocate of the phrase 'trust but verify,'" Nunn said. "We must use the logging mechanism as a primary way to prevent unauthorized activity and enforce compliance of insiders and be able to track where our information is going and who accessed it."


While he stressed the need for some companies to buy commercial tools or outsource their log keeping, Northcutt said the in-house programs are not always a bad thing.

"A locally-developed software solution isn't wrong per se. But if you go for the home-grown solution, your chances of success are better if you're an all-Windows or all-Unix shop," he said. "If you mix your operating systems, you're going to run into trouble. The more diverse your environment, the more you need outside help."

McQueen's advice to IT managers struggling with log management is this: "Set up your standards and adopt a tool that will alert you to any changes on the network," she said. "For example, if a new user comes on, the tool should alert you to its presence. That way, you don't have to spend time scanning the user directories every day to keep track of new users or other changes."

IPFC is a software and framework to manage and monitor multiple types of security modules across a global network. Security modules can be as diverse as packet filters (like netfilter, pf, ipfw, IP Filter, checkpoint FW1...), NIDS (Snort, ISS RealSecure...), webservers (from IIS to Apache) and other general devices (from servers to embedded devices).

Another way to describe IPFC: it is a complete, generic Managed Security Services (MSS) software infrastructure.

The main features are:

- Centralized and unified logging of multiple devices (from servers to firewalls, including special devices)

- Dynamic correlation of logs

- Alarming

- Active evaluation of your security infrastructure

- Unified policy and configuration management

- Can be integrated into existing monitoring infrastructure

- Auditable source code available (under the GNU General Public License)

- Scalability and security of the framework

- Easily extensible

again, i'm cross posting to the log analysis mailing list cos, well, it's my list ;-)

On Mon, 3 Nov 2003, Eric Johanson wrote:

> Sorry for the IDS rant. I'm sure ya'll have heard it before. My only
> real exposure to IDS is via snort.

oh, you know us shmoo, we have absolutely no tolerance for rants :-)

> It works on 'blacklists' of known bad data, or stuff that 'looks bad'.
> This doesn't really help when dealing with new types of attacks. Most
> can't deal with a new strain of outlook worm, or can tell the difference
> between a (new, never seen) rpc exploit, or a simple SYN/SYNACK.

this is true...ish. f'r one thing, lots and lots o' exploits are based on known vulnerabilities, where there's enough information disclosed prior to release of exploit that reliable IDS signatures exist. nimda's my fave example of this. especially for its server attacks, nimda exploited vulnerabilities that were anywhere from 4 months to 2 years old. all the IDS vendors had signatures, which is why nimda was discovered so quickly and so rampantly. in the limited dataset i had -- counterpane customers with network-based intrusion detection systems watching their web farms -- the message counts increased by an order of 100000 within half an hour of the first "public" announcement of nimda (a posting at 9:18 east coast time on 18 sept 2001, on the incidents at securityfocus.com mailing list) (not that i was scarred or anything).

so in that particular instance, signature based IDS was certainly highly effective in letting you know that something was up.

several of the signature based IDS vendors claim that they base their signatures on the vulns rather than on a given exploit -- i don't really know what that means, but i do know that several of them detected this summer's RPC exploits, based on the info in the microsoft announcement, and the behavior of the vulnerable code when tickled, as opposed to waiting to write a signature after an attack had been discovered. we got attack data from ISS far faster than could have been explained if they were strictly writing in response to new attacks.

> I suspect we'll start seeing products in a few years that are focused on
> 'whitelisting' traffic. Anything that doesn't match this pattern, kill
> it.
>
> While this is a pain with lots-o-crappy protocols, it is possible. Does
> anyone know of a product that functions this way?
>

there are a couple of IDS vendors that claim to do this -- they tend to call themselves anomaly detection systems rather than intrusion detection systems -- and i've yet to be convinced about any of them. the only one i can think of is lancope, but rodney probably knows better than i do. the statistical issues are huge, because you've got to be able to characterize normal traffic in real time with huge numbers of packets.

it's more approachable IMNSHO for log data -- marcus ranum's "artificial ignorance" approach -- because you get far less data from a system or application log per actual event than you get numbers of packets. of course i'm not convinced that getting >less< data is an entirely good thing -- see below....

> The other big problem I see with IDS systems is the SNR. Most are soo
> noisy, that they have little real-world value.
>
> I think doing real time reviewing of log entries would have a much better
> chance of returning useful information - - but again, should be based on
> whitelists of known good log entries, not grepping for segfaulting apaches.
>

well, segfaulting apache is actually an important thing to watch for, cos it shouldn't ever happen in a production environment -- right, ben?

the HUGE MASSIVE **bleeping** PROBLEM with using log data for this is that general purpose applications and operating systems completely suck at detecting malicious or unusual behavior. where by "completely suck," i mean:

-- take default installs of solaris, winNT, win2000, cisco IOS, linux

-- get your favorite copy of "armoring solaris," "armoring windows" blah

-- do the exact >>opposite<< of what the docs say -- that is, make the target systems as wide open and vulnerable as possible

-- take your favorite vulnerability auditing tool, or hacker toolbox, and set it to "nuclear apocalypse" level (that is, don't grab banners, run the damn exploits)

-- turn the logging on the boxes to 11

---> the best you'll see is 15% of the total number of attacks, AND what shows up in the logs is invariably >not< the attack that actually rooted your box. as ben clarified for me a lot when i saw him last month, a root compromise that succeeds generally succeeds before the poor exploited daemon has a chance to write anything in the logs. what you see in the logs are traces of what >didn't< work, on the way to getting a memory offset or a phase of the moon just right and getting a rootshell.

[this number based on a summer's worth of lab work i did at counterpane; needs to be repeated with more aggressive hacker tools and rather more tweaking of system logging...]

> Any comments?
>
> As for Rodney's comment: For Network based IDSs, I think we need to not
> look at attacks, but look at abnormal traffic. EG: my web server should
> not do dns lookups. My mail server should not be ftping out. Nobody
> should be sshing/tsing into the DB server in the DMZ. My server should
> only send SYNACKs on port X,Y and Z.
>
> I'm pretty sure that snort could be made to do this - - but it doesn't do
> this today without some major rule foo.

well, anywhere you can build your network to have a really limited set of rules, this approach is easy. hell, don't want my web server doing name resolution or web >browsing< -- so run ipchains or iptables or pf and block everything but the allowed traffic and get the logs and alerts as a fringe benefit.

doing it for the content of web traffic is a little trickier but still completely plausible because web logs are relatively well structured. for some really vague definition of "relatively."

doing it for a general purpose windows server or a seriously multi-user unix environment is a lot harder, cos the range of acceptable behavior is so much larger and harder to parametrize. but hey, that's >>exactly<< what the log analysis list (and web site) are working on...

and is also why i am continually pestering my friends in the open source development community to give me logging that is more likely to catch things like client sessions that never terminate properly (to catch successful buffer overflows) (give me a log when a session opens and >then< when it closes)...and things like significant administrative events (like sig updates in snort, or config changes on my firewall).

had a fascinating talk with microsoft today about getting them to modify their patch installer (the thing that windows update >runs<, not windows update itself) so that in addition to creating a registry key for a patch when it >begins< an install, it also generates a checksum for the new files it's installed when it >finishes<, and then put that into the event log where i can centralize it and monitor it and have a higher level of confidence that the damn patch succeeded... not that i've been thinking about this stuff a lot lately or anything.

tbird
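The request for a log entry when a session opens and another when it closes can be put to work with very little code: pair the two messages and report whatever was opened but never closed. The Python sketch below assumes PAM-style messages (`session opened for user X` / `session closed for user X`) keyed by program name and PID; the function name is hypothetical and real message formats vary by system.

```python
import re

# Assumed PAM-style wording, e.g.
# "sshd[10]: pam_unix(sshd:session): session opened for user alice by (uid=0)"
SESSION = re.compile(
    r"(?P<prog>\w+)\[(?P<pid>\d+)\]: .*session (?P<state>opened|closed) "
    r"for user (?P<user>\S+)")


def unterminated_sessions(lines):
    """Return {(program, pid): user} for sessions opened but never closed."""
    open_sessions = {}
    for line in lines:
        m = SESSION.search(line)
        if m is None:
            continue
        key = (m["prog"], m["pid"])
        if m["state"] == "opened":
            open_sessions[key] = m["user"]
        else:
            open_sessions.pop(key, None)
    return open_sessions


log = [
    "sshd[10]: pam_unix(sshd:session): session opened for user alice by (uid=0)",
    "sshd[11]: pam_unix(sshd:session): session opened for user bob by (uid=0)",
    "sshd[10]: pam_unix(sshd:session): session closed for user alice",
]
for (prog, pid), user in unterminated_sessions(log).items():
    print(f"{prog}[{pid}] session for {user} never closed")
```

A session that stays open is not proof of a successful overflow, of course; it is simply a cheap signal worth a closer look.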

This is my attempt at compiling a 'top list' of audit trails left behind after intrusions in which the intruders try to cover their tracks but don't do a good job. In short, a normal UNIX system keeps a lot of audit trails; almost all of them can be defeated, but only with some effort, which most intruders don't make.

"The syslog daemon is a very versatile tool that should never be overlooked under any circumstances. The facility itself provides a wealth of information regarding the local system that it monitors.

"However, what happens when the system it's monitoring gets compromised?

"When a system becomes compromised, and the intruder obtains elevated root privileges, he now has the ability, as well as the will, to trash any and all evidence leading up to the intrusion, on top of erasing anything else thereafter, including other key system files.

"That's where remote system logging comes in, and it's real super-easy to set up..."

Related Stories:

Linux Journal: Stealthful Sniffing, Intrusion Detection and Logging (Sep 16, 2002)

Crossnodes: Use Snort for Lightweight Intrusion Detection (Jul 15, 2002)

Linux and Main: Preventing File Limit Denials of Service (Jul 10, 2002)

LinuxSecurity.com: Flying Pigs: Snorting Next Generation Secure Remote Log Servers over TCP (Jun 06, 2002)

December 23, 2000 (SANS)

There may be more elaborate third-party proprietary solutions for log consolidation, but the syslog capability within UNIX is simple and ubiquitous on UNIX platforms. Unless noted otherwise, this paper deals specifically with the Sun Solaris environment.

This paper is intended to assist a data center manager in setting up a centralized syslog server. There are a variety of commercial packages that deal with security and troubleshooting; however the use of the syslog facilities is common to all UNIX systems and most network equipment. The configurations defined here are tested in the Solaris 8 environment.
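The stock syslogd already does all of this; purely to show what a central log host does with each incoming UDP datagram, here is a minimal Python sketch. It decodes the leading `<PRI>` value back into facility (PRI // 8) and severity (PRI % 8) and appends the message, tagged with the sender's address, to a single file. The port 5514 (chosen so no root privileges are needed, instead of the standard 514) and the file name are hypothetical.

```python
import re
import socket

PRI = re.compile(rb"^<(\d{1,3})>(.*)$", re.DOTALL)


def parse_datagram(data):
    """Return (facility, severity, text) for a syslog datagram, or None."""
    m = PRI.match(data)
    if m is None:
        return None
    pri = int(m.group(1))
    text = m.group(2).decode("utf-8", "replace").rstrip("\x00\r\n")
    return pri // 8, pri % 8, text


def serve(port=5514, logfile="central.log"):
    """Receive UDP syslog forever and append each message to one file."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        with open(logfile, "a", encoding="utf-8") as out:
            while True:
                data, (addr, _port) = sock.recvfrom(8192)
                parsed = parse_datagram(data)
                if parsed is None:
                    continue  # not a syslog message; ignore it
                facility, severity, text = parsed
                out.write(f"{addr} {facility}.{severity} {text}\n")
                out.flush()
```

Running `serve()` on the log host and pointing clients at UDP port 5514 would collect everything in one place; the clocks of all hosts still need to be synchronized (for example with NTP) for the merged file to make sense.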

References:

[1] "Log Consolidation with syslog" by Donald Pitts, December 23, 2000, http://www.sans.org/infosecFAQ/unix/syslog.htm

[2] "Understanding and using the Network Time Protocol" by Ulrich Windl, et al., http://www.eecis.udel.edu/~ntp/ntpfaq/NTP-a-faq.htm

Analysis

"Automated Analysis of Cisco Log Files", Copyright © 1999, Networking Unlimited, Inc. All Rights Reserved, http://www.networkingunlimited.com/white007.html

"An Approach to UNIX Security Logging", Stefan Axelsson, Ulf Lindqvist, Ulf Gustafson, Erland Jonsson, in Proceedings of the 21st National Information Systems Security Conference, pp. 62-75, Oct. 5-8, 1998, Crystal City, Arlington, VA, USA. Available in PostScript and PDF.

"A Comparison of the Security of Windows NT and UNIX", Hans Hedbom, Stefan Lindskog, Stefan Axelsson, Erland Jonsson, presented at the Third Nordic Workshop on Secure IT Systems, NORD-SEC'98, 5-6 November 1998, Trondheim, Norway. Available in PDF.

Download: http://members.xoom.com/chaomaker/linux/xlogmonitor.tgz

Homepage: http://members.xoom.com/chaosmaker/linux/

XlogMonitor is a tool for monitoring the Linux system logs. It offers monitoring of standard logfiles like /var/log/messages or /var/log/syslog, as well as icmpinfo logfiles and memory consumption.

[July 16, 1999] Autobuse Grant Taylor

Autobuse is a Perl daemon that identifies probes and similar events in logfiles and automatically reports them via email. It is, in a way, the opposite of logcheck: autobuse identifies known badness and deals with it automatically, while logcheck identifies known goodness and leaves you with the rest.

Download: http://www.picante.com/~gtaylor/download/autobuse/
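The difference between the two approaches, which is also the difference between signature matching and Marcus Ranum's "artificial ignorance" idea, fits in a few lines of Python. In the sketch below the pattern lists are hypothetical: the first function drops everything matching a known-good pattern and reports the rest (the logcheck approach), while the second reports only lines matching a known-bad pattern (the autobuse approach).

```python
import re

# Hypothetical "known good" patterns (logcheck-style ignore list).
IGNORE = [re.compile(p) for p in (
    r"CRON\[\d+\]: \(root\) CMD",
    r"sshd\[\d+\]: Accepted publickey for \w+ from",
)]

# Hypothetical "known bad" patterns (autobuse-style reporting list).
ALERT = [re.compile(p) for p in (
    r"Failed password for (invalid user )?\S+ from",
)]


def artificial_ignorance(lines):
    """Discard everything known to be normal; whatever is left is reported."""
    return [line for line in lines if not any(p.search(line) for p in IGNORE)]


def known_badness(lines):
    """Report only lines matching a known-bad signature."""
    return [line for line in lines if any(p.search(line) for p in ALERT)]


sample = [
    "CRON[1]: (root) CMD (run-parts /etc/cron.hourly)",
    "sshd[2]: Accepted publickey for alice from 10.0.0.1",
    "sshd[3]: Failed password for root from 10.0.0.9",
    "kernel: I/O error on sda",
]
print(artificial_ignorance(sample))  # the failed login and the kernel I/O error
print(known_badness(sample))         # only the failed login
```

With these sample lines only the "ignorance" filter surfaces the kernel I/O error, because nobody wrote a signature for it; the price is that the ignore list must be maintained until the remaining output is small enough to read.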

Intrusion Detection

Splunk, which approaches network management by helping IT staff find the proverbial needle in a haystack, says 35,000 people have downloaded its search engine since it launched in August 2005. The company's Splunk Professional search software filters through all the logs and other data generated by IT systems, devices and applications so problems can be found and fixed faster, according to the company. It is priced at $2,500 for an annual license.

LogLogic also attempts to enhance network troubleshooting by capturing logs from all of a corporation's hardware and software in what it calls a log-management intelligence platform. Delivered as an appliance, LogLogic lets customers analyze and store log data and generate reports on it for compliance and risk mitigation, company officials say. The LogLogic Compliance Suite starts at $10,000.

Log data more relevant

While he doesn't see log management falling under the definition of network management, RedMonk's Governor says companies such as LogLogic, Splunk and others are making log data more relevant for network managers.

"Network management tends to be real time; log management is after the fact - it's more about looking at what happened and analyzing that," Governor says. "These companies are making log management more of a real-time function, and then it becomes more valuable. It's moving from being a subset of security management to more of an application-management function."

Another company, GroundWork Open Source Solutions, is positioning its IT monitoring tool as costing a fraction of what commercial products go for. GroundWork Monitor Professional, based on open source components, including Nagios, RRDTool and MySQL, gives customers a central point for monitoring applications, databases, servers and network equipment, officials say.

GroundWork Monitor Professional costs about $16,000 for an annual subscription and is installed at "hundreds of enterprises," according to company officials.

Recommended Links


Softpanorama Recommended

Top articles

Sites

Open Directory - Computers Software Internet Site Management Log Analysis

- Commercial (121)

- Freeware

freshmeat.net Browse project tree - Topic Internet Log Analysis

[PPT] Unix Tools for Web log Analysis

Logwatch

www2.logwatch.org

Logwatch is a customizable log analysis system. Logwatch parses through your system's logs for a given period of time and creates a report analyzing areas that you specify, in as much detail as you require. Logwatch is easy to use and will work right out of the package on most systems.

Splunk

[Apr. 17, 2006] Splunk Welcome

Splunk is search software that imitates Google search engine functionality on logs. It can be considered the first specialized log search engine.

It can correlate some alerts:

[Feb 16, 2006] Splunk, Nagios partner on open-source systems-monitoring tools - Computerworld, by Todd R. Weiss


Harmonious Splunk - Forbes.com

Splunk's corporate-network search tool, released this month, aims to reduce the amount of time it takes datacenter administrators to diagnose problems across a server system. And with playful, techie-familiar terms on its Web site--from "borked" to "whacked"--the company's marketing approach seems oddly appropriate.

"A bunch of us came together three years ago to build a search engine for machine-generated data and make sense of it in real-time--much the same way Web search engines make sense of data on the Internet," says Michael Baum, the company's "chief executive Splunker."

Sound like something Google (nasdaq: GOOG) might do? It should--Splunk's core leadership team has worked for many of the Internet's most famous search companies, from Infoseek to Yahoo! (nasdaq: YHOO).

When IT systems fail, troubleshooters are often forced to manually parse server logs to find the glitch. Depending on the complexity of the system--and the problem--this can take hours or days, costing companies both in lost revenue and IT staff time.

Many IT specialists call this "spelunking"--mining through cavernous log files across a server system that can potentially add up to millions of pages of poorly formatted text.

The answer, Baum says, is Splunk, a browser-based search tool that mines a virtually unlimited amount of data from log files in real-time and allows system administrators to find trouble points across a network of servers from a single point of entry.

Baum says Splunk differs from traditional log-file analysis software in that it can digest data from virtually any source, ranging from Web applications running on a Sun Microsystems (nasdaq: SUNW) machine to database logs on a Dell (nasdaq: DELL) server to corporate voice-over-Internet protocol phone networks--even systems that haven't been created yet.

"We realized that we needed to be able to take any stream of data--anything--and be able to index it and allow people to search it," Baum says. The result is universal event processing, which Baum calls a "much more intelligent way of cutting through the hundreds of gigabytes of data that people see in their datacenter every day."

Instead of copying log file data directly into Splunk's database, the software uses algorithms to analyze and standardize the information so administrators can search and link processes from systems the way they're used to searching for things on the Web. Baum says this is especially practical for e-commerce datacenters, where customers often seamlessly bridge dozens of servers on their way from the homepage to checkout and order fulfillment.

Baum says Splunk also embraces collaboration, from its open source code base to a network of "Splunkers," who share information about event analysis on the company's Web site. Corporate versions of the software can also be configured to communicate securely in a peer-to-peer manner, so partner organizations can share information to track incidents with no geographical boundaries.

Also nontraditional is Splunk's approach to marketing, using terms such as "haxx0rd"--a reference to computer hackers--that resonate more with technical-support specialists than a company's vice president of sales. Some material is even racy: Splunk T-shirts urge users to "Take the 'sh' out of IT."

"All of our marketing and imaging is targeted at trying to be the brand that can try to win the hearts and the souls of systems administrators," Baum says.

While Baum says he can't yet disclose any organizations that use the product, he says thousands of people have downloaded the software, and the response has been positive. Users can download Splunk for free and companies can purchase Splunk Professional, a premium service that includes technical support and advanced features.

"We're a company that's building our whole business from a grassroots standpoint, trying to get systems administrators to download and try our software, even at home, and then bring it into work--we're building from the ground up rather than from the top down," he says.

Baum says Splunk plans to grow the same way search companies like Google did, starting with a simple, effective tool and expanding it to include more features over time. And if Splunk works as well at de-"borking" as Baum says it does, his strategy might just work.

Perl-Based Tools

Devialog by Jeff Yestrumskas

Perl-based.

About:

devialog is a behavior/anomaly/signature-based syslog intrusion detection system which can detect new, unknown attacks. It fits comfortably in a heterogeneous Unix/Linux/*BSD environment at the core of a central syslog server. devialog generates its own signatures and acts upon anomalies as configured by the system administrator. In addition, devialog can function as a traditional syslog parsing utility in which known signatures trigger actions.

Release focus: Minor bugfixes
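The description does not say how devialog builds its signatures, so the following is only a minimal Python sketch of the general technique: learn "normal" messages by generalizing their variable fields (here numbers, IPv4 addresses and hex values, an arbitrary choice) into regular expressions, then flag any message that matches none of the learned signatures. All names are hypothetical.

```python
import re

# Tokens treated as variable when generalizing a message into a signature
# (an assumption for illustration; devialog's own rules are not shown here).
VARIABLE = re.compile(
    r"(?P<ip>\b\d{1,3}(?:\.\d{1,3}){3}\b)"
    r"|(?P<hex>\b0x[0-9a-fA-F]+\b)"
    r"|(?P<num>\b\d+\b)"
)
GENERIC = {
    "ip": r"\d{1,3}(?:\.\d{1,3}){3}",
    "hex": r"0x[0-9a-fA-F]+",
    "num": r"\d+",
}

def make_signature(message):
    """Escape the literal text of a message and replace variable tokens by generic patterns."""
    parts, last = [], 0
    for m in VARIABLE.finditer(message):
        parts.append(re.escape(message[last:m.start()]))
        parts.append(GENERIC[m.lastgroup])   # name of the alternative that matched
        last = m.end()
    parts.append(re.escape(message[last:]))
    return "".join(parts)

class AnomalyDetector:
    """Learn signatures from known-good messages; anything matching none is an anomaly."""

    def __init__(self):
        self.signatures = {}                 # signature text -> compiled regex

    def learn(self, messages):
        for message in messages:
            sig = make_signature(message)
            if sig not in self.signatures:
                self.signatures[sig] = re.compile(sig)

    def is_anomaly(self, message):
        return not any(rx.fullmatch(message) for rx in self.signatures.values())
```

Training on a period of known-good traffic and then calling `is_anomaly` on new lines gives a crude anomaly detector; hand-written patterns added to `signatures` would give the traditional mode in which known signatures trigger actions.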

Changes:

Bug fixes include better handling of lines with some special characters. A timing error within alert generation was fixed: in specific high-volume log situations, alerts were sometimes sent inadvertently when a new log line arrived just as an alert was being sent out. Altered signature generation creates more exact regular expressions.

Epylog by Konstantin Ryabitsev

Python-based.

Epylog is a log notifier and parser that periodically tails system logs on Unix systems, parses the output in order to present it in an easily readable format (parsing modules currently exist only for Linux), and mails the final report to the administrator. It can run daily or hourly. Epylog is written specifically for large clusters where many systems log to a single loghost using syslog or syslog-ng.
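Periodic tools of this kind must remember how far they got in each log between runs. Epylog's own code is not reproduced here; the following minimal Python sketch shows the usual offset-tracking approach, the same idea as the logtail utility. The JSON state file and the size-based rotation check are simplifying assumptions (a more robust version would also compare inode numbers).

```python
import json
import os

def read_new_lines(log_path, state_path):
    """Return complete lines appended to log_path since the previous call.

    The byte offset already processed is kept in state_path (a small JSON file).
    If the log is now shorter than that offset, it is assumed to have been
    rotated or truncated and is read from the beginning again.
    """
    offset = 0
    if os.path.exists(state_path):
        with open(state_path, encoding="utf-8") as fh:
            offset = json.load(fh).get("offset", 0)
    if os.path.getsize(log_path) < offset:
        offset = 0
    with open(log_path, "rb") as fh:
        fh.seek(offset)
        data = fh.read()
    end = data.rfind(b"\n") + 1            # leave a trailing partial line for next time
    with open(state_path, "w", encoding="utf-8") as fh:
        json.dump({"offset": offset + end}, fh)
    return data[:end].decode("utf-8", errors="replace").splitlines()
```

Run from cron, each invocation sees only the lines written since the last run, which can then be parsed and mailed as a report.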

LooperNG by Mohit Muthanna

Perl-based.

LooperNG is an intelligent event routing daemon. Primarily used for Network Management, this application can be used to accomplish a variety of tasks related to logging and alerting such as trap forwarding/exploding, event enrichment, converting event formats (syslog->SNMP, SNMP->flatfile, syslog->Netcool), etc. It uses a system of input and output modules to interface with the event sources/sinks and a "rules file" to control the flow of the events.
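The text does not document LooperNG's module interface or rules-file syntax, so the sketch below is hypothetical: a Python illustration of the architecture it describes, with an input module that turns a syslog line into an event, ordered rules that match a field against a regular expression and optionally enrich the event, and named output modules that receive it. The simplified syslog format and the first-match-wins policy are assumptions.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Event = Dict[str, str]

# Simplified syslog input format assumed here: "<PRI>host tag: message".
SYSLOG = re.compile(r"^<(?P<pri>\d+)>(?P<host>\S+) (?P<tag>[^:]+): (?P<msg>.*)$")

def syslog_input(line: str) -> Event:
    """Input module: turn a syslog line into an event dictionary."""
    m = SYSLOG.match(line)
    if m is None:
        return {"msg": line}
    event = m.groupdict()
    pri = int(event["pri"])
    # Syslog PRI encodes facility * 8 + severity.
    event["facility"], event["severity"] = str(pri // 8), str(pri % 8)
    return event

def flatfile_output(path: str) -> Callable[[Event], None]:
    """Output module: append events as key=value lines to a flat file."""
    def write(event: Event) -> None:
        with open(path, "a", encoding="utf-8") as fh:
            fh.write(" ".join(f"{k}={v}" for k, v in sorted(event.items())) + "\n")
    return write

@dataclass
class Rule:
    key: str                  # event field to test
    pattern: str              # regular expression applied to that field
    outputs: List[str]        # names of output modules that receive the event
    enrich: Dict[str, str] = field(default_factory=dict)  # fields added on a match

class Router:
    """Apply rules in order; the first matching rule decides where the event goes."""

    def __init__(self, rules: List[Rule], outputs: Dict[str, Callable[[Event], None]]):
        self.rules = [(rule, re.compile(rule.pattern)) for rule in rules]
        self.outputs = outputs

    def route(self, event: Event) -> bool:
        for rule, rx in self.rules:
            if rx.search(event.get(rule.key, "")):
                enriched = {**event, **rule.enrich}
                for name in rule.outputs:
                    self.outputs[name](enriched)
                return True
        return False
```

Converting formats (for example syslog to a flat file) then amounts to registering a different output module under the name a rule refers to.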

Logrep: a logfile extraction and reporting system by Tevfik Karagulle

Logrep is a secure multi-platform framework for the collection, extraction, and presentation of information from various log files. It features HTML reports, multidimensional analysis, overview pages, SSH communication, and graphs, and supports logs from 18 popular systems, including Snort, Squid, Postfix, Apache, Sendmail, syslog, ipchains, iptables, NT event logs, Firewall-1, wtmp, xferlog, Oracle listener and Pix.

- Download: http://www.l0t3k.net/tools/Loganalysis/LogrepSource-1.4.2.tar.gz

- License: GNU General Public License

- Platform(s): Windows NT/2000, Linux

Swatch

Swatch monitors and filters log files and executes a specified action depending on the patterns found in the log. It is based on Perl but is actually designed for users who do not know Perl. It makes no sense for users who do know Perl.
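The pattern/action idea behind Swatch is simple enough to express directly in a scripting language. The Python sketch below is not Swatch and does not read Swatch's configuration format; it just pairs regular expressions with actions and, as a design choice made here, runs only the first matching action for each line. The helper names are hypothetical.

```python
import re
import subprocess
from typing import Callable, Iterable, List, Tuple

Action = Callable[[str], None]

def run_command(argv: List[str]) -> Action:
    """Action factory: run an external command with the matching line appended as its last argument."""
    def action(line: str) -> None:
        subprocess.run([*argv, line], check=False)
    return action

def watch(lines: Iterable[str], rules: List[Tuple[str, Action]]) -> None:
    """For each line, run the action of the first rule whose pattern matches."""
    compiled = [(re.compile(pattern), action) for pattern, action in rules]
    for line in lines:
        for rx, action in compiled:
            if rx.search(line):
                action(line)
                break
```

Feeding `watch` from a reader that returns newly appended lines (for example in a loop with a short sleep) turns it into a continuous monitor.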

Multipurpose plug-in based analyzers

Sawmill log analyzer; log file analysis; log analysis program

Sawmill is a world-class web log analyzer, but it's more than just that. Sawmill can analyze all of your log files, from all of your servers. Sawmill can analyze:

- Streaming media logs: RealPlayer, Microsoft Media, and Quicktime Streaming Server.

- FTP logs from most FTP servers.

- Proxy logs from most proxy servers.

- Firewall logs from most firewalls.

- Cache logs from most cache servers.

- Network logs: Cisco PIX and IOS, tcpdump, and more.

- Mail logs: SMTP, POP, IMAP from most mail servers.

- And dozens of others!

Sawmill is a universal log analysis tool; it's not limited to web logs. You can use it to track usage statistics on your proxy and cache servers. You can use it to bill by bandwidth. You can use it to monitor all of the TCP/IP traffic on your internal network, or through your firewall, to see who's eating all that expensive bandwidth you just bought. As an ISP/ASP, you'll find dozens of uses for Sawmill beyond just providing your customers with the best statistics.

And that's not all! Sawmill lets you define your own log format, using a very powerful format description mechanism that can be used to describe just about any possible log format. Do you have your own special logs that you'd like to analyze? Have you written a custom server that no other tool knows anything about? In a few minutes, you can have Sawmill processing those files just as easily as it processes web logs (in fact, we'll happily write the log format descriptor file, and include it with the next version of Sawmill). All the advantages it has in web statistics, it has with all other logs -- you'll get unprecedented detail and power for your statistics, no matter where the log files came from.
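Sawmill's actual format description language is not shown here. As a hypothetical illustration of the idea, the Python sketch below compiles a tiny descriptor, in which `{name}` marks a field and everything else is literal text, into a regular expression and returns a parser for matching lines. The `{name}` and `{name:int}` syntax is invented for this example.

```python
import re

FIELD = re.compile(r"\{(\w+)(?::(int))?\}")

def compile_format(descriptor):
    """Compile a descriptor such as 'user={user} bytes={bytes:int}' into a line parser.

    Each {name} matches one run of non-blank characters; {name:int} matches an
    integer and converts it. Everything else in the descriptor is literal text.
    """
    converters, parts, pos = {}, [], 0
    for m in FIELD.finditer(descriptor):
        parts.append(re.escape(descriptor[pos:m.start()]))
        name, kind = m.group(1), m.group(2)
        if kind == "int":
            parts.append(rf"(?P<{name}>-?\d+)")
            converters[name] = int
        else:
            parts.append(rf"(?P<{name}>\S+)")
        pos = m.end()
    parts.append(re.escape(descriptor[pos:]))
    rx = re.compile("".join(parts))

    def parse(line):
        m = rx.fullmatch(line)
        if m is None:
            return None
        return {k: converters.get(k, str)(v) for k, v in m.groupdict().items()}

    return parse
```

Once a custom server's log layout is written down as a descriptor, every line becomes a dictionary that the same reporting code can consume, which is the point the paragraph above makes.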

Lire: a pluggable log analyzer that supports over 30 log types.

Most internet services have the ability to log their activity. For example, the Apache web server adds a line of information to a log file for each web page request. Depending on the log format, the line includes information like the page that was requested, the size of the page, which web browser was used, and much more. Your email server produces a similar log file. It contains the email address that sent the email, who received it, how large it was, etc. As a matter of fact, all internet services have this capability.
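Apache's mod_log_config defines the Common Log Format as `%h %l %u %t "%r" %>s %b`; the Combined Log Format appends the `Referer` and `User-agent` request headers. A minimal Python parser for both, assuming well-formed lines without escaped quotes inside fields, might look like this:

```python
import re
from datetime import datetime

# Common Log Format:  %h %l %u %t "%r" %>s %b
# Combined Log Format adds: "%{Referer}i" "%{User-agent}i"
LOG_LINE = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?$'
)

def parse_line(line):
    """Parse one Common or Combined Log Format line into a dict, or return None."""
    m = LOG_LINE.match(line.rstrip("\n"))
    if m is None:
        return None
    rec = m.groupdict()
    rec["time"] = datetime.strptime(rec["time"], "%d/%b/%Y:%H:%M:%S %z")
    rec["status"] = int(rec["status"])
    rec["size"] = 0 if rec["size"] == "-" else int(rec["size"])   # '-' means no body
    # The request line is normally "METHOD /path PROTOCOL".
    parts = rec["request"].split()
    rec["method"] = parts[0] if parts else ""
    rec["path"] = parts[1] if len(parts) > 1 else ""
    return rec
```

For Common Log Format lines the `referer` and `agent` fields are simply `None`, so the same parser serves both formats.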

These log files contain an enormous amount of information, but the format is hard to interpret by hand. You need a tool that summarizes the data to help you analyze the content. For web services, this means top-X lists of web browsers, domains and platforms, and a plot of hits versus time. Most third-party hit counters show these kinds of overviews.
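A minimal Python sketch of such summaries, assuming each parsed log record is a dictionary with `host`, `agent` and `time` (a `datetime`) keys: `collections.Counter` produces the top-X lists, and truncating timestamps to the hour gives a hits-versus-time series, rendered here as a crude text chart. The function names and record layout are assumptions.

```python
from collections import Counter

def summarize(records, top=10):
    """Build top-X lists and an hourly hits-versus-time series.

    Each record is assumed to be a dict with 'host', 'agent' and 'time'
    (a datetime) keys, e.g. the output of a web-log line parser.
    """
    records = list(records)              # iterated three times below
    hosts = Counter(r["host"] for r in records)
    agents = Counter(r["agent"] for r in records)
    per_hour = Counter(r["time"].replace(minute=0, second=0, microsecond=0) for r in records)
    return {
        "top_hosts": hosts.most_common(top),
        "top_agents": agents.most_common(top),
        "hits_per_hour": sorted(per_hour.items()),
    }

def text_plot(hits_per_hour, width=50):
    """Render the hourly series as a text bar chart scaled to `width` characters."""
    peak = max((n for _, n in hits_per_hour), default=0)
    lines = []
    for hour, n in hits_per_hour:
        bar = "#" * (round(n * width / peak) if peak else 0)
        lines.append(f"{hour:%Y-%m-%d %H:00} {bar} {n}")
    return lines
```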

Tools are available to analyze the content of most log file types, and Lire is one of them. But Lire is different from most other tools: it is an integrated system that can analyze not just one type of internet service, but many. The reports that summarize the interesting information from the log files are plug-ins, and you can add custom report types yourself.

Lire can be used in different ways. You can run it from the command line, or install a crontab job that sends you reports by email. In the former case you can choose the output format of the report; current output formats include plain text, HTML, DocBook, PDF and LogML. In the crontab case, the only format at the moment is plain text.

Currently, Lire can analyze the log files of the services listed below.

Lire is in full development, currently with three paid people working on it. Support is one of their tasks, so if you have a special request (support for new services, for example) or general support questions, please leave a message on LogReport's SourceForge site.

Commercial support

Welcome to LogReport: Log Reporting for the 21st Century

The LogReport Project is a technology initiative launched nearly two years ago to gather information/best practices on log analysis and produce premium software to perform these tasks. Today the LogReport Project's flagship software product, Lire, provides reliable, extensible solutions to server and network operators around the world. In addition, the LogReport team, a group of international experts, provides world-class professional services, ensuring that our clients have the absolute best solution for their needs.

Email Servers:

- Sendmail

- Postfix

- qmail

- exim

- nms (Netscape Messaging Server)

- ArGoSoft

Message Store:

- Netscape Message Store

- Netscape Messaging Multiplexor

WWW Servers:

- Common Log Format (Apache, IIS, etc.)

- Combined Log Format (Apache, Boa, etc.)

- Referrer

- Apache mod_gzip

- W3C Extended (Microsoft IIS 4.0 & 5.0)

DNS Queries:

- DNS Bind version 8

- DNS Bind version 9

DNS Zones:

- DNS Bind version 8

Firewalls:

- Cisco

- Cisco PIX

- ipchains

- ipfilter

- iptables

- WELF

- Watchguard

FTP Servers:

- xferlog (WU-FTPD, ProFTPD, etc)

- IIS FTP

Print Servers:

- CUPS

- LPRng

Proxies:

- Squid

- WELF

- MS ISA

Databases:

- MySQL

- PostgreSQL

Syslogs:

- BSD-like

- Netscape Messaging Server

- Solaris 8

- Kiwi Syslog Daemon

- Sendmail Switch Log

Dialup Products:

- ISDN Log

Recommended Papers

Sawmill log analyzer; log file analysis; log analysis program

- Easy To Use

- Extensive Documentation

- Live Reports & Graphs

- Package of Powerful Analysis Tools

- Attractive Statistics

- Database Driven

- Advanced User Tracking by WebNibbler(tm)

- Very Fast

- Easy To Install

- Highly Configurable

- Works With a Variety of Platforms

- Processes Almost Any Log File

[Nov 11, 2000] The OutRider Computing Journal: Creating a Log Class in Perl

"One recurrent theme in my job as a database administrator/assistant systems administrator/systems analyst is the need to keep track of what happened on the systems while I wasn't watching. What did the cron job do last night? What did all those spooler daemons do while I was at lunch? In other words, logging. It bothered me that there was a lack of simple tools for doing such a simple, redundant job. So, I set out to build some myself. My systems programming tool of choice is Perl, so that is the language I chose for the project. This journey took me out of my normal routine of straight-line Perl programming and dumped me in the land of Modules and Object Oriented Perl. I'm glad to say it didn't overwhelm me and in fact I found it rather easy to write."

"My first order of business was to take my old standby logging routines and objectify them. I had several concise routines that I would either import into the main package through a use statement or just simply copy/paste depending on my mood and what I was doing. They consisted of four routines: start_logging, stop_logging, restart_logging and log."
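The article's code is Perl and is not reproduced here. As a rough Python analogue, the hypothetical class below wraps the four routines named above in one object that owns the log file handle; the timestamp format and the "logging started/stopped" marker lines are assumptions.

```python
import datetime
import os

class Log:
    """Hypothetical Python analogue of the article's four Perl logging routines."""

    def __init__(self, path):
        self.path = path
        self._fh = None                      # open file handle while logging is active

    def start_logging(self):
        """Open the log file for appending (no-op if already open)."""
        if self._fh is None:
            self._fh = open(self.path, "a", encoding="utf-8")
            self.log(f"logging started (pid {os.getpid()})")

    def stop_logging(self):
        """Write a closing marker and close the file (no-op if not open)."""
        if self._fh is not None:
            self.log("logging stopped")
            self._fh.close()
            self._fh = None

    def restart_logging(self):
        """Close and reopen the file, e.g. after log rotation moved it away."""
        self.stop_logging()
        self.start_logging()

    def log(self, message):
        """Append one timestamped line and flush it immediately."""
        if self._fh is None:
            raise RuntimeError("start_logging() has not been called")
        stamp = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        self._fh.write(f"{stamp} {message}\n")
        self._fh.flush()
```

`restart_logging` closes and reopens the file, which is what a long-running job needs after a log rotation has renamed the old file: the next write creates a fresh file at the original path.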