|

|

Home | Switchboard | Unix Administration | Red Hat | TCP/IP Networks | Neoliberalism | Toxic Managers |

| (slightly skeptical) Educational society promoting "Back to basics" movement against IT overcomplexity and bastardization of classic Unix | |||||||

|

|

As Hamlet observe during a different era "Something is rotten in the state of Denmark." It looks like the field now is ruled by the coalition of "complexity junkies" and "more cores is better" junkies.

Complexity of Kubernetes is such that even "bare-metal" installation of Kubernetes often is an overkill for HPC cluster and leads to waste of resources and slowing down of computations. So "classic" HPC configuration with scheduler still did not reached end of life point and such cluster are still competitive. Kubernetes initially was designed by Google as a load-balancing cluster, not as the HPC cluster.

Also more is not always better. The technical problems of serving, say, over 1K computational nodes from a single shared filesystem are tremendous. Scaling down cluster is often beneficial as due to Amdahl law (see below) almost no jobs benefit from running on, say, 512 cores and higher. And for such jobs there are GPUs, which in this case are a much better deal.

|

|

If "private cloud" solution is used situation is even worse and waste of resources and excessive complexity is higher. While container ecosystem is useful and solves some problems with Linux libraries (library hell) that exists in "classic" HPC clusters, the scope of those problems is small and does not justify losses in productivity and excessive complexity.

Classic HPC environment is based on two main components: common, shared filesystem and HPC scheduler. See for example

In the simplest form cluster is a set of nodes (each node is typical two socket server with higher end multicore CPU; although the high number of cores is not beneficial and even is detrimental for some application, for example for bioinformatics application and they can benefit form high clock speed of 4 core CPUs). For higher number of cores the problem of overheating is acute and requires water cooling solutions.

They are connected via high speed interconnect to server or servers that implement shared filesystem.

Grid computing is a broader term that cluster and typically refers to a set of (not necessary uniform like in cluster) servers connected to common scheduler, for example Sun Grid Engine and common storage. From this point of view HPC is a special case of grid computing

The key for HPC is the implementation of shares storage that allows functioning of a given number of nodes. A lot depends of the doman of application run on the cluster. many application do not require large shared storage to run, or they requre large storage to run but do not use MPI.

Two most common parallel filesystems currently used are Lustra and GPFS.

For larger number of nodes and if MPI is used Infiniband typically is used. For bioinformatics applications which do not use MPI Infiniband is not necessary, but is advisable because it improves the parameters of shared filesystem. GPFS can function directly on Infiniband layer without use of TCP/IP and that is a huge advantage for some applications.

Infiniband is a prerequisite for application that use MPI because of law latency but with the advent of 64 core CPUs (which means 128 core of two socket server and 256 threads if hyper threading is enabled ) the role of MPI to facilitate cross nodes communication is open to review. Truth be told MPI in HPC clusters is one of the most often abused subsystems because many researchers have no clue about optimum number of cores for their application and overspecify the number of cores several times, just because they can.

This situation is called "waiving dead chicken" and researchers working in computational chemistry (or "computational alchemistry;-) are typical members of the group that pracice this kind of abuse of HPC resources. Many of them have no or little clue about applications they use and they can get useful results form thier computations only by chance. Running jobs with large number of cores is a survival mechanism for them, no more no less. In general usefulness of many results in computational chemistry obtained on HPC clusters is subject to review ;-) .

I would like to stress that along with large memory nodes servers with high CPU clock speed (over 4GHz) are also legoitimate member of HPC sluters and shoudl be provided to researchers. Many genomic applications are single threaded and for such applicatins you do not need two socket server -- a single four or eight core CPUs with high clock frequency are much better then multicore monsters that Intel heavily pushed now. Often genomic job are running perfectly well on one socket workstation and buying for them two socket server is complete idiotism, which actually flourishes in modern organizations run by bean counters.

In this sense the current trend toward building clusters with most computational nodes using CPU with close to max available cores is a fallacy. Expensive fallacy. Cluster should be specialized for the set of applications it predominantly handles and for each application sweet spot of the number of cores exists -- this number should the base for selection of the servers for non-MPI jobs. 64 core servers are attractive for PowerPoint presentation but in reality they have several fundamental problems with the architecture of PC-compatible (Intel/AMD) servers.

Heat dissipation of multi-core CPU is a serious engineering challenge. Starting from probably 32 cores only liquid cooling is more or less adequate. And often even it can be inadequate for large loads and one need some special engineering solutions for this problem. Intel is working on such solutions.

Overheating of cores of servers running computationally intensive jobs is a really serious problem for clusters build using compact form factor for servers such as blades from Dell or HP. The compact form factor has inadequate heat dissipation. As the result they overheat, efficiency drops and all gain from additional cores disappear.

For the same reason using VMware for HPC clusters is a fallacy as it tends to increase this effect. The same consideration is application to the servers using in the major cloud providers such as AWS and Azure -- for them too overheating is a problem but it is completely hidden from the view as you do not have access to the server logs.

Parallel programming (like all programming) is as much art as science, always leaving room for major design improvements and performance enhancements. Software and hardware should go hand in hand when it comes to achieving high performance on a cluster. Programs must be written to explicitly take advantage of the underlying hardware. For some algorithms this is possible. For other it is not.

Limiting the size of the cluster helps as in smaller cluster parallel filesystem functions better and provides higher bandwidth to computational nodes.

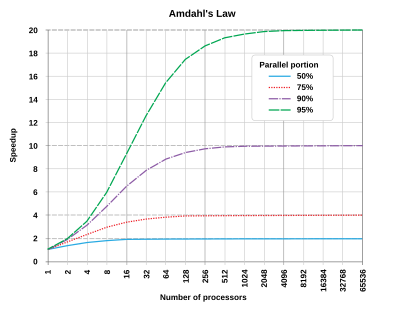

Parallel programming mostly is limited to a certain subclass of computational algorithms Many algorithms can't be made parallel beyond relatively small number of cores (say 4, 8 and 16). Even for those that can be paralyzed efficiently over this limit the time spend per core often increased after a certain number of cores ("saturation"). This effect is connected with so called Amdahl named after one of the "founding fathers" of IBM system/360 Gene Amdahl (thanks to him we have 8 bit byte and byte addressing), and was presented at the AFIPS Spring Joint Computer Conference in 1967. This effect is very pronounced for Accelrys, VASP and several other common commercial programs. Jobs for which often are run by clueless researchers with the excessive number of cores.

| Simplifying, Amhdal law postulates that that in most cases the theoretical speedup from parallelization is limited to 20 times the single thread performance no matter how many CPUs you use. |

Suppose 1/N of the total time taken by a program in a part that can not be parallelized, and the rest (1-1/N) is in the parallelizable part. In this case the speedup always has a distinct "saturation" point and is governed by the following curves (see Amdahl's law - Wikipedia). Simplifying it postulates that that in most cases the theoretical speedup is limited to 20 times the single thread performance:

In theory you could apply an infinite amount of hardware to do the parallel part in zero time, but the sequential part will see no improvements. As a result, the best you can achieve is to execute the program in 1/N of the original time, but no faster. In parallel programming, this fact is commonly referred to as Amdahl's Law.

Amdahl's Law governs the speedup of using N parallel processors on a problem versus using only one serial processor. Speedup is defined as the time it takes a program to execute in serial (with one processor) divided by the time it takes to execute in parallel (with many processors):

T(1)

S = ------

T(j)

Where T(j) is the time it takes to execute the program when using j processors.

The real hard work in writing a parallel program is to make N as large as possible. But there is an interesting twist to it. You normally attempt bigger problems on more powerful computers, and usually the proportion of the time spent on the sequential parts of the code decreases with increasing problem size (as you tend to modify the program and increase the parallelizable portion to optimize the available resources).

| Gigantomania (from Ancient Greek γίγας gigas, "giant" and μανία mania, "madness") is the production of unusually and superfluously large works.[1] Gigantomania is in varying degrees a feature of the political and cultural lives of prehistoric and ancient civilizations (Megalithic cultures, Ancient Egypt, Ancient Rome, Ancient China, Aztec civilization), several totalitarian regimes (Stalin's USSR, Nazi Germany, Fascist Italy, Maoist China, Juche Korea),[1] as well as of contemporary capitalist countries (notably for skyscrapers and shopping malls). |

Carefully hidden fact is that that Gigantomania rules the HPC space. First of all the criteria of measuring HPC cluster productively (petaflops measured using completely artificial for real cluster loads and infinitely scalable Linpack benchmark) is highly suspect and have little on nothing to do with real application running on many clusters.

In this sense TOP500 list is more strongly correlates with the level of influence of military industrial complex on the country leadership, not so much with the progress in building useful clusters (although along with modeling on nuclear explosions there are few application for example related to weather forecasts that benefit from very high parallelization of computations).

The of small clusters (way with less then 64 computational nodes) on which a lot useful work can be done is deprecated and in many organization that are completely eliminated although small clusters allow high bandwidth per computation nodes then larger cluster.

Clusters are built to perform massive computations, and you need to know how fast they are. Standard benchmark based on LINPACK calculations is completely bizarre and false measure in many areas but one: the class of applications with unlimited parallelism.

The real applications usually do not scale above, say 128 cores well and many does not scale above 32 cores. So megaflop value used for large cluster and in Top500 index is just "art for the sake of art" and has very little connection to reality. It is a completely fake measure that suggests that more nodes and more cores are better.

That far far from being true. If your application does not scale above say 16 node that only thing you can do is to run many instances of such application in parallel. Of course, this can have great value as you can put more dots on some curve in a single day running computation is slightly different sets of data filling the chosen interval that you need to explore. But you will never get a better duration of a single computation then of much more modest cluster, that cost tiny fraction (say 1/10th) of the supercluster cost.

IBM is one firm that propagated this nonsense with LINPACK as the key benchmark of cluster performance. This test is fake in a sense that it assumes unlimited parallelism:

Cluster is generally is as good as each single computational node and megoflops tent to push designed toward larger amount of cores per CPU, which is completely wrong approach. It's common to think that the processor frequency determines performance. While this is true to a certain extent, it is of little value in comparing processors from different vendors or even different processor families from the same vendor because different processors do different amounts of work in a given number of clock cycles. This was especially obvious when we compared vector processors with scalar processors (see Part 1). Speed of memory also matter.

A more natural way to compare performance is to run some standard tests. Over the years a test known as the LINPACK benchmark has become a gold standard when comparing performance of HPC clusters. It was written by Jack Dongarra more than a decade ago and is still used by top500.org (see Resources for a link).

This test involves solving a dense system of N linear equations, where the number of floating-point operations is known (of the order of N^3). This test is well suited to speed test computers meant to run scientific applications and simulations because they tend to solve linear equations at some stage or another.

The standard unit of measurement is the number of floating-point operations or flops per second (in this case, a flop is either an addition or a multiplication of a 64-bit number). The test measures the following:

- Rpeak, theoretical peak flops. In a June 2005 report, IBM Blue Gene/L clocks at 183.5 tflops (trillion flops).

- Nmax, the matrix size N that gives the highest flops. For Blue Gene this number is 1277951.

- Rmax, the flops attained for Nmax. For Blue Gene, this number is 136.8 tflops.

To appreciate these numbers, consider that IBM BlueGene/L can compute in one second that task that on your home computer may take up to five days.

This phrase "IBM BlueGene/L can compute in one second that task that on your home computer may take up to five days" is complete nonsense in view of the Amdahl law. Probably such applications exist, but they are very rare. Here IBM is simply dishonest.

Clearly, it is hard to maintain a very large cluster. It requires special subsystems such as cluster manager (For example Bright Computing cluster mananger). Everything becomes a problem. It is not convenient to copy files to every node, set up SSH and MPI on every node that gets added, make appropriate changes when a node is removed, and so on.

For small clusters such problem are several magnitude less. Moreover for small cluster you can reuse some integrated solution such as Rocks, which provides most of the things we need for the cluster and automate some of the typical tasks. When it comes to managing a cluster in a production environment with a large user base, job scheduling and monitoring are crucial.

They include

Sun grid engine (now Oracle grid engine) can be used a powerful scheduler for computational clusters. Another popular scheduling system is OpenPBS, and Torque. Using it you can create queues and submit jobs on them. Like SGE both have open source versions along with the commercial versions.

You can also create sophisticated job-scheduling policies.

All "grid" schedulers let you view executing jobs, submit jobs, and cancel jobs. It also allows control over the maximum amount of CPU time available to a particular job, which is quite useful for an administrator.

An important aspect of managing clusters is monitoring, especially if your cluster has a large number of nodes. Several options are available, such as Nagios or Ganglia

Ganglia has a Web-based front end and provides real-time monitoring for CPU and memory usage; you can easily extend it to monitor just about anything. For example, with simple scripts you can make Ganglia report on CPU temperatures, fan speeds, etc.

|

|

Switchboard | ||||

| Latest | |||||

| Past week | |||||

| Past month | |||||

Jun 30, 2021 | www.theregister.com

Companies rely on estimates that are often wrong, says CNCF Tim Anderson Tue 29 Jun 2021 // 16:28 UTC

20

A report on Kubernetes expenditure from the Cloud Native Computing Foundation (CNCF), in association with the FinOps Foundation, shows that costs are rising and companies struggle to predict them accurately.

The new report is based on a survey of the CNCF and FinOps Foundation communities and although it had only 195 respondents, there was a "strong enterprise representation".

Spending on Kubernetes was up, according to the survey, with 67 per cent reporting an increase of 20 per cent or more over the last 12 months, and 10 per cent spending more than $1m per month on their deployments.

Kubernetes, as Reg readers know, is a means of orchestrating containerised applications, so its actual cost is mainly that of the resources it consumes: over 80 per cent compute (such as VMs on AWS, GCP or Azure) and a bit on memory, storage, networking and so on.

What's the FinOps Foundation? The FinOps Foundation was set up in 2019 by Cloudability, a cloud financial management company now part of Aptio.In 2020 it became part of the Linux Foundation, alongside Kubernetes overseer Cloud Native Computing Foundation, and aims to "advance the discipline of cloud financial management." Sponsors include Aptio, Atlassian, VMware and Google Cloud.

Basically, it is a kind of alliance of those hoping to manage and reduce their cloud spend, including Nationwide, Spotify and Sainbury's, with companies that want to sell you cost management solutions, though the Foundation has promised to focus on "best practices beyond vendor tooling."

The cost of such things as training IT staff and maintaining complex deployments was sadly not a focus of the report. Rather, its key point was that many organisations have only a loose grip on what they are spending.

"The vast majority of respondents either do not monitor Kubernetes spending at all (24 per cent), or they rely on monthly estimates (44 per cent)," the report said.

May 31, 2021 | hardware.slashdot.org

Posted by EditorDavid on Monday May 31, 2021 @07:34AM from the in-the-chips dept. Slashdot reader 4wdloop shared this report from NVIDIA's blog, joking that maybe this is where all NVIDIA's chips are going:

It will help piece together a 3D map of the universe, probe subatomic interactions for green energy sources and much more. Perlmutter, officially dedicated Thursday at the National Energy Research Scientific Computing Center (NERSC), is a supercomputer that will deliver nearly four exaflops of AI performance for more than 7,000 researchers. That makes Perlmutter the fastest system on the planet on the 16- and 32-bit mixed-precision math AI uses. And that performance doesn't even include a second phase coming later this year to the system based at Lawrence Berkeley National Lab.

More than two dozen applications are getting ready to be among the first to ride the 6,159 NVIDIA A100 Tensor Core GPUs in Perlmutter, the largest A100-powered system in the world. They aim to advance science in astrophysics, climate science and more. In one project, the supercomputer will help assemble the largest 3D map of the visible universe to date. It will process data from the Dark Energy Spectroscopic Instrument ( DESI ), a kind of cosmic camera that can capture as many as 5,000 galaxies in a single exposure. Researchers need the speed of Perlmutter's GPUs to capture dozens of exposures from one night to know where to point DESI the next night. Preparing a year's worth of the data for publication would take weeks or months on prior systems, but Perlmutter should help them accomplish the task in as little as a few days.

"I'm really happy with the 20x speedups we've gotten on GPUs in our preparatory work," said Rollin Thomas, a data architect at NERSC who's helping researchers get their code ready for Perlmutter. DESI's map aims to shed light on dark energy, the mysterious physics behind the accelerating expansion of the universe.

A similar spirit fuels many projects that will run on NERSC's new supercomputer. For example, work in materials science aims to discover atomic interactions that could point the way to better batteries and biofuels. Traditional supercomputers can barely handle the math required to generate simulations of a few atoms over a few nanoseconds with programs such as Quantum Espresso. But by combining their highly accurate simulations with machine learning, scientists can study more atoms over longer stretches of time. "In the past it was impossible to do fully atomistic simulations of big systems like battery interfaces, but now scientists plan to use Perlmutter to do just that," said Brandon Cook, an applications performance specialist at NERSC who's helping researchers launch such projects. That's where Tensor Cores in the A100 play a unique role. They accelerate both the double-precision floating point math for simulations and the mixed-precision calculations required for deep learning.

Dec 10, 2020 | blog.centos.org

Dave-A says: December 9, 2020 at 7:34 am

My (very large, aerospace) employer is dropping RHEL for Oracle Linux, via in-place upgrade. That seems to be more and more a good idea... Is Scientific still out there as a RHEL clone?liam nal says: December 9, 2020 at 8:15 amScientific Linux is only available for EL7. They decided against doing anymore builds for major releases for whatever reason. If you are interested in a potential successor, keep your eyes out RockyLinux.V says: December 9, 2020 at 8:30 amNo, Scientific was discontinued in April 2019. I believe they decided to use CentOS 8.0 instead of releasing a new version of Scientific.dovla091 says: December 9, 2020 at 11:45 amwell I guess they will start the project again...Jan-Albert van Ree says: December 9, 2020 at 5:04 pmOh how I hope this to be true ! We have several HPC clusters and have been using SL5, SL6 and SL7 for the last 10 years and are about to complete the migration to CentOS8 in January. We will complete that migration, too much already invested and leaving it at SL7 is a bigger problem , but this has definitely made us rethink our strategy for the next years.

Jan 29, 2021 | finance.yahoo.com

So it now owns the only commercial SGE offering along with PBSpro. Univa Grid Engine will now be referred to as Altair Grid Engine.

Altair will continue to invest in Univa's technology to support existing customers while integrating with Altair's HPC and data analytics solutions. These efforts will further enhance the capability and performance requirements for all Altair customers and solidify the company's leadership in workload management and cloud enablement for HPC. Univa has two flagship products:

· Univa ® Grid Engine ® is a leading distributed resource management system to optimize workloads and resources in thousands of data centers, improving return-on-investment and delivering better results faster.

Dec 27, 2020 | groups.io

Karl W. Schulz Dec 18 #3955

Hi all,

Just wanted to chime in on this discussion to mention that the OpenHPC technical steering committee had a chance to get together earlier this week and, as you might imagine, the CentOS news was a big topic of conversation. As a result, we have drafted a starting response to the OpenHPC community which is available at the following url:

https://github.com/openhpc/ohpc/wiki/files/OpenHPC-CentOS-Response.pdf

For the short term, it is business as usual, but we will be evaluating multiple options and hope to have more technical guidance once we see how things shake out.

-k

Feb 26, 2019 | www.softpanorama.org

Adapted for HPC clusters by Nikolai Bezroukov on Feb 25, 2019

Status of this MemoThis memo provides information for the HPC community. This memo does not specify an standard of any kind, except in the sense that all standards must implicitly follow the fundamental truths. Distribution of this memo is unlimited.AbstractThis memo documents seven fundamental truths about computational clusters.AcknowledgementsThe truths described in this memo result from extensive study over an extended period of time by many people, some of whom did not intend to contribute to this work. The editor would like to thank the HPC community for helping to refine these truths.1. IntroductionThese truths apply to HPC clusters, and are not limited to TCP/IP, GPFS, scheduler, or any particular component of HPC cluster.2. The Fundamental Truths(1) Some things in life can never be fully appreciated nor understood unless experienced firsthand. Most problems in a large computational clusters can never be fully understood by someone who never run a cluster with more then 16, 32 or 64 nodes.(2) Every problem or upgrade on a large cluster always takes at least twice longer to solve than it seems like it should.

(3) One size never fits all, but complexity increases non-linearly with the size of the cluster. In some areas (storage, networking) the problem grows exponentially with the size of the cluster.(3a) Supercluster is an attempt to try to solve multiple separate problems via a single complex solution. But its size creates another set of problem which might outweigh the set of problem it intends to solve. .(4) On a large cluster issues are more interconnected with each other and a typical failure often affects larger number of nodes or components and take more effort to resolve(3b) With sufficient thrust, pigs fly just fine. However, this is not necessarily a good idea.

(3c) Large, Fast, Cheap: you can't have all three.

(4a) Superclusters proves that it is always possible to add another level of complexity into each cluster layer, especially at networking layer until only applications that use a single node run well.(5) Functioning of a large computational cluster is undistinguishable from magic.(4b) On a supercluster it is easier to move a networking problem around, than it is to solve it.

(4c)You never understand how bad and buggy is your favorite scheduler is until you deploy it on a supercluster.

(4d) If the solution that was put in place for the particular cluster does not work, it will always be proposed later for new cluster under a different name...

(5a) User superstition that "the more cores, the better" is incurable, but the user desire to run their mostly useless models on as many cores as possible can and should be resisted.(5b) If you do not know what to do with the problem on the supercluster you can always "wave a dead chicken" e.g. perform a ritual operation on crashed software or hardware that most probably will be futile but is nevertheless useful to satisfy "important others" and frustrated users that an appropriate degree of effort has been expended.

(5c) Downtime of the large computational clusters has some mysterious religious ritual quality in it in modest doze increases the respect of the users toward the HPC support team. But only to a certain limit.

(6) "The more cores the better" is a religious truth similar to the belief in Flat Earth during Middle Ages and any attempt to challenge it might lead to burning of the heretic at the stake.

(6a) The number of cores in the cluster has a religious quality and in the eyes of users and management has power almost equal to Divine Spirit. In the stage of acquisition of the hardware it outweighs all other considerations, driving towards the cluster with maximum possible number of cores within the allocated budget Attempt to resist buying for computational nodes faster CPUs with less cores are futile.

(6b) The best way to change your preferred hardware supplier is buy a large computational cluster.

(6c) Users will always routinely abuse the facility by specifying more cores than they actually need for their runs

(7) For all resources, whatever the is the size of your cluster, you always need more.

(7a) Overhead increases exponentially with the size of the cluster until all resources of the support team are consumed by the maintaining the cluster and none can be spend for helping the users.

(7b) Users will always try to run more applications and use more languages that the cluster team can meaningfully support.(7c) The most pressure on the support team is exerted by the users with less useful for the company and/or most questionable from the scientific standpoint applications.

(7d) The level of ignorance in computer architecture of 99% of users of large computational clusters can't be overestimated.

Security Considerations

This memo raises no security issues. However, security protocols used in the HPC cluster are subject to those truths.References

The references have been deleted in order to protect the guilty and avoid enriching the lawyers.

www.thegeekdiary.com

The DRBD (stands for Distributed Replicated Block Device ) is a distributed, flexible and versatile replicated storage solution for Linux. It mirrors the content of block devices such as hard disks, partitions, logical volumes etc. between servers.It involves a copy of data on two storage devices, such that if one fails, the data on the other can be used.

You can think of it somewhat like a network RAID 1 configuration with the disks mirrored across servers. However, it operates in a very different way from RAID and even network RAID.

Originally, DRBD was mainly used in high availability (HA) computer clusters, however, starting with version 9, it can be used to deploy cloud storage solutions. In this article, we will show how to install DRBD in CentOS and briefly demonstrate how to use it to replicate storage (partition) on two servers.

... ... ...

For the purpose of this article, we are using two nodes cluster for this setup.

- Node1 : 192.168.56.101 tecmint.tecmint.lan

- Node2 : 192.168.10.102 server1.tecmint.lan

... ... ...

Reference : The DRBD User's Guide .Summary

Jan 19, 2019 | www.tecmint.com

DRBD is extremely flexible and versatile, which makes it a storage replication solution suitable for adding HA to just about any application. In this article, we have shown how to install DRBD in CentOS 7 and briefly demonstrated how to use it to replicate storage. Feel free to share your thoughts with us via the feedback form below.

Jan 08, 2019 | blog.centos.org

Recent events

In November, we had a small presence at SuperComputing 18 in Dallas. While there, we talked with a few of the teams participating in the Student Cluster Competition. As usual, student supercomputing is #PoweredByCentOS, with 11 of the 15 participating teams running CentOS. (One Fedora, two Ubuntu, one Debian.)

Our congratulations go out to the team from Tsinghua University, who won this year's competition !

Jan 08, 2019 | blog.centos.org

I'm at SC18 - the premiere international supercomputing event - in Dallas, Texas. Every year at this event, hundreds of companies and universities gather to show what they've been doing in the past year in supercomputing and HPC.

As usual, the highlight of this event for me is the student cluster competition. Teams from around the world gather to compete on which team can make the fastest, most efficient supercomputer within certain constraints. In particular, the machine must be built from commercially available components and not consume more than a certain amount of electrical power while doing so.

This year's teams come from Europe, North America, Asia, and Australia, and come from a pool of applicants of hundreds of universities who have been narrowed down to this list.

Of the 15 teams participating, 11 of them are running their clusters on CentOS. There are 2 running Ubuntu, one Running Debian, and one running fedora. This is, of course, typical at these competitions, with Centos leading as the preferred supercomputing operating system.

Dec 16, 2018 | liv.ac.uk

Index of /downloads/SGE/releases/8.1.9

This is Son of Grid Engine version v8.1.9. See <http://arc.liv.ac.uk/repos/darcs/sge-release/NEWS> for information on recent changes. See <https://arc.liv.ac.uk/trac/SGE> for more information. The .deb and .rpm packages and the source tarball are signed with PGP key B5AEEEA9. * sge-8.1.9.tar.gz, sge-8.1.9.tar.gz.sig: Source tarball and PGP signature * RPMs for Red Hat-ish systems, installing into /opt/sge with GUI installer and Hadoop support: * gridengine-8.1.9-1.el5.src.rpm: Source RPM for RHEL, Fedora * gridengine-*8.1.9-1.el6.x86_64.rpm: RPMs for RHEL 6 (and CentOS, SL) See <https://copr.fedorainfracloud.org/coprs/loveshack/SGE/> for hwloc 1.6 RPMs if you need them for building/installing RHEL5 RPMs. * Debian packages, installing into /opt/sge, not providing the GUI installer or Hadoop support: * sge_8.1.9.dsc, sge_8.1.9.tar.gz: Source packaging. See <http://wiki.debian.org/BuildingAPackage>, and see <http://arc.liv.ac.uk/downloads/SGE/support/> if you need (a more recent) hwloc. * sge-common_8.1.9_all.deb, sge-doc_8.1.9_all.deb, sge_8.1.9_amd64.deb, sge-dbg_8.1.9_amd64.deb: Binary packages built on Debian Jessie. * debian-8.1.9.tar.gz: Alternative Debian packaging, for installing into /usr. * arco-8.1.6.tar.gz: ARCo source (unchanged from previous version) * dbwriter-8.1.6.tar.gz: compiled dbwriter component of ARCo (unchanged from previous version) More RPMs (unsigned, unfortunately) are available at <http://copr.fedoraproject.org/coprs/loveshack/SGE/>.

Dec 16, 2018 | github.com

docker-sge

Dockerfile to build a container with SGE installed.

To build type:

git clone [email protected]:gawbul/docker-sge.git cd docker-sge docker build -t gawbul/docker-sge .To pull from the Docker Hub type:

docker pull gawbul/docker-sgeTo run the image in a container type:

docker run -it --rm gawbul/docker-sge login -f sgeadminYou need the

login -f sgeadminas root isn't allowed to submit jobsTo submit a job run:

echo "echo Running test from $HOSTNAME" | qsub

Dec 16, 2018 | hub.docker.com

Docker SGE (Son of Grid Engine) Kubernetes All-in-One Usage

Kubernetes Step-by-Step Usage

- Setup Kubernetes cluster, DNS service, and SGE cluster

Set

KUBE_SERVER,DNS_DOMAIN, andDNS_SERVER_IPcurrectly. And run./kubernetes/setup_all.shwith number of SGE workers.export KUBE_SERVER=xxx.xxx.xxx.xxx export DNS_DOMAIN=xxxx.xxxx export DNS_SERVER_IP=xxx.xxx.xxx.xxx ./kubernetes/setup_all.sh 20- Submit Job

kubectl exec sgemaster -- sudo su sgeuser bash -c '. /etc/profile.d/sge.sh; echo "/bin/hostname" | qsub' kubectl exec sgemaster -- sudo su sgeuser bash -c 'cat /home/sgeuser/STDIN.o1'- Add SGE workers

./kubernetes/add_sge_workers.sh 10Simple Docker Command Usage

- Setup Kubernetes cluster

./kubernetes/setup_k8s.sh- Setup DNS service

Set

KUBE_SERVER,DNS_DOMAIN, andDNS_SERVER_IPcurrectlyexport KUBE_SERVER=xxx.xxx.xxx.xxx export DNS_DOMAIN=xxxx.xxxx export DNS_SERVER_IP=xxx.xxx.xxx.xxx ./kubernetes/setup_dns.sh- Check DNS service

- Boot test client

kubectl create -f ./kubernetes/skydns/busybox.yaml

- Check normal lookup

kubectl exec busybox -- nslookup kubernetes

- Check reverse lookup

kubectl exec busybox -- nslookup 10.0.0.1- Check pod name lookup

kubectl exec busybox -- nslookup busybox.default- Setup SGE cluster

Run

./kubernetes/setup_sge.shwith number of SGE workers../kubernetes/setup_sge.sh 10- Submit job

kubectl exec sgemaster -- sudo su sgeuser bash -c '. /etc/profile.d/sge.sh; echo "/bin/hostname" | qsub' kubectl exec sgemaster -- sudo su sgeuser bash -c 'cat /home/sgeuser/STDIN.o1'- Add SGE workers

./kubernetes/add_sge_workers.sh 10

- Load nfsd module

modprobe nfsd- Boot DNS server

docker run -d --hostname resolvable -v /var/run/docker.sock:/tmp/docker.sock -v /etc/resolv.conf:/tmp/resolv.conf mgood/resolvable- Boot NFS servers

docker run -d --name nfshome --privileged cpuguy83/nfs-server /exports docker run -d --name nfsopt --privileged cpuguy83/nfs-server /exports- Boot SGE master

docker run -d -h sgemaster --name sgemaster --privileged --link nfshome:nfshome --link nfsopt:nfsopt wtakase/sge-master:ubuntu- Boot SGE workers

docker run -d -h sgeworker01 --name sgeworker01 --privileged --link sgemaster:sgemaster --link nfshome:nfshome --link nfsopt:nfsopt wtakase/sge-worker:ubuntu docker run -d -h sgeworker02 --name sgeworker02 --privileged --link sgemaster:sgemaster --link nfshome:nfshome --link nfsopt:nfsopt wtakase/sge-worker:ubuntu- Submit job

docker exec -u sgeuser -it sgemaster bash -c '. /etc/profile.d/sge.sh; echo "/bin/hostname" | qsub' docker exec -u sgeuser -it sgemaster cat /home/sgeuser/STDIN.o1

Nov 08, 2018 | liv.ac.uk

I installed SGE on Centos 7 back in January this year. If my recolection is correct, the procedure was analogous to the instructions for Centos 6. There were some issues with the firewalld service (make sure that it is not blocking SGE), as well as some issues with SSL.

Check out these threads for reference:http://arc.liv.ac.uk/pipermail/sge-discuss/2017-January/001050.html

Max

Sep 07, 2018 | auckland.ac.nz

Experiences with Sun Grid Engine

In October 2007 I updated the Sun Grid Engine installed here at the Department of Statistics and publicised its presence and how it can be used. We have a number of computation hosts (some using Māori fish names as fish are often fast) and a number of users who wish to use the computation power. Matching users to machines has always been somewhat problematic.

Fortunately for us, SGE automatically finds a machine to run compute jobs on . When you submit your job you can define certain characteristics, eg, the genetics people like to have at least 2GB of real free RAM per job, so SGE finds you a machine with that much free memory. All problems solved!

Let's find out how to submit jobs ! (The installation and administration section probably won't interest you much.)

I gave a talk on 19 February 2008-02-19 to the Department, giving a quick overview of the need for the grid and how to rearrange tasks to better make use of parallelism.

- Installation

- Administration

- Submitting jobs

- Thrashing the Grid

- Advanced methods of queue submission

- Talk at Department Retreat

- Talk for Department Seminar

- Summary

Installation

My installation isn't as polished as Werner's setup, but it comes with more carrots and sticks and informational emails to heavy users of computing resources.

For this very simple setup I first selected a master host, stat1. This is also the submit host. The documentation explains how to go about setting up a master host.

Installation for the master involved:

- Setting up a configuration file, based on the default configuration.

- Uncompressing the common and architecture-specific binaries into /opt/sge

- Running the installation. (Correcting mistakes, running again.)

- Success!

With the master setup I was ready to add compute hosts. This procedure was repeated for each host. (Thankfully a quick for loop in bash with an ssh command made this step very easy.)

- Login to the host

- Create

/opt/sge.- Uncompress the common and architecture-specific binaries into

/opt/sge- Copy across the cluster configuration from

/opt/sge/default/common. (I'm not so sure on this step, but I get strange errors if I don't do this.)- Add the host to the cluster. (Run qhost on the master.)

- Run the installation, using the configuration file from step 1 of the master. (Correcting mistakes, running again. Mistakes are hidden in

/tmp/install_execd.*until the installation finishes. There's a problem where if/opt/sge/default/common/install_logsis not writeable by the user running the installation then it will be silently failing and retrying in the background. Installation is pretty much instantaneous, unless it's failing silently.)

- As a sub-note, you receive architecture errors on Fedora Core. You can fix this by editing

/opt/sge/util/archand changing line 248 that reads3|4|5)to3|4|5|6).- Success!

If you are now to run qhost on some host, eg, the master, you will now see all your hosts sitting waiting for instructions.

Administration

The fastest way to check if the Grid is working is to run qhost , which lists all the hosts in the Grid and their status. If you're seeing hyphens it means that host has disappeared. Is the daemon stopped, or has someone killed the machine?

The glossiest way to keep things up to date is to use qmon . I have it listed as an application in X11.app on my Mac. The application command is as follows. Change 'master' to the hostname of the Grid master. I hope you have SSH keys already setup.

ssh master -Y . /opt/sge/default/common/settings.sh \; qmonWant to gloat about how many CPUs you have in your cluster? (Does not work with machines that have > 100 CPU cores.)

admin@master:~$ qhost | sed -e 's/^.\{35\}[^0-9]\+//' | cut -d" " -f1Adding Administrators

SGE will probably run under a user you created it known as "sgeadmin". "root" does not automatically become all powerful in the Grid's eyes, so you probably want to add your usual user account as a Manager or Operator. (Have a look in the manual for how to do this.) It will make your life a lot easier.

Automatically sourcing environment

Normally you have to manually source the environment variables, eg, SGE_ROOT, that make things work. On your submit hosts you can have this setup to be done automatically for you.

Create links from /etc/profile.d to the settings files in /opt/sge/default/common and they'll be automatically sourced for bash and tcsh (at least on Redhat).

Slots

The fastest processing you'll do is when you have one CPU core working on one problem. This is how the Grid is setup by default. Each CPU core on the Grid is a slot into which a job can be put.

If you have people logging on to the machines and checking their email, or being naughty and running jobs by hand instead of via the Grid engine, these calculations get mucked up. Yes, there still is a slot there, but it is competing with something being run locally. The Grid finds a machine with a free slot and the lowest load for when it runs your job so this won't be a problem until the Grid is heavily laden.

Setting up queues

Queues are useful for doing crude prioritisation. Typically a job gets put in the default queue and when a slot becomes free it runs.

If the user has access to more than one queue, and there is a free slot in that queue, then the job gets bumped into that slot.

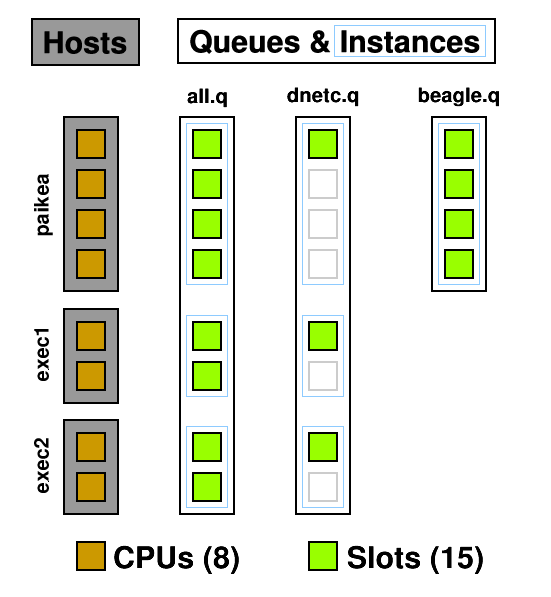

A queue instance is the queue on a host that it can be run on. 10 hosts, 3 queues = 30 queue instances. In the below example you can see three queues and seven queue instances : all.q@paikea, dnetc.q@paikea, beagle.q@paikea, all.q@exec1, dnetc.q@exec1, all.q@exec2, dnetc.q@exec2. Each queue can have a list of machines it runs on so, for example, the heavy genetics work in beagle.q can be run only on the machines attached to the SAN holding the genetics data. A queue does not have to include all hosts, ie, @allhosts.)

From this diagram you can see how CPUs can become oversubscribed. all.q covers every CPU. dnetc.q covers some of those CPUs a second time. Uh-oh! (dnetc.q is setup to use one slot per queue instance. That means that even if there are 10 CPUs on a given host, it will only use 1 of those.) This is something to consider when setting up queues and giving users access to them. Users can't put jobs into queues they don't have access to, so the only people causing contention are those with access to multiple queues but don't specify a queue ( -q ) when submitting.

Another use for queues are subordinate queues . I run low priority jobs in dnetc.q. When the main queue gets busy, all the jobs in dnetc.q are suspended until the main queue's load decreases. To do this I edited all.q, and under Subordinates added dnetc.q.

So far the shortest queue I've managed to make is one that uses 1 slot on each host it is allowed to run on. There is some talk in the documentation regarding user defined resources ( complexes ) which, much like licenses, can be "consumed" by jobs, thus limiting the number of concurrent jobs that can be run. (This may be useful for running an instance of Folding@Home, as it is not thread-safe , so you can set it up with a single "license".)

You can also change the default nice value of processes, but possibly the most useful setting is to turn on "rerunnable", which allows a task to be killed and run again on a different host.

Parallel Environment

Something that works better than queues and slots is to set up a

parallel environment. This can have a limited number of slots which counts over the entire grid and over every queue instance. As an example, Folding@Home is not thread safe. Each running thread needs its own work directory.How can you avoid contention in this case? Make each working directory a parallel environment, and limit the number of slots to 1.

I have four working directories named fah-a to fah-d . Each contains its own installation of the Folding@Home client:

$ ls ~/grid/fah-a/ fah-a client.cfg FAH504-Linux.exe workFor each of these directories I have created a parallel environment:

admin@master:~$ qconf -sp fah-a pe_name fah-a slots 1 user_lists fahThese parallel environments are made available to all queues that the job can be run in and all users that have access to the working directory - which is just me.

The script to run the client is a marvel of grid arguments. It requests the parallel environment, bills the job to the Folding@Home project, names the project, etc. See for yourself:

#!/bin/sh # use bash #$ -S /bin/sh # current directory #$ -cwd # merge output #$ -j y # mail at end #$ -m e # project #$ -P fah # name in queue #$ -N fah-a # parallel environment #$ -pe fah-a 1 ./FAH504-Linux.exe -oneunitNote the -pe argument that says this job requires one slot worth of fah-a please.

Not a grid option, but the -oneunit flag for the folding client is important as this causes the job to quit after one work unit and the next work unit can be shuffled around to an appropriate host with a low load whose queue isn't disabled. Otherwise the client could end up running in a disabled queue for a month without nearing an end.

With the grid taking care of the parallel environment I no longer need to worry about manually setting up job holds so that I can enqueue multiple units for the same work directory. -t 1-20 ahoy!

Complex Configuration

An alternative to the parallel environment is to use a Complex. You create a new complex, say how many slots are available, and then let people consume them!

- In the QMON Complex Configuration, add a complex called "fah_l", type INT, relation <=, requestable YES, consumable YES, default 0. Add, then Commit.

- I can't manage to get this through QMON, so I do it from the command line. qconf -me global and then add fah_l=1 to the complex_values.

- Again through the command line. qconf -mq all.q and then add fah_l=1 to the complex_values. Change this value for the other queues. (Note that a value of 0 means jobs requesting this complex cannot be run in this queue.)

- When starting a job, add -l fah_l=1 to the requirements.

I had a problem to start off with, where qstat was telling me that -25 licenses were available. However this is due to the default value, so make sure that is 0!

Using Complexes I have set up license handling for Matlab and Splus .

- -l splus=1 (to request a Splus license)

- -l matlab=1 (to request a Matlab license)

- -l ml=1,matlabc=1 (to request a Matlab license and a Matlab Compiler license)

- -l ml=1,matlabst=1 (to request a Matlab license and a Matlab Statistics Toolbox license)

As one host group does not have Splus installed on them I simply set that host group to have 0 Splus licenses available. A license will never be available on the @gradroom host group, thus Splus jobs will never be queued there.

Quotas

Instead of Complexes and parallel environments, you could try a quota!

Please excuse the short details:

admin@master$ qconf -srqsl admin@master$ qconf -mrqs lm2007_slots { name lm2007_slots description Limit the lm2007 project to 20 slots across the grid enabled TRUE limit projects lm2007 to slots=20 }Pending jobs

Want to know why a job isn't running?

- Job Control

- Pending Jobs

- Select a job

- Why ?

This is the same as qstat -f , shown at the bottom of this page.

Using Calendars

A calendar is a list of days and times along with states: off or suspended. Unless specified the state is on.

A queue, or even a single queue instance, can have a calendar attached to it. When the calendar says that the queue should now be "off" then the queue enters the disabled (D) state. Running jobs can continue, but no new jobs are started. If the calendar says it should be suspended then the queue enters the suspended (S) state and all currently running jobs are stopped (SIGSTOP).

First, create the calendar. We have an upgrade for paikea scheduled for 17 January:

admin@master$ qconf -scal paikeaupgrade calendar_name paikeaupgrade year 17.1.2008=off week NONEBy the time we get around to opening up paikea's case and pull out the memory jobs will have had several hours to complete after the queue is disabled. Now, we have to apply this calendar to every queue instance on this host. You can do this all through qmon but I'm doing it from the command line because I can. Simply edit the calendar line to append the hostname and calendar name:

admin@master$ qconf -mq all.q ... calendar NONE,[paikea=paikeaupgrade] ...Repeat this for all the queues.

There is a user who likes to use one particular machine and doesn't like jobs running while he's at the console. Looking at the usage graphs I've found out when he is using the machine and created a calendar based on this:

admin@master$ qconf -scal michael calendar_name michael year NONE week mon-sat=13-21=offThis calendar is obviously recurring weekly. As in the above example it was applied to queues on his machine. Note that the end time is 21, which covers the period from 2100 to 2159.

Suspending jobs automatically

Due to the number of slots being equal to the number of processors, system load is theoretically not going to exceed 1.00 (when divided by the number of processors). This value can be found in the np_load_* complexes .

But (and this is a big butt) there are a number of ways in which the load could go past a reasonable level:

- There are interactive users: all of the machines in the grad room (@gradroom) have console access.

- Someone logged in when they should not have: we're planning to disable ssh access to @ngaika except for %admins.

- A job is multi-threaded, and the submitter didn't mention this. If your Java program is using 'new Thread' somewhere, then it's likely you'll end up using multiple CPUs. Request more than one CPU when you're submitting the job. (-l slots=4 does not work.)

For example, with paikea , there are three queues:

- all.q (4 slots)

- paikea.q (4 slots)

- beagle.q (overlapping with the other two queues)

all.q is filled first, then paikea.q. beagle.q, by project and owner restrictions, is only available to the sponsor of the hardware. When their jobs come in, they can get put into beagle.q, even if the other slots are full. When the load average comes up, other tasks get suspended: first in paikea.q, then in all.q.

Let's see the configuration:

qname beagle.q hostlist paikea.stat.auckland.ac.nz priority 19,[paikea.stat.auckland.ac.nz=15] user_lists beagle projects beagleWe have the limited access to this queue through both user lists and projects. Also, we're setting the Unix process priority to be higher than the other queues.

qname paikea.q hostlist paikea.stat.auckland.ac.nz suspend_thresholds NONE,[paikea.stat.auckland.ac.nz=np_load_short=1.01] nsuspend 1 suspend_interval 00:05:00 slots 0,[paikea.stat.auckland.ac.nz=4]The magic here being that suspend_thresholds is set to 1.01 for np_load_short. This is checked every 5 minutes, and 1 process is suspended at a time. This value can be adjusted to get what you want, but it seems to be doing the trick according to graphs and monitoring the load. np_load_short is chosen because it updates the most frequently (every minute), more than np_load_medium (every five), and np_load_long (every fifteen minutes).

all.q is fairly unremarkable. It just defines four slots on paikea.

Submitting jobs Jobs are submitted to the Grid using qsub . Jobs are shell scripts containing commands to be run.

If you would normally run your job by typing ./runjob , you can submit it to the Grid and have it run by typing: qsub -cwd ./runjob

Jobs can be submitted while logged on to any submit host: sge-submit.stat.auckland.ac.nz .

For all the commands on this page I'm going to assume the settings are all loaded and you are logged in to a submit host. If you've logged in to a submit host then they'll have been sourced for you. You can source the settings yourself if required: . /opt/sge/default/common/settings.sh - the dot and space at the front are important .

Depending on the form your job is currently in they can be very easy to submit. I'm just going to go ahead and assume you have a shell script that runs the CPU-intensive computations you want and spits them out to the screen. For example, this tiny test.sh :

#!/bin/sh expr 3 + 5This computation is very CPU intensive!

Please note that the Sun Grid Engine ignores the bang path at the top of the script and will simply run the file using the queue's default shell which is csh. If you want bash, then request it by adding the very cryptic line: #$ -S /bin/sh

Now, let's submit it to the grid for running: Skip submission output

user@submit:~$ qsub test.sh Your job 464 ("test.sh") has been submitted user@submit:~$ qstat job-ID prior name user state submit/start at queue slots ja-task-ID ------------------------------------------------------------------------------------------------------- 464 0.00000 test.sh user qw 01/10/2008 10:48:03 1There goes our job, waiting in the queue to be run. We can run qstat a few more times to see it as it goes. It'll be run on some host somewhere, then disappear from the list once it is completed. You can find the output by looking in your home directory: Skip finding output

user@submit:~$ ls test.sh* test.sh test.sh.e464 test.sh.o464 user@submit:~$ cat test.sh.o464 8The output file is named based on the name of the job, the letter o , and the number of the job.

If your job had problems running have a look in these files. They probably explain what went wrong.

Easiest way to submit R jobs

Here are two scripts and a symlink I created to make it easy as possible to submit R jobs to your Grid:

qsub-R

If you normally do something along the lines of:

user@exec:~$ nohup nice R CMD BATCH toodles.RNow all you need to do is:

user@submit:~$ qsub-R toodles.R Your job 3540 ("toodles.R") has been submittedqsub-R is linked to submit-R, a script I wrote. It calls qsub and submits a simple shell wrapper with the R file as an argument. It ends up in the queue and eventually your output arrives in the current directory: toodles.R.o3540

Download it and install it. You'll need to make the ' qsub-R ' symlink to ' 3rd_party/uoa-dos/submit-R ' yourself, although there is one in the package already for lx24-x86: qsub-R.tar (10 KiB, tar)

Thrashing the Grid

Sometimes you just want to give something a good thrashing, right? Never experienced that? Maybe it's just me. Anyway, here are two ideas for submitting lots and lots of jobs:

- Write a script that creates jobs and submits them

- Submit the same thing a thousand times

There are merits to each of these methods, and both of them mimic typical operation of the grid, so I'm going to explain them both.

Computing every permutation

If you have two lists of values and wish to calculate every permutation, then this method will do the trick. There's a more complicated solution below .

qsub will happily pass on arguments you supply to the script when it runs. Let us modify our test.sh to take advantage of this:

#!/bin/sh #$ -S /bin/sh echo Factors $1 and $2 expr $1 + $2Now, we just need to submit every permutation to Grid:

user@submit:~$ for A in 1 2 3 4 5 ; do for B in 1 2 3 4 5 ; do qsub test.sh $A $B ; done ; doneAway the jobs go to be computed. If we have a look at different jobs we can see that it works. For example, job 487 comes up with:

user@submit:~$ cat test.sh.?487 Factors 3 and 5 8Right on, brother! That's the same answer as we got previously when we hard coded the values of 3 and 5 into the file. We have algorithm correctness!

If we use qacct to look up the job information we find that it was computed on host mako (shark) and used 1 units of wallclock and 0 units of CPU.

Computing every permutation, with R

This method of creating job scripts and running them will allow you to compute every permutation of two variables. Note that you can supply arguments to your script, so it is not actually necessary to over-engineer your solution quite this much. This script has the added advantage of not clobbering previous computations. I wrote this solution for Yannan Jiang and Chris Wild and posted it to the r-downunder mailing list in December 2007. ( There is another method of doing this! )

In this particular example the output of the R command is deterministic, so it does not matter that a previous run (which could have taken days of computing time) gets overwritten, however I also work around this problem.

To start with I have my simple template of R commands (template.R):

alpha <- ALPHA beta <- c(BETA) # magic happens here alpha betaThe ALPHA and BETA parameters change for each time this simulation is run. I have these values stored, one per line, in the files ALPHA and BETA.

ALPHA:

0.9 0.8 0.7BETA (please note that these contents must work both in filenames, bash commands, and R commands):

0,0,1 0,1,0 1,0,0I have a shell script that takes each combination of ALPHA x BETA, creates a .R file based on the template, and submits the job to the Grid. This is called submit.sh:

#!/bin/sh if [ "X${SGE_ROOT}" == "X" ] ; then echo Run: . /opt/sge/default/common/settings.sh exit fi cat ALPHA | while read ALPHA ; do cat BETA | while read BETA ; do FILE="t-${ALPHA}-${BETA}" # create our R file cat template.R | sed -e "s/ALPHA/${ALPHA}/" -e "s/BETA/${BETA}/" > ${FILE}.R # create a script echo \#!/bin/sh > ${FILE}.sh echo \#$ -S /bin/sh >> ${FILE}.sh echo "if [ -f ${FILE}.Rout ] ; then echo ERROR: output file exists already ; exit 5 ; fi" >> ${FILE}.sh echo R CMD BATCH ${FILE}.R ${FILE}.Rout >> ${FILE}.sh chmod +x ${FILE}.sh # submit job to grid qsub -j y -cwd ${FILE}.sh done done qstatWhen this script runs it will, for each permutation of ALPHA and BETA,

- create an R file based on the template, filling in the values of ALPHA and BETA,

- create a script that checks if this permutation has been calculated and then calls R,

- submits this job to the queue

... and finally shows the jobs waiting in the queue to execute.

Once computation is complete you will have a lot of files waiting in your directory. You will have:

- template.R -- our R commands template

- t-ALPHA-BETA.sh -- generated shell script that calls R

- t-ALPHA-BETA.R -- generated (from template) R commands

- t-ALPHA-BETA.Rout -- output from the command; this is a quirk of R

- t-ALPHA-BETA.sh.oNNN -- any output or errors from job (merged using qsub -j y )

The output files, stderr and stdout from when R was run, are always empty (unless something goes terribly wrong). For each permutation we receive four files. There are nine permutations (n ALPHA = 3, n BETA = 3, 3 × 3 = 9). A total of 36 files are created. (This example has been pared down from the original for purposes of demonstration.)

My initial question to the r-downunder list was how to get the output from R to stdout and thus t-ALPHA-BETA.sh.oNNN instead of t-ALPHA-BETA.Rout, however in this particular case, I have dodged that. In fact, being deterministic it is better that this job writes its output to a known filename, so I can do a one line test to see if the job has already been run.

I should also point out the -cwd option to the qsub command, which causes the job to be run in the current directory (which if it is in your home directory is accessible in the same place on all machines), rather than in /tmp/* . This allows us to find the R output, since R writes it to the directory it is currently in. Otherwise it could be discarded as a temporary file once the job ends!

Submit the same thing a thousand times

Say you have a job that, for example, pulls in random numbers and runs a simulation, or it grabs a work unit from a server, computes it, then quits. ( FAH -oneunit springs to mind, although it cannot be run in parallel. Refer to the parallel environment setup .) The script is identical every time.

SGE sets the SGE_JOB_ID environment variable which tells you the job number. You can use this as some sort of crude method for generating a unique file name for your output. However, the best way is to write everything to standard output (stdout) and let the Grid take care of returning it to you.

There are also Array Jobs which are

identical tasks being differentiated only by an index number, available through the -t option on qsub . This sets the environment variable of SGE_TASK_ID.For this example I will be using the Distributed Sleep Server . The Distributed Sleep Project passes out work units, packages of time, to clients who then process the unit. The Distributed Sleep Client, dsleepc , connects to the server to fetch a work unit. They can then be processed using the sleep command. A sample script: Skip sample script

#!/bin/sh #$ -S /bin/sh WORKUNIT=`dsleepc` sleep $WORKUNIT && echo Processed $WORKUNIT secondsWork units of 300 seconds typically take about five minutes to complete, but are known to be slower on Windows. (The more adventurous can add the -bigunit option to get a larger package for themselves, but note that they take longer to process.)

So, let us submit an array job to the Grid. We are going to submit one job with 100 tasks, and they will be numbered 1 to 100:

user@submit:~$ qsub -t 1-100 dsleep Your job-array 490.1-100:1 ("dsleep") has been submittedJob 490, tasks 1 to 100, are waiting to run. Later we can come back and pick up our output from our home directory. You can also visit the Distributed Sleep Project and check the statistics server to see if your work units have been received.

Note that running 100 jobs will fill the default queue, all.q. This has two effects. First, if you have any other queues that you can access jobs will be added to those queues and then run. (As the current setup of queues overlaps with CPUs this can lead to over subscription of processing resources. This can cause jobs to be paused, depending on how the queue is setup.) Second, any subordinate queues to all.q will be put on hold until the jobs get freed up.

Array jobs, with R

Using the above method of submitting multiple jobs, we can access this and use it in our R script, as follows: Skip R script

# alpha+1 is found in the SGE TASK number (qsub -t) alphaenv <- Sys.getenv("SGE_TASK_ID") alpha <- (as.numeric(alphaenv)-1)Here the value of alpha is being pulled from the task number. Some manipulation is done of it, first to turn it from a string into a number, and secondly to change it into the expected form. Task numbers run from 1+, but in this case the code wants them to run from 0+.

Similar can be done with Java, by adding the environment value as an argument to invocation of the main class.

Advanced methods of queue submission

When you submit your job you have a lot of flexibility over it. Here are some options to consider that may make your life easier. Remember you can always look in the man page for qsub for more options and explanations.

qsub -N timmy test.shHere the job is called "timmy" and runs the script test.sh . Your the output files will be in timmy.[oe]*

The working directory is usually somewhere in /tmp on the execution host. To use a different working directory, eg, the current directory, use -cwd

qsub -cwd test.shTo request specific characteristics of the execution host, for example, sufficient memory, use the -l argument.

qsub -l mem_free=2500M test.shThis above example requests 2500 megabytes (M = 1024x1024, m = 1000x1000) of free physical memory (mem_free) on the remote host. This means it won't be run on a machine that has 2.0GB of memory, and will instead be put onto a machine with sufficient amounts of memory for BEAGLE Genetic Analysis . There are two other options for ensuring you get enough memory:

- Submit your job to the BEAGLE queue: -q beagle.q . This queue is specifically setup only on machines with a lot of free memory, and has 10 slots. You do have to be one of the allowed BEAGLE users to put jobs into this queue.

- Specify the amount of memory required in your script:

#$ -l mem=2500If your binary is architecture dependent you can ask for a particular architecture.

qsub -l arch=lx24-amd64 test.binThis can also be done in the script that calls the binary so you don't accidentally forget about including it.

#$ -l arch=lx24-amd64This requesting of resources can also be used to ask for a specific host, which goes against the idea of using the Grid to alleviate finding a host to use! Don't do this!

qsub -l hostname=mako test.shIf your job needs to be run multiple times then you can create an array job. You ask for a job to be run several times, and each run (or task) is given a unique task number which can be accessed through the environment variable SGE_TASK_ID. In each of these examples the script is run 50 times:

qsub -t 1-50 test.sh qsub -t 75-125 test.shYou can request a specific queue. Different queues have different characteristics.

- lm2007.q uses a maximum of one slot per host (although once I figure out how to configure it, it will use a maximum of six slots Grid-wide).

- dnetc.q is suspended when the main queue (all.q) is busy.

- beagle.q runs only on machines with enough memory to handle the data (although this can be requested with -l , as shown above).

qsub -q dnetc.q test.shA job can be held until a previous job completes. For example, this job will not run until job 380 completes:

qsub -hold_jid 380 test.shCan't figure out why your job isn't running? qstat can tell you:

qstat -j 490 ... lots of output ... scheduling info: queue instance "[email protected]" dropped because it is temporarily not available queue instance "[email protected]" dropped because it is full cannot run in queue "all.q" because it is not contained in its hard queue list (-q)Requesting licenses

Should you be using software that requires licenses then you should specify this when you submit the job. We have two licenses currently set up but can easily add more as requested:

- -l splus=1 (to request a Splus license)

- -l matlab=1 (to request a Matlab license)

- -l ml=1,matlabc=1 (to request a Matlab license and a Matlab Compiler license)

- -l ml=1,matlabst=1 (to request a Matlab license and a Matlab Statistics Toolbox license)

The Grid engine will hold your job until a Splus license or Matlab license becomes available.

Note: The Grid engine keeps track of the license pool independently of the license manager. If someone is using a license that the Grid doesn't know about, eg, an interactive session you left running on your desktop, then the count will be off. Believing a license is available, the Grid will run your job, but Splus will not run and your job will end. Here is a job script that will detect this error and then allow your job to be retried later: Skip Splus script

#!/bin/sh #$ -S /bin/bash # run in current directory, merge output #$ -cwd -j y # name the job #$ -N Splus-lic # require a single Splus license please #$ -l splus=1 Splus -headless < $1 RETVAL=$? if [ $RETVAL == 1 ] ; then echo No license for Splus sleep 60 exit 99 fi if [ $RETVAL == 127 ] ; then echo Splus not installed on this host # you could try something like this: #qalter -l splus=1,h=!`hostname` $JOB_ID sleep 60 exit 99 fi exit $RETVALPlease note that the script exits with code 99 to tell the Grid to reschedule this job (or task) later. Note also that the script, upon receiving the error, sleeps for a minute before exiting, thus slowing the loop of errors as the Grid continually reschedules the job until it runs successfully. Alternatively you can exit with error 100, which will cause the job to be held in the error (E) state until manually cleared to run again.

You can clear a job's error state by using qmod -c jobid .

Here's the same thing for Matlab. Only minor differences from running Splus: Skip Matlab script

#!/bin/sh #$ -S /bin/sh # run in current directory, merge output #$ -cwd -j y # name the job #$ -N ml # require a single Matlab license please #$ -l matlab=1 matlab -nodisplay < $1 RETVAL=$? if [ $RETVAL == 1 ] ; then echo No license for Matlab sleep 60 exit 99 fi if [ $RETVAL == 127 ] ; then echo Matlab not installed on this host, `hostname` # you could try something like this: #qalter -l matlab=1,h=!`hostname` $JOB_ID sleep 60 exit 99 fi exit $RETVALSave this as "run-matlab". To run your matlab.m file, submit with: qsub run-matlab matlab.m

Processing partial parts of input files in Java

Here is some code I wrote for Lyndon Walker to process a partial dataset in Java.

It comes with two parts: a job script that passes the correct arguments to Java, and some Java code that extracts the correct information from the dataset for processing.

First, the job script gives some Grid task environment variables to Java. Our job script is merely translating from the Grid to the simulation:

java Simulation $@ $SGE_TASK_ID $SGE_TASK_LASTThis does assume your shell is bash, not csh. If your job is in 10 tasks, then SGE_TASK_ID will be a number between 1 and 10, and SGE_TASK_LAST will be 10. I'm also assuming that you are starting your jobs from 1, but you can also change that setting and examine SGE_TASK_FIRST.

Within Java we now read these variables and act upon them:

sge_task_id = Integer.parseInt(args[args.length-2]); sge_task_last = Integer.parseInt(args[args.length-1]);For a more complete code listing, refer to sun-grid-qsub-java-partial.java (Simulation.java).

Preparing confidential datasets

The Grid setup here includes machines on which users can login. That creates the problem where someone might be able to snag a confidential dataset that is undergoing processing. One particular way to keep the files secure is as follows:

- Check we are able to securely delete files

- Locate a safe place to store files locally (the Grid sets up ${TMPDIR} to be unique for this job and task)

- Copy over dataset; it is expected you have setup password-less scp

- Preprocess dataset, eg, unencrypt

- Process dataset

- Delete dataset

A script that does this would look like the following: Skip dataset preparation script

#!/bin/sh #$ -S /bin/sh DATASET=confidential.csv # check our environment umask 0077 cd ${TMPDIR} chmod 0700 . # find srm SRM=`which srm` NOSRM=$? if [ $NOSRM -eq 1 ] ; then echo system srm not found on this host, exiting >> /dev/stderr exit 99 fi # copy files from data store RETRIES=0 while [ ${RETRIES} -lt 5 ] ; do ((RETRIES++)) scp user@filestore:/store/confidential/${DATASET} . if [ $? -eq 0 ] ; then RETRIES=5000 else # wait for up to a minute (MaxStartups 10 by default) sleep `expr ${RANDOM} / 542` fi done if [ ! -f ${DATASET} ] ; then # unable to copy dataset after 5 retries, quit but retry later echo unable to copy dataset from store >> /dev/stderr exit 99 fi # if you were decrypting the dataset, you would do that here # copy our code over too cp /mount/code/*.class . # process data java Simulation ${DATASET} # collect results # (We are just printing to the screen.) # clean up ${SRM} -v ${DATASET} >> /dev/stderr echo END >> /dev/stderrCode will need to be adjusted to match your particular requirements, but the basic form is sketched out above.

As the confidential data is only in files and directories that root and the running user can access, and the same precaution is taken with the datastore, then only the system administrator and the user who has the dataset has access to these files.

The one problem here is how to manage the password-less scp securely. As this is run unattended, it would not be possible to have a password on a file, nor to forward authentication to some local agent. It may be possible to grab the packets that make up the key material. There must be a better way to do this. Remember that the job script is stored world-readable in the Grid cell's spool, so nothing secret can be put in there either.

Talk at Department Retreat

I gave a talk about the Sun Grid Engine on 19 February 2008-02-19 to the Department, giving a quick overview of the need for the grid and how to rearrange tasks to better make use of parallelism. It was aimed at end users and summarises into neat slides the reason for using the grid engine as well as a tutorial and example on how to use it all.

Download: Talk (with notes) PDF 5.9MiB

Question time afterwards was very good. Here are, as I recall them, the questions and answers.

Which jobs are better suited to parallelism?

Q (Ross Ihaka): Which jobs are better suited to parallelism? (Jobs with large data sets do not lend themselves to this sort of parallelism due to I/O overheads.)

A: Most of the jobs being used here are CPU intensive. The grid copies your script to /tmp on the local machine on which it runs. You could copy your data file across as well at the start of the job, thus all your later I/O is local.

(This is a bit of a poor answer. I wasn't really expecting it.) Bayesian priors and multiple identical simulations (eg, MCMC differing only by random numbers) lend themselves well to being parallelised.

Can I make sure I always run on the fastest machine?

A: The grid finds the machine with the least load to run jobs on. If you pile all jobs onto one host, then that host will slow down and become the slowest overall. Submit it through the grid and some days you'll get the fast host, and some days you'll get the slow host, and it is better in the long run. Also it is fair for other users. You can force it with -l, however, it is selfish.

Preemptable queues?

Q (Nicholas Horton): Is there support for preemptable queues? A person who paid for a certain machine might like it to be available only to them when they require it all for themselves.

A: Yes, the Grid has support for queues like that. It can all be configured. This particular example will have to be looked in to further. Beagle.q, as an example, only runs on paikea and overlaps with all.q . Also when the load on paikea , again using that as an example, gets too high, jobs in a certain queue (dnetc.q) are stopped.

An updated answer: the owner of a host can have an exclusive queue that preempts the other queues on the host. When the system load is too high, less important jobs can be suspended using suspend_thresholds .

Is my desktop an execution host?

Q (Ross Ihaka): Did I see my desktop listed earlier?

A: No. So far the grid is only running on the servers in the basement and the desktops in the grad room. Desktops in staff offices and used by PhD candidates will have to opt in.

(Ross Ihaka) Offering your desktop to run as an execution host increases the total speed of the grid, but your desktop may run slower at times. It is a two way street.

Is there job migration?

A: It's crude, and depends on your job. If something goes wrong (eg, the server crashes, power goes out) your job can be restarted on another host. When queue instances become unavailable (eg, we're upgrading paikea) they can send a signal to your job, telling it to save its work and quit, then can be restarted on another host.

Migration to faster hosts

Q (Chris Wild): What happens if a faster host becomes available while my job is running?

A: Nothing. Your job will continue running on the host it is on until it ends. If a host is overloaded, and not due to the grid's fault, some jobs can be suspended until load decreases . The grid isn't migrating jobs. The best method is to break your job down into smaller jobs, so that when the next part of the job is started it gets put onto what is currently the best available host.

Over sufficient jobs it will become apparent that the faster host is processing more jobs than a slower host.

Desktops and calendars

Q (Stephane Guindon): What about when I'm not at my desktop. Can I have my machine be on the grid then, and when I get to the desktop the jobs are migrated?

A: Yes, we can set up calendars so that at certain times no new jobs will be started on your machine. Jobs that are already running will continue until they end. (Disabling the queue.) Since some jobs run for days this can appear to have no influence on how many jobs are running. Alternatively jobs can be paused, which frees up the CPU, but leaves the job sitting almost in limbo. (Suspending the queue.) Remember the grid isn't doing migration. It can stop your job and run it elsewhere (if you're using the -notify option on submission and handling the USR1 signal).

Jobs under the grid

Q (Sharon Browning): How can I tell if a job is running under the grid's control? It doesn't show this under top .

A: Try ps auxf . You will see the job taking a lot of CPU time, the parent script, and above that the grid (sge_shepherd and sge_execd).

Talk for Department Seminar